What if the most transformative technology in a company’s arsenal was also its biggest blind spot? Across countless organizations, generative AI is silently embedding itself into everyday SaaS tools—think chat summaries in messaging apps or automated transcriptions in video platforms. This invisible surge promises efficiency, but for security leaders, it poses a stark challenge: how can risks be managed when AI spreads across a tech stack without clear oversight? This question is no longer hypothetical; it’s a pressing reality demanding immediate attention.

The stakes are high. As AI becomes ubiquitous in SaaS environments, the absence of governance threatens to turn innovation into vulnerability: data leaks, compliance violations, and operational errors are just a few of the potential pitfalls. For those tasked with safeguarding organizational security, understanding and implementing AI governance is not just a strategic advantage; it is a necessity for protecting sensitive information and maintaining trust in an increasingly AI-driven landscape.

The Hidden Rise of AI in SaaS Ecosystems

Generative AI isn’t waiting for permission to transform workplaces; it’s already woven into the fabric of commonly used SaaS applications. From productivity suites offering AI-driven writing aids to collaboration tools that summarize discussions, vendors are racing to integrate these capabilities. As a result, many businesses are running AI features they may not even recognize as part of their systems, creating a sprawling network of unmonitored functionality.

Adoption is already close to universal. One survey found that 95% of U.S. companies now use generative AI, a figure that underscores the technology’s reach. Yet this adoption often bypasses traditional IT oversight, leaving security teams scrambling to map where and how AI operates within their environments. Without centralized control, the need for a structured approach to managing these tools becomes urgent.

Unpacking the Dangers of Unregulated AI in SaaS

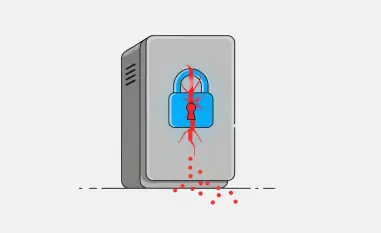

With AI’s widespread adoption comes a host of risks that cannot be ignored. Data security and privacy are the primary concerns, especially when AI tools process sensitive information without proper vetting. High-profile cases, such as major financial institutions restricting employee use of platforms like ChatGPT over concerns about sensitive data leaving their control, show how seriously organizations take the threat of unchecked AI usage in corporate settings.

Beyond data leaks, the potential for compliance breaches looms large. When employees use unapproved AI features, they may inadvertently violate stringent regulations like GDPR or HIPAA, exposing their organizations to hefty fines and reputational damage. For security leaders, the stakes are clear: without a framework to govern AI, the very tools meant to boost productivity could become conduits for costly liabilities.

Why AI Governance Is Essential for SaaS Security

AI governance, the policies and controls that ensure responsible usage, is the backbone for balancing innovation with protection in SaaS landscapes. One critical reason is the risk of data exposure: AI features often need access to extensive datasets, and without strict oversight, confidential information can be funneled to external models. One study found that 27% of organizations have banned generative AI over such privacy concerns, highlighting the gravity of the issue.

Another pressing factor is compliance with evolving legal standards. Shadow AI usage can lead to violations of laws worldwide, especially as the EU’s AI Act phases in stricter requirements from 2025 onward. Operational integrity is also at stake when AI systems produce biased or erroneous outputs, such as flawed financial predictions. Governance mitigates these risks and fosters ethical AI practices, which can strengthen customer trust and provide a competitive edge.

Challenges in Taming AI Within SaaS Frameworks

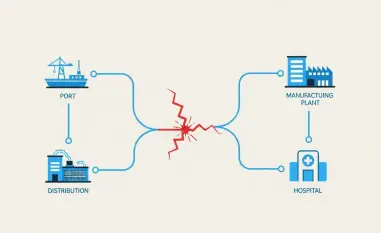

Governing AI in SaaS environments is far from straightforward, with visibility emerging as a significant hurdle. Security teams often lack a comprehensive view of AI tools in use, particularly when employees independently adopt solutions without approval. These shadow instances create hidden data flows that evade traditional security measures, making it nearly impossible to secure what remains unseen.
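One way to surface shadow AI is to reconcile what is actually connected to the SaaS stack against what has been approved. The sketch below is a minimal illustration under stated assumptions: it takes a hypothetical record format (`app`, `user`, `scopes`), for example parsed from an export of OAuth grants to third-party apps, and a hypothetical approved list mapping app names to the permissions signed off for them. The function `reconcile` and the app and scope names are illustrative only, not any product’s API. It flags unapproved apps along with how many users connected them, and approved apps holding more permissions than were approved.

```python
from collections import defaultdict


def reconcile(discovered: list[dict], approved: dict[str, set[str]]) -> dict:
    """Compare AI integrations observed in the SaaS stack with the approved list.

    discovered: records shaped like {"app": str, "user": str, "scopes": list[str]}
                (a hypothetical format, e.g. parsed from an OAuth-grant export)
    approved:   approved app name -> scopes approved for that app
    """
    shadow_users: dict[str, set[str]] = defaultdict(set)
    scope_drift: dict[str, set[str]] = defaultdict(set)
    for rec in discovered:
        app, scopes = rec["app"], set(rec["scopes"])
        if app not in approved:
            # Not on the approved list: shadow AI. Track who connected it.
            shadow_users[app].add(rec["user"])
        else:
            # Approved app, but holding scopes nobody signed off on.
            extra = scopes - approved[app]
            if extra:
                scope_drift[app] |= extra
    return {
        "shadow": {app: len(users) for app, users in shadow_users.items()},
        "scope_drift": {app: sorted(extra) for app, extra in scope_drift.items()},
    }


# Hypothetical example data.
discovered = [
    {"app": "SummarizerX", "user": "alice", "scopes": ["chat.read"]},
    {"app": "SummarizerX", "user": "bob", "scopes": ["chat.read"]},
    {"app": "ApprovedNotes", "user": "alice", "scopes": ["docs.read", "mail.send"]},
]
approved = {"ApprovedNotes": {"docs.read"}}
print(reconcile(discovered, approved))
# {'shadow': {'SummarizerX': 2}, 'scope_drift': {'ApprovedNotes': ['mail.send']}}
```

Scope drift is checked only for approved apps, since anything unapproved is already flagged as shadow AI regardless of its permissions.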

Further complicating matters is the fragmented ownership of AI initiatives across departments. Marketing might deploy an AI content generator while engineering tests a coding assistant, each with different security protocols, or none at all. This disjointed approach, combined with the opacity of how AI tools handle data (the so-called “black box” problem), undermines accountability. Reported incidents of proprietary data surfacing in public AI outputs are stark warnings of the need for robust oversight.

Practical Steps to Build Strong AI Governance in SaaS

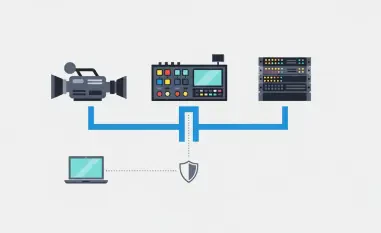

For security leaders, establishing AI governance is achievable through targeted strategies that prioritize safety without stifling progress. Begin with a thorough inventory of all AI tools and features within the SaaS stack, documenting their purpose, users, and data access. This audit, though time-consuming, lays the groundwork for understanding the full scope of AI presence, including hidden or embedded functionalities.
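The inventory step can be sketched as one record per AI tool or embedded feature, plus a check that flags the gaps an audit is meant to uncover. This is a minimal sketch under assumptions: the `AIAsset` fields, the `SENSITIVE` data classifications, and the `audit_gaps` function are hypothetical and would need to match an organization’s own data-classification scheme and approval process.

```python
from dataclasses import dataclass, field

# Hypothetical data classifications treated as sensitive; adapt to your own scheme.
SENSITIVE = {"customer_pii", "financial", "health", "source_code"}


@dataclass
class AIAsset:
    """One AI tool or embedded AI feature found in the SaaS stack (hypothetical schema)."""
    name: str                                   # e.g. "meeting transcription"
    host_app: str                               # SaaS product the feature lives in
    purpose: str = ""                           # documented purpose; "" means undocumented
    owner: str = ""                             # accountable team or person; "" means unowned
    users: set[str] = field(default_factory=set)
    data_access: set[str] = field(default_factory=set)
    approved: bool = False


def audit_gaps(inventory: list[AIAsset]) -> dict[str, list[str]]:
    """Group asset labels by the governance gap each one exhibits."""
    gaps: dict[str, list[str]] = {"undocumented": [], "unowned": [], "unapproved_sensitive": []}
    for asset in inventory:
        label = f"{asset.host_app}:{asset.name}"
        if not asset.purpose:
            gaps["undocumented"].append(label)
        if not asset.owner:
            gaps["unowned"].append(label)
        # Unapproved features touching sensitive data are the highest-priority findings.
        if not asset.approved and asset.data_access & SENSITIVE:
            gaps["unapproved_sensitive"].append(label)
    return gaps


# Hypothetical example inventory.
inventory = [
    AIAsset("chat summaries", "MessagingApp", purpose="summarize threads",
            owner="IT", users={"alice", "bob"}, data_access={"internal_chat"}, approved=True),
    AIAsset("transcription", "VideoApp", users={"carol"}, data_access={"customer_pii"}),
]
print(audit_gaps(inventory))
# {'undocumented': ['VideoApp:transcription'], 'unowned': ['VideoApp:transcription'], 'unapproved_sensitive': ['VideoApp:transcription']}
```

Recording purpose and owner as explicit fields means an empty value is itself a finding, which turns the audit from a one-off spreadsheet into a check that can be rerun as new AI features appear.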

Next, develop and communicate clear AI usage policies that specify permitted applications and prohibited actions, such as entering customer data into unvetted tools. Enforce the principle of least privilege so that each AI feature can reach only the data it needs, monitor that access, conduct regular risk assessments to catch emerging threats, and foster collaboration across IT, legal, and business units. Together, these measures let governance evolve with the technology, so organizations can capture AI’s benefits while limiting their exposure to its dangers.
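A usage policy and a least-privilege review can likewise be expressed as data plus two small checks. In this sketch, the `ToolPolicy` structure, the tool and scope names, and the rule that unknown or unvetted tools may receive only public data are all hypothetical assumptions, chosen to mirror the example prohibition of entering customer data into unvetted tools; a real policy would come from the organization’s own legal and security review.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ToolPolicy:
    """Hypothetical per-tool policy: vetting status, permitted data, and needed scopes."""
    vetted: bool
    allowed_data: frozenset[str]
    needed_scopes: frozenset[str]


# Hypothetical policy table; tool and scope names are illustrative only.
POLICIES = {
    "writing-assistant": ToolPolicy(
        vetted=True,
        allowed_data=frozenset({"public", "internal"}),
        needed_scopes=frozenset({"docs.read"}),
    ),
}


def check_request(tool: str, data_classes: set[str]) -> tuple[bool, str]:
    """Decide whether data of the given classes may be sent to an AI tool."""
    policy = POLICIES.get(tool)
    if policy is None or not policy.vetted:
        # Assumed rule: unknown or unvetted tools may receive public data only.
        if data_classes - {"public"}:
            return False, "unvetted tool: only public data permitted"
        return True, "allowed (public data only)"
    disallowed = data_classes - policy.allowed_data
    if disallowed:
        return False, f"not permitted for {tool}: {sorted(disallowed)}"
    return True, "allowed"


def excess_scopes(tool: str, granted: set[str]) -> set[str]:
    """Least privilege: return granted scopes beyond what the policy says the tool needs."""
    policy = POLICIES.get(tool)
    needed = policy.needed_scopes if policy else frozenset()
    return set(granted) - needed


print(check_request("writing-assistant", {"internal"}))    # (True, 'allowed')
print(check_request("new-ai-plugin", {"customer_pii"}))    # (False, 'unvetted tool: only public data permitted')
print(excess_scopes("writing-assistant", {"docs.read", "mail.read"}))  # {'mail.read'}
```

Keeping the policy as data rather than hard-coded logic makes it easier for IT, legal, and business owners to review and update it together as tools are vetted or retired.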

Reflecting on the Path Forward for AI Governance

Integrating AI into SaaS environments reveals both its transformative potential and its inherent risks. Security leaders who tackle the challenge head-on find that governance is not merely a defensive measure but a strategic enabler, allowing innovation to flourish within safe boundaries. The lesson is clear: ignoring AI’s spread is not an option, and proactive management is the only sustainable path.

Moving ahead, the focus shifts toward refining these governance frameworks, ensuring they adapt to new AI advancements and regulatory shifts. Embracing automated tools to track and manage AI usage emerges as a practical next step, reducing manual burdens on security teams. By prioritizing continuous improvement and cross-departmental alignment, organizations position themselves to navigate the AI landscape with confidence, turning potential vulnerabilities into strengths for the long haul.