Malik Haidar, a seasoned cybersecurity expert with a deep understanding of the threats and attackers that target multinational corporations, shares his insights on AI security. With expertise spanning analytics, intelligence, and a business-focused approach to cybersecurity strategy, Malik discusses the nuances of deploying AI systems and the importance of thorough security measures.

What are some critical questions organizations often overlook before deploying an AI system?

Organizations frequently miss foundational questions: where the AI model will be deployed, what types of input it will process, and what business risks it introduces. Without considering these aspects up front, an organization loses much of its understanding of how AI integrates into the business, which affects both efficiency and security.

Why is it important to understand where the AI model is deployed and what kind of inputs it will process?

Understanding the deployment context and input types is crucial because they determine how the AI will interact with its environment and users. Variations in input can open up vulnerabilities, and knowing these details up front helps mitigate risk and allows for tailored security solutions.

How do “model cards” contribute to understanding AI models, and what are their limitations?

Model cards provide some insight into an AI model’s capabilities and limitations, offering a snapshot of its features. However, the lack of standardization in measurements across the industry means they can’t always be relied upon for comprehensive security assessments.

Can you explain the difference between safety and security in the context of AI models?

Safety focuses on preventing harm or failures within the intended operation of AI systems, while security involves protecting these systems from external threats and malicious actions that exploit their capabilities or result in unintended operations.

What does “red teaming” mean in the context of AI, and why is it more than just internal QA or prompt testing?

Red teaming in AI involves a comprehensive analysis that goes beyond basic QA or prompt testing. It’s about engaging with the AI system’s technical and non-technical vulnerabilities through adversarial approaches, exposing how systems can be compromised beyond internal assessments.

How does red teaming differ from traditional cybersecurity assessments?

Traditional cybersecurity assessments often focus on known vulnerabilities and compliance checks, while red teaming simulates adversarial attacks that test both technical infrastructure and human factors, evaluating a much broader attack surface.

Why is a deep understanding of the AI system crucial for effective red teaming?

A thorough understanding of the AI system is vital as today’s models are complex and can handle multiple types of inputs that potentially introduce vulnerabilities. Recognizing these intricacies allows for a more targeted and effective red teaming process, ensuring vulnerabilities are identified and addressed.

Can you give examples of unique attacks such as the “Stop and Roll” or “Red Queen Attack” on AI models?

Attacks like “Stop and Roll” involve bypassing security through prompt interruption, while the “Red Queen Attack” uses multi-turn role-play to conceal malicious intent. These highlight the vulnerabilities in AI’s communication processes and the subtlety with which these systems can be exploited.

How do attackers exploit differences in input tokens, like uppercase versus lowercase or Unicode versus non-Unicode characters?

Attackers take advantage of the way large language models process inputs by using variations in tokens such as uppercase versus lowercase or Unicode versus non-Unicode characters. Text that looks the same to a human reader can be split into different tokens, so these seemingly minor differences can lead to unpredictable behaviors and security breaches.
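One common mitigation is to canonicalize text before any filter inspects it, so that case and compatibility variants of the same phrase compare equal. The following is a minimal sketch using only Python's standard library; the `BLOCKED_PHRASES` set and the `is_blocked` helper are hypothetical names chosen for illustration, not part of any tool mentioned in this interview. Note that NFKC normalization does not fold look-alike letters from other scripts (for example, Cyrillic characters that resemble Latin ones), so this covers only part of the problem.

```python
import unicodedata

# Hypothetical deny-list, used only to illustrate the idea.
BLOCKED_PHRASES = {"ignore previous instructions"}


def canonicalize(text: str) -> str:
    """Return a case-folded, NFKC-normalized copy of the text."""
    # NFKC maps compatibility characters (such as fullwidth letters)
    # to their ordinary equivalents.
    normalized = unicodedata.normalize("NFKC", text)
    # casefold() is a stronger form of lower() meant for caseless matching.
    return normalized.casefold()


def is_blocked(text: str) -> bool:
    """Check the canonical form of the text against the deny-list."""
    canonical = canonicalize(text)
    return any(phrase in canonical for phrase in BLOCKED_PHRASES)
```

A filter that compared raw strings would miss an input written in fullwidth capitals, whereas the canonical form of that input matches the deny-list entry.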

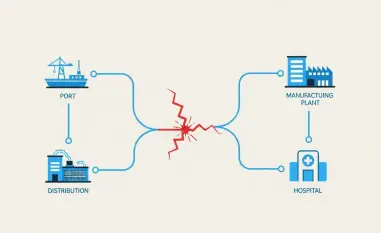

What steps can organizations take to safeguard and create robust guardrails for AI systems?

To safeguard AI systems, organizations should implement layered security strategies, regular system audits, and continuous monitoring. Establishing robust guardrails involves understanding the system’s environments and maintaining adaptability to emerging threats.
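To make the idea of layering concrete, here is a minimal sketch in which independent checks run before and after the model call and every decision is recorded for later audit. All names here (`guarded_call`, `max_length_check`, `banned_term_check`, the `audit_log` list) are assumptions made for illustration; a production system would use far more capable checks and a real logging backend.

```python
from typing import Callable, List, Optional, Tuple

# A check returns a rejection reason, or None if the text passes.
Check = Callable[[str], Optional[str]]


def max_length_check(limit: int) -> Check:
    def check(text: str) -> Optional[str]:
        if len(text) > limit:
            return f"text longer than {limit} characters"
        return None
    return check


def banned_term_check(terms: List[str]) -> Check:
    lowered = [term.lower() for term in terms]

    def check(text: str) -> Optional[str]:
        haystack = text.lower()
        for term in lowered:
            if term in haystack:
                return f"contains banned term: {term}"
        return None
    return check


def guarded_call(
    prompt: str,
    model: Callable[[str], str],
    input_checks: List[Check],
    output_checks: List[Check],
    audit_log: List[Tuple[str, str]],
) -> str:
    """Run input checks, call the model, then run output checks."""
    for check in input_checks:
        reason = check(prompt)
        if reason:
            audit_log.append(("input_blocked", reason))
            return "Request blocked."
    response = model(prompt)
    for check in output_checks:
        reason = check(response)
        if reason:
            audit_log.append(("output_blocked", reason))
            return "Response withheld."
    audit_log.append(("allowed", ""))
    return response
```

Because each layer is a plain function, new checks can be added as threats evolve without changing the calling code, and the audit log gives monitoring something to work from.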

What are the pros and cons of building in-house AI security solutions versus buying existing tools?

Building in-house AI security solutions allows for customization to specific needs but can be resource-intensive. Buying existing tools offers quicker implementation and comprehensive support but might lack the precise alignment with unique organizational requirements.

How can frameworks from organizations like Microsoft, OWASP, and NIST aid in evaluating AI security solutions?

Frameworks from entities like Microsoft, OWASP, and NIST provide structured guidelines for assessing and benchmarking AI security solutions. They help organizations identify critical vulnerabilities and apply best practices for risk mitigation.

What open-source or paid platforms are available for AI security, and what are their main features?

Open-source platforms like Promptfoo and Llama Guard provide frameworks for evaluating AI model safety, while paid platforms such as Lakera and Robust Intelligence offer advanced, real-time, content-aware security features aimed at safeguarding AI applications more comprehensively.

Why is it important to log metrics such as temperature, top-p, and token length when deploying AI systems?

Logging parameters like temperature, top-p, and token length is crucial because they record the conditions under which each output was generated. That record helps teams trace operational changes and debug distributed AI pipelines, ensuring that deviations from expected behavior are promptly identified and addressed.
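As a rough illustration, each generation request could emit one structured log line carrying these values. The sketch below uses only Python's standard library; the field names, the `llm_requests` logger name, and the `log_generation` helper are assumptions for the example rather than a prescribed schema.

```python
import json
import logging
import time

# Logger name is illustrative; handlers and levels are left to the application.
logger = logging.getLogger("llm_requests")


def build_log_record(request_id: str, temperature: float, top_p: float,
                     prompt_tokens: int, completion_tokens: int) -> dict:
    """Collect the sampling parameters and token counts for one request."""
    return {
        "request_id": request_id,
        "timestamp": time.time(),
        "temperature": temperature,
        "top_p": top_p,
        "prompt_tokens": prompt_tokens,
        "completion_tokens": completion_tokens,
        "total_tokens": prompt_tokens + completion_tokens,
    }


def log_generation(request_id: str, temperature: float, top_p: float,
                   prompt_tokens: int, completion_tokens: int) -> dict:
    """Emit the record as one JSON line and return it to the caller."""
    record = build_log_record(request_id, temperature, top_p,
                              prompt_tokens, completion_tokens)
    logger.info(json.dumps(record, sort_keys=True))
    return record
```

Emitting one JSON object per request keeps the logs easy to query later, for example to find every response generated at an unusually high temperature or with an unexpectedly long output.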

How does red teaming help organizations understand the behavior of AI systems?

Red teaming forces organizations to explore AI systems deeply, simulating potential attacks and thereby revealing how the systems react under pressure. This understanding helps in defining clear security measures and predicting behavior in real-world scenarios.

What mindset should organizations adopt regarding AI security?

AI security should be seen as an ongoing responsibility rather than a one-off task. Organizations need to embrace a proactive mindset, focusing on understanding potential risks and adapting to evolving threats, ensuring a dynamic approach to security.

How can red teaming clarify the outcomes organizations want to achieve with AI systems?

Red teaming helps organizations explicitly define both desired and undesired outcomes. By exposing vulnerabilities and forcing teams to consider how the system could fail, it allows them to adjust AI functionality so that it aligns with organizational goals.

What future topics can we expect to be covered in this series about securing generative AI?

Upcoming discussions will delve deeper into compliance and technical strategies for securing AI, how regulation influences security practices, and new methods to enhance the resilience and integrity of generative AI systems against advanced attacks.