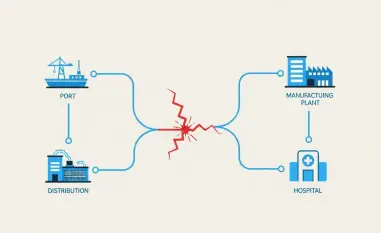

A significant disparity is emerging in AI adoption: the sectors that stand to gain the most from artificial intelligence are, paradoxically, the slowest to embrace it. For organizations in highly regulated fields such as banking, healthcare, government, and aerospace, the promise of AI-driven operational efficiency and competitive advantage is often overshadowed by a formidable barrier: compliance. The core of the issue lies not in a lack of willingness to innovate but in a fundamental architectural mismatch between modern AI platforms and the stringent security and data handling requirements these industries must uphold. While a recent survey indicates that roughly seven in ten organizations have integrated AI into their support operations, a closer look reveals a telling divide. An overwhelming 92% of technology companies have deployed AI for customer support, whereas only 58% of their counterparts in regulated sectors have managed to do the same, a gap of 34 percentage points. This gap highlights a critical reality: the standard deployment models for AI, predominantly built for agility and scale in less restrictive environments, are often incompatible with the non-negotiable compliance mandates that govern sensitive industries.

1. How Security and Compliance Hinder AI Implementation

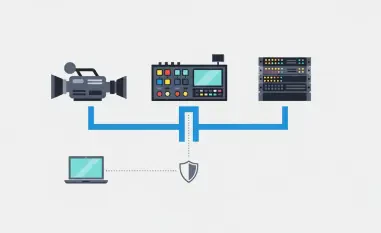

Many AI platform vendors attempt to bridge the adoption gap by emphasizing their comprehensive security features, yet this approach often overlooks the foundational architectural problem that truly concerns regulated organizations. The standard deployment model for most AI-enabled platforms involves a distributed architecture where, for instance, a company’s primary help desk software runs in a public cloud environment like AWS or Azure, while the associated AI capabilities operate on a completely separate part of that cloud or even on a different cloud provider’s infrastructure. In this common setup, data frequently flows between these distinct services in an unencrypted state. While the robust cybersecurity posture of major public cloud providers is sufficient for a wide range of businesses, this model presents an insurmountable compliance and security hurdle for organizations bound by strict data sovereignty laws or internal governance policies. The unmonitored movement of unencrypted data across public cloud services is a direct violation of the principles that underpin regulatory frameworks in sectors handling sensitive financial, health, or government information, making adoption an unacceptable risk.

2. The Critical Role of IT Security Teams in Purchasing Decisions

The divergence in AI adoption rates between technology firms and regulated entities is a direct reflection of their fundamentally different approaches to risk management and security. Technology companies frequently adopt a reactive security posture, prioritizing rapid deployment of cutting-edge tools to gain a market edge, and then addressing any emerging security vulnerabilities as they are identified. They rely heavily on the inherent security infrastructure of their public cloud providers. In stark contrast, organizations in regulated industries operate under a proactive and far more rigorous security mandate. For them, meeting strict compliance requirements is a prerequisite for any new system going live, not an afterthought. This operational difference significantly influences the procurement process. Within regulated industries, a majority (56%) of organizations classify AI security as a critical purchasing factor, and in an overwhelming 78% of cases, IT or security teams are integral to the final decision-making process, effectively granting them veto power over any potential platform acquisition that fails to meet their exacting standards.

This level of scrutiny from security teams severely limits the available options for AI-enabled platforms. When these teams conduct their evaluations, they are typically confronted with a difficult choice: either select a modern, cloud-native solution that offers advanced AI features but implicitly requires unencrypted data to traverse external systems, or opt for an on-premises solution that may offer greater control but comes with prohibitively expensive and often limited AI capabilities. Consequently, more than half (53%) of organizations currently in the pilot or evaluation phase of an AI deployment are focusing their efforts primarily on defining exhaustive security and compliance requirements before they even consider committing to a vendor. They proceed with deliberate caution because the consequences of a poor decision are severe, ranging from substantial regulatory fines and operational shutdowns to irreparable reputational damage. While a tech company might ask, “Will this improve our operations?” a regulated organization must add a critical follow-up: “Can we use this and remain compliant?” If the answer to the second question is anything but a definitive yes, the purchase is invariably declined.

3. Five Key Considerations for Evaluating AI Platforms in Regulated Fields

For organizations navigating the complexities of AI adoption within a regulated framework, a more discerning evaluation process is essential. It is crucial to move beyond vendor marketing claims and scrutinize the underlying architecture of any potential AI-enabled platform. One of the first criteria to consider is the hosting environment of the AI foundation models. Does the vendor operate its AI services within its own dedicated, private data centers, or does it rely on services hosted in the public cloud, where data control and residency become more ambiguous? Another vital consideration is deployment flexibility. A platform that can be deployed within an organization’s own virtual private cloud (VPC) or on-premises data center offers far greater security and control than one restricted solely to the vendor’s public cloud infrastructure. This flexibility allows the organization to extend its existing security perimeter to encompass the AI services, ensuring that data processing adheres to internal governance policies. These initial architectural questions set the stage for a deeper dive into the platform’s capabilities and its alignment with specific compliance needs, forming the basis for a more informed and secure technology investment.

Beyond the physical and virtual location of the AI services, organizations must assess the degree of control they have over the AI models themselves and the data they process. A key question is whether the platform supports AI model selection. The ability to integrate an AI provider or model of the organization’s choice, rather than being locked into the vendor’s preselected foundation model, provides critical flexibility to meet specific security and performance requirements. Furthermore, it is imperative to verify that the platform can meet all data sovereignty mandates. This means confirming that all data, both at rest and in transit, will remain within the required geofenced area or security perimeter, preventing unauthorized cross-border data flows. Finally, a thorough evaluation of the platform’s governance and visibility capabilities is non-negotiable. Organizations must confirm that they have access to comprehensive tools that provide a clear view of all data processing activities and can maintain immutable audit trails. These features are essential for demonstrating compliance to regulators and for conducting internal security audits, ensuring that the organization can always account for what is happening with its data.
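For teams that want to record these five considerations in a repeatable way, the evaluation can be sketched as a simple pass/fail checklist. The Python below is a minimal illustration rather than a prescribed method: the class name, field names, and the rule that any single failed criterion blocks approval are all assumptions made for the example, loosely mirroring the veto that security teams hold in regulated procurement.

```python
from dataclasses import dataclass, fields
from typing import List


@dataclass(frozen=True)
class PlatformAssessment:
    """Answers to the five evaluation questions (all names are hypothetical)."""
    private_model_hosting: bool        # AI models hosted in dedicated, private infrastructure
    deployable_in_own_perimeter: bool  # can run in the organization's VPC or on-premises
    model_choice_supported: bool       # organization can select its own AI provider or model
    data_sovereignty_met: bool         # data at rest and in transit stays inside the required boundary
    audit_and_visibility: bool         # processing visibility plus immutable audit trails


def failed_criteria(assessment: PlatformAssessment) -> List[str]:
    """Return the names of the criteria the platform does not satisfy."""
    return [f.name for f in fields(assessment) if not getattr(assessment, f.name)]


def is_approved(assessment: PlatformAssessment) -> bool:
    """Assumed rule: approval requires every criterion to pass."""
    return not failed_criteria(assessment)


# Example: a cloud-only vendor that meets the control and governance criteria
# but cannot be hosted privately or deployed inside the buyer's perimeter.
cloud_only = PlatformAssessment(
    private_model_hosting=False,
    deployable_in_own_perimeter=False,
    model_choice_supported=True,
    data_sovereignty_met=True,
    audit_and_visibility=True,
)
print(is_approved(cloud_only))
print(failed_criteria(cloud_only))
```

In practice each answer would rest on documented evidence from the vendor rather than a bare boolean, and an organization might weight the criteria differently; the strict all-or-nothing rule is only one possible policy.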

4. The Future of AI Adoption: An Architectural Imperative

The adoption gap that separates technology companies from regulated industries is not a permanent fixture but a market signal that points to a significant, unaddressed need. It reflects a period in which vendors have largely developed solutions tailored to one segment of the market, leaving the complex challenges of another unresolved. This pattern is a familiar one in the history of enterprise software: eventually, the latent demand from the neglected market grows large enough that platform providers are compelled to either adapt their offerings or risk being displaced by new competitors who build their solutions with compliance at their core. The organizations that successfully rethink their deployment architecture to support secure, compliant AI implementation are positioned to set the standard for how enterprises deploy AI-enabled tools and platforms going forward. With nearly three-quarters of organizations planning to increase their focus on AI security over the next two years, the market appears poised for this fundamental shift, signaling a new era in which security is no longer a feature but the foundation of AI strategy.