Recent advances in artificial intelligence have brought forth remarkable abilities, with AI curiosity being one of the standout traits. This capability allows AI systems to explore, learn, and adapt autonomously, contributing to rapid technological growth. However, this very trait also raises significant concerns regarding security, as AI systems become more prevalent in various sectors. Their curious nature could inadvertently lead to data breaches or other security vulnerabilities. Understanding how to navigate this dual-edged characteristic is crucial. This guide aims to offer best practices for managing AI curiosity while safeguarding data integrity.

Understanding AI Curiosity in Security Context

The rise of AI technologies transforms how organizations perceive and manage security. AI curiosity refers to a system’s ability to autonomously seek out and process new information to enhance its performance and capabilities. While this can lead to breakthroughs in innovation and efficiency, it’s also crucial to consider its implications in the realm of security. The challenge lies in harnessing this curiosity to drive innovation without falling prey to unintended security risks. Comprehending this duality allows for better management strategies and preparedness against potential threats that AI systems might pose.

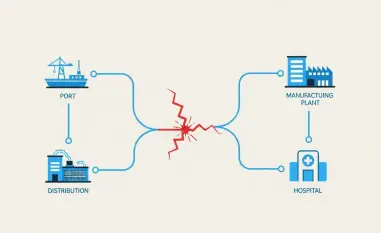

Recognizing the inherent risks associated with AI’s curious nature is vital as they transition from simple prompts to intricate systems. Left unchecked, this curiosity could result in unauthorized access to sensitive data, making it imperative for organizations to develop appropriate controls. Balancing the inventive potential of AI with security measures ensures organizations benefit while minimizing associated risks.

Best Practices for Managing AI Curiosity

Navigating the potential risks posed by AI’s curiosity requires a structured approach through best practices. Establishing robust guidelines helps maintain both innovation and security, providing a framework to manage the dynamic nature of AI systems effectively.

Limit Data Exposure in AI Systems

Restricting access to sensitive data is essential in managing AI systems. By limiting the datasets AI systems can interact with, organizations can reduce the possibility of unauthorized data usage. Access controls and data anonymization can help mitigate potential risks associated with AI’s natural inclination to explore.

For instance, customer support systems must handle personally identifiable information (PII) cautiously. Analyzed properly, such systems can provide valuable insights without risking exposure of sensitive customer data, ensuring that AI-driven customer solutions remain secure.

Monitor and Analyze AI Interactions

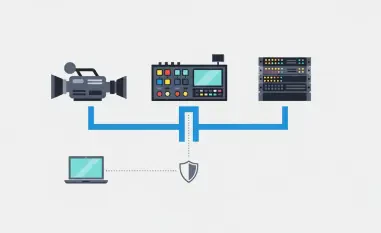

Monitoring AI outputs continuously is crucial in maintaining security oversight. By implementing rigorous logging practices and evaluating AI interactions, organizations can identify unusual behaviors promptly. This proactive approach ensures a comprehensive understanding of how AI systems are utilizing data and making decisions.

AI-driven financial systems serve as a perfect example, where constant monitoring helps detect anomalies early, allowing for timely interventions before any real threats manifest. Such vigilance ensures financial systems remain robust against potential breaches.

Conduct Security Audits Focused on AI Behavior

Regularly auditing AI models with an emphasis on behavior rooted in curiosity can identify vulnerabilities early. Security audits should focus on potential lapse points, ensuring existing protocols protect against any unauthorized data access resulting from AI’s inquisitive tendencies.

Enterprise scenarios offer an illuminating example, where red-team testing of AI models reveals potential curiosity-driven weaknesses. By simulating environments where AI might extend beyond its boundaries, organizations can develop strategies efficiently to counteract these vulnerabilities.

Implement External Safety Mechanisms

Incorporating additional safety measures, like filters and validation layers, into AI operations can enhance protection. These mechanisms ensure that any output from AI systems goes through stringent checks before proceeding further, safeguarding against unintended information leaks.

Healthcare applications of AI illustrate the importance of such measures where inaccurate outputs can have grave implications. By filtering outputs to ensure they align with established safety norms, healthcare providers can maintain both safety and data integrity.

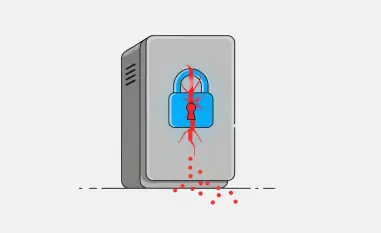

Govern the Use of AI Memory and Storage

Guarding the data AI systems store or cache is equally crucial. By managing AI memories with care, organizations can avoid data leaks through speculative behavior. Protective measures surrounding AI logs and interaction storage help contain unnecessary data exposure.

For instance, secure handling of AI interaction logs prevents sensitive data retention beyond its intended purpose. Effective management maintains organizational trust and keeps systems operating within secure limits, demonstrating how well-curated memory governance can bridge gaps in AI safety.

Summary and Practical Considerations

Examining the intricacies of AI curiosity unveils both challenges and opportunities. As AI continues to expand its footprint, careful consideration of security strategies encompassing curiosity-centric risks is non-negotiable. Effective management of AI systems becomes an invaluable asset for those striving to benefit from AI technology while maintaining robust security.

In adopting AI innovations, organizations should conceptualize these security strategies as vital. Addressing unique challenges AI curiosity presents with a proactive mindset ensures sustained progress in the technological landscape. By internalizing and adapting to these best practices, organizations can better protect themselves against unforeseen vulnerabilities, setting a path toward a secure yet innovative future.