The relentless pace of cloud adoption has fundamentally altered the security landscape, rendering traditional vulnerability management practices obsolete and leaving many organizations dangerously exposed. In today’s dynamic cloud environments, where infrastructure can be deployed and dismantled in minutes, relying on periodic vulnerability scans is akin to trying to photograph a speeding train with a pinhole camera; the resulting picture is incomplete and misses the most critical moments of risk. Vulnerability management is no longer a simple cycle of identifying and patching known weaknesses. It has evolved into a complex, continuous process that must account for ephemeral workloads, managed services with shared responsibilities, and the intricate web of permissions and network paths that define cloud architecture. Simply knowing that a Common Vulnerabilities and Exposures (CVE) entry applies to a software package is insufficient; the crucial question is whether that vulnerability represents a clear and present danger to the business, a question that can only be answered by understanding its full context within the live environment.

1. Comprehensive Asset Discovery and Cloud Coverage

A foundational principle of cybersecurity is that an organization cannot secure what it cannot see, a challenge that is exponentially magnified in sprawling, multi-cloud ecosystems. Therefore, a modern vulnerability management vendor must provide comprehensive and automatic asset discovery across all cloud environments, including virtual machines, containers, Kubernetes clusters, serverless functions, and managed services. This visibility cannot be a one-time snapshot; it must be continuous to keep pace with ephemeral workloads that may exist for only a few minutes—long enough to be exploited but too short to be caught by a weekly scan. The platform must operate seamlessly across major providers like AWS, Azure, and GCP, consolidating data into a single, unified view to eliminate the visibility gaps and operational friction caused by managing separate tools for each cloud. A failure to identify even a small fraction of the asset inventory creates a permanent blind spot, giving attackers an undefended entry point into the network.
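The gap between periodic scanning and ephemeral workloads can be made concrete with a small sketch. The workload names, lifetimes, and the one-week observation window below are illustrative assumptions, not measurements from any real environment; the function simply checks whether a workload was alive at any scan instant.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workload:
    name: str
    start_min: int  # minutes since the start of the observation window
    end_min: int    # exclusive end of the workload's lifetime


def missed_by_periodic_scan(workloads, scan_interval_min, window_min):
    """Return names of workloads that were never alive at any scan instant."""
    scan_times = range(0, window_min, scan_interval_min)
    missed = []
    for w in workloads:
        seen = any(w.start_min <= t < w.end_min for t in scan_times)
        if not seen:
            missed.append(w.name)
    return missed


WEEK = 7 * 24 * 60  # one week, in minutes

# Hypothetical workloads used only for illustration.
workloads = [
    Workload("long-lived-vm", 0, WEEK),
    Workload("batch-job", 300, 312),         # alive for 12 minutes
    Workload("autoscaled-pod", 5000, 5045),  # alive for 45 minutes
]

weekly_missed = missed_by_periodic_scan(workloads, scan_interval_min=WEEK, window_min=WEEK)
frequent_missed = missed_by_periodic_scan(workloads, scan_interval_min=5, window_min=WEEK)
print("missed by weekly scan:", weekly_missed)
print("missed by 5-minute discovery:", frequent_missed)
```

Under these assumptions, a single weekly scan only ever sees the long-lived VM, while a discovery loop that polls every five minutes observes all three workloads. Real continuous discovery is usually event-driven rather than polled, so this sketch understates how quickly short-lived assets can be picked up.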

To achieve this level of comprehensive visibility, modern solutions increasingly favor an agentless, cloud-native approach that connects directly to cloud provider APIs. This method allows for rapid, wide-ranging coverage across container registries, infrastructure-as-code (IaC) templates, and running workloads without the deployment and maintenance overhead of traditional agents. While agentless scanning provides essential breadth, some organizations may require deeper, process-level telemetry. In these cases, the platform should offer the flexibility to complement its agentless discovery with optional, lightweight sensors, such as those based on eBPF, to capture real-time workload behavior and enable runtime blocking capabilities. Furthermore, effective vulnerability management must begin before deployment. Integrating scanning capabilities directly into the CI/CD pipeline allows for the analysis of container images, IaC templates like Terraform and CloudFormation, and the software bill of materials (SBOM) to catch vulnerabilities and misconfigurations before they ever reach a production environment. This “shift-left” strategy is critical for preventing risks at their source.

2. Advanced Cloud-Native Detection Capabilities

The depth and sophistication of a vendor’s detection capabilities are paramount in identifying the full spectrum of cloud risks. A competent platform must go beyond basic operating system and package vulnerability detection to include specialized scanning for container images and registries, which are central to modern application development. It is also crucial for the tool to analyze Infrastructure as Code (IaC) templates to identify misconfigurations that could create security weaknesses in the deployed environment. A critical component of this process is the ability to produce or ingest a Software Bill of Materials (SBOM) in standard formats like SPDX or CycloneDX. An SBOM provides a complete inventory of all components within an application, including open-source libraries, base images, and other dependencies, which is essential for tracking supply chain risks and responding quickly when a new vulnerability is discovered in a widely used component. Without these multifaceted detection methods, an organization is left with an incomplete picture of its true risk posture.
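As a rough illustration of why an SBOM speeds up response to a newly disclosed vulnerability, the sketch below searches a minimal CycloneDX-style JSON document for a named component at affected versions. The SBOM content and the set of affected versions are assumptions made for the example, and the helper `find_affected` is hypothetical; it inspects only top-level `components`, whereas real SBOMs may nest components and would normally be matched using proper version-range logic.

```python
import json

# A minimal, hand-written CycloneDX-style document (illustrative only).
SBOM_JSON = """
{
  "bomFormat": "CycloneDX",
  "specVersion": "1.5",
  "components": [
    {"type": "library", "name": "log4j-core", "version": "2.14.1",
     "purl": "pkg:maven/org.apache.logging.log4j/log4j-core@2.14.1"},
    {"type": "library", "name": "jackson-databind", "version": "2.15.2",
     "purl": "pkg:maven/com.fasterxml.jackson.core/jackson-databind@2.15.2"}
  ]
}
"""


def find_affected(sbom, package_name, affected_versions):
    """Return identifiers of top-level components matching name and version."""
    hits = []
    for component in sbom.get("components", []):
        if (component.get("name") == package_name
                and component.get("version") in affected_versions):
            # Prefer the package URL when present, else fall back to the name.
            hits.append(component.get("purl", component["name"]))
    return hits


sbom = json.loads(SBOM_JSON)
# The affected-version set is an input the caller supplies from an advisory.
print(find_affected(sbom, "log4j-core", {"2.14.0", "2.14.1"}))
```

With an inventory like this stored per image or per service, answering "where do we run the affected component?" becomes a lookup rather than a rescan.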

The speed at which a platform can incorporate new threat intelligence and apply it to an environment is a key differentiator. Modern vendors should be able to process new CVEs from the National Vulnerability Database (NVD) within hours of their disclosure, not days or weeks. However, simply flagging a new CVE is no longer sufficient. The real value lies in immediately cross-referencing this information against multiple signals to determine its real-world threat level. This includes integrating with exploit likelihood data, such as the Exploit Prediction Scoring System (EPSS), and known-exploited vulnerability catalogs, like the one maintained by CISA. By combining vulnerability data with runtime context and external threat intelligence, the system can deliver timely, signal-rich triage that separates urgent, actively exploited threats from theoretical risks. This continuous reassessment ensures that the organization’s security posture is constantly updated in response to both environmental changes and the evolving threat landscape.
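The cross-referencing described above can be sketched as a simple triage function. This is a minimal illustration, not any vendor's algorithm: the `Finding` fields, the 0.10 EPSS threshold, the bucket names, and the placeholder CVE identifiers are all assumptions. In practice the EPSS probability and known-exploited status would be fetched from their published feeds rather than hard-coded.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    cve_id: str               # placeholder identifiers below, not real CVEs
    epss: float               # EPSS probability of exploitation, in [0, 1]
    in_kev: bool              # listed in a known-exploited catalog such as CISA KEV
    loaded_at_runtime: bool   # vulnerable package observed running


def triage(finding: Finding, epss_threshold: float = 0.10) -> str:
    """Bucket a finding; the threshold and bucket names are illustrative."""
    if finding.in_kev and finding.loaded_at_runtime:
        return "urgent"
    if finding.in_kev or finding.epss >= epss_threshold:
        return "high"
    return "backlog"


findings = [
    Finding("CVE-0000-0001", epss=0.92, in_kev=True, loaded_at_runtime=True),
    Finding("CVE-0000-0002", epss=0.40, in_kev=False, loaded_at_runtime=False),
    Finding("CVE-0000-0003", epss=0.01, in_kev=False, loaded_at_runtime=True),
]

for f in findings:
    print(f.cve_id, triage(f))
```

Because the inputs change over time (EPSS scores are republished and catalogs grow), a function like this would be re-run whenever either the threat data or the environment changes.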

3. Contextual and Accurate Vulnerability Prioritization

For years, security teams have been overwhelmed by a deluge of alerts, a phenomenon often referred to as “CVE overload.” Traditional vulnerability management tools, which relied heavily on the Common Vulnerability Scoring System (CVSS), contributed significantly to this problem by flagging thousands of issues without providing a clear path for prioritization. With tens of thousands of new vulnerabilities disclosed annually, it has become evident that CVSS scores alone are poor predictors of actual exploitation. A high CVSS score on an asset that is isolated from the network and has no access to sensitive data may represent a far lower risk than a medium-severity vulnerability on an internet-facing server with elevated permissions. Consequently, modern solutions must move beyond this outdated model by incorporating a rich set of contextual signals. These signals include the runtime state of a workload, the identity permissions it possesses, its network reachability, and whether it has access to sensitive data stores.

This shift toward contextual prioritization fundamentally transforms how organizations manage risk. By analyzing whether a vulnerable package is actually loaded and running in memory, a platform can distinguish between a latent vulnerability and an active threat. Similarly, understanding the identity permissions of a compromised workload (what it can access and what actions it can perform) reveals its potential blast radius. When this internal context is combined with external threat intelligence, such as EPSS scores estimating the probability of exploitation and the CISA KEV catalog listing CVEs known to be exploited, security teams can finally focus their efforts with precision. This integrated approach can dramatically reduce the number of urgent remediation tickets, with reductions of over 95% often cited, by filtering out the noise and highlighting the small share of vulnerabilities, commonly put at 2-5%, that combine exploitability, reachability, and significant business impact. This allows engineering resources to be directed toward fixing the issues that truly matter.
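One way to picture contextual prioritization is as a CVSS base score adjusted by environmental signals. The sketch below is an assumption-laden illustration: the function name, the weights, and the two example assets are hypothetical and are not drawn from CVSS itself or from any product.

```python
def contextual_score(cvss, internet_facing, elevated_permissions,
                     sensitive_data_access, package_loaded):
    """Scale a CVSS base score by context; all weights are illustrative."""
    if not package_loaded:
        # A package that is never loaded is treated as a latent issue.
        return round(cvss * 0.1, 2)
    multiplier = 0.25  # baseline for a running but fully isolated workload
    if internet_facing:
        multiplier += 0.35
    if elevated_permissions:
        multiplier += 0.20
    if sensitive_data_access:
        multiplier += 0.20
    return round(cvss * multiplier, 2)


# Critical CVSS on an isolated asset with no sensitive access.
isolated = contextual_score(9.8, internet_facing=False, elevated_permissions=False,
                            sensitive_data_access=False, package_loaded=True)

# Medium CVSS on an internet-facing server with elevated permissions.
exposed = contextual_score(5.5, internet_facing=True, elevated_permissions=True,
                           sensitive_data_access=True, package_loaded=True)

print("isolated critical:", isolated)
print("exposed medium:", exposed)
```

With these example weights, the medium-severity finding on the exposed server ranks above the critical finding on the isolated asset, which mirrors the argument made in this section.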

4. Attack Path and Blast Radius Analysis

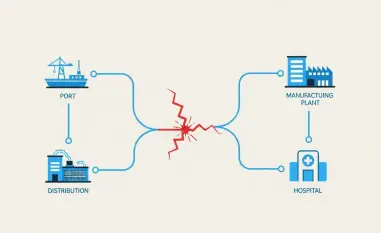

Understanding individual vulnerabilities is important, but seeing how they connect to form potential attack paths provides a much deeper level of security insight. Modern vulnerability management must therefore include attack path analysis, which visualizes how an attacker could move laterally through a cloud environment after an initial compromise. For instance, it can show how an attacker might pivot from a compromised container to other pods by exploiting Kubernetes RBAC permissions or leverage overly permissive IAM roles to gain access to sensitive data in S3 buckets. This analysis is also critical for identifying privilege escalation opportunities, such as when a vulnerable Lambda function with excessive execution roles could be used to gain administrative access across entire cloud accounts. By mapping these potential pathways, security teams can proactively identify and sever the connections that attackers would otherwise exploit.
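Attack path analysis is commonly modeled as a search over a directed graph whose edges represent ways an attacker could move from one resource to another. The following sketch uses a breadth-first search to find the shortest such path. The resource names and edge labels are hypothetical, and real platforms build this graph from cloud configuration, identity, and network data rather than a hand-written list.

```python
from collections import deque


def find_attack_path(edges, source, target):
    """Return the shortest path from source to target as a list, or None."""
    graph = {}
    for src, dst, _reason in edges:
        graph.setdefault(src, []).append(dst)

    queue = deque([[source]])
    visited = {source}
    while queue:
        path = queue.popleft()
        node = path[-1]
        if node == target:
            return path
        for neighbor in graph.get(node, []):
            if neighbor not in visited:
                visited.add(neighbor)
                queue.append(path + [neighbor])
    return None


# Hypothetical environment: (from, to, why the hop is possible).
edges = [
    ("internet", "container:web", "exploitable CVE on exposed service"),
    ("container:web", "pod:worker", "permissive Kubernetes RBAC"),
    ("pod:worker", "iam-role:data-reader", "overly permissive IAM role"),
    ("iam-role:data-reader", "s3:customer-data", "bucket read access"),
    ("internet", "vm:bastion", "open SSH port"),
]

path = find_attack_path(edges, "internet", "s3:customer-data")
print(" -> ".join(path))

# Severing one connection (tightening RBAC) removes the path entirely.
hardened = [e for e in edges if e[2] != "permissive Kubernetes RBAC"]
print(find_attack_path(hardened, "internet", "s3:customer-data"))
```

The second call illustrates the remediation idea from the paragraph above: removing a single edge can break every route to the sensitive resource, even though the original vulnerability on the web container is still present.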

Beyond charting the path, it is equally important to understand the potential consequences of a successful exploit. Visualizing the blast radius helps teams grasp the full scope of potential damage if an attacker gains access to a particular resource. This analysis is especially effective at identifying “toxic combinations,” which occur when a vulnerability is paired with other weaknesses, such as a network misconfiguration or excessive permissions. A medium-severity vulnerability might seem insignificant on its own, but when present on a server that is publicly accessible and has read/write access to a production database, it becomes a critical risk. It is important to note that this internal attack path analysis focuses on the potential damage an attacker can inflict once inside the perimeter. This function serves as a crucial input for a broader exposure management strategy, which then assesses which of these internal risks are reachable from the outside world.
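A toxic combination can be expressed as a rule that escalates severity when a vulnerability coincides with exposure and excessive access. The sketch below encodes only the single example from this section; the field names, the asset records, and the escalation rule are assumptions for illustration.

```python
def effective_severity(asset):
    """Escalate severity when a vulnerability meets exposure and write access."""
    severity = asset["vuln_severity"]
    if severity is None:
        return "none"
    exposed = asset["publicly_accessible"]
    privileged = asset["prod_db_access"] == "read-write"
    if exposed and privileged:
        # Vulnerability + public exposure + production write access.
        return "critical"
    return severity


# Hypothetical assets used only to exercise the rule.
assets = [
    {"name": "public-api", "vuln_severity": "medium",
     "publicly_accessible": True, "prod_db_access": "read-write"},
    {"name": "internal-batch", "vuln_severity": "medium",
     "publicly_accessible": False, "prod_db_access": "read-write"},
    {"name": "static-site", "vuln_severity": None,
     "publicly_accessible": True, "prod_db_access": "none"},
]

for asset in assets:
    print(asset["name"], effective_severity(asset))
```

The same medium-severity finding is escalated on `public-api` but not on `internal-batch`, because only the former combines all three conditions.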

5. Streamlined Remediation and Workflow Integration

Identifying vulnerabilities is only half the battle; the ultimate goal is remediation. The most effective vulnerability management platforms do more than just find problems—they provide clear, actionable guidance that helps development and operations teams fix them efficiently. Remediation advice should be precise, telling developers exactly which package to update, what line of code to change, or which configuration setting to adjust. A key capability is tracing vulnerabilities back to their source, whether in the source code or an infrastructure-as-code template. This enables true root-cause fixes rather than temporary runtime patches that may be overwritten in the next deployment. To further accelerate the process, the platform should be able to infer ownership by correlating cloud resource tags, Git repository metadata, and CI/CD pipeline configurations to automatically route findings to the responsible team. This is then formalized by integrating with ticketing systems like Jira or ServiceNow to create trackable tasks and enforce remediation service-level agreements (SLAs).
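Ownership inference and ticket routing can be sketched as a precedence chain over the signals mentioned above. Everything here is hypothetical: the `owner` tag key, the lookup tables, the SLA day counts, and the ticket fields are illustrative and do not correspond to the Jira or ServiceNow APIs.

```python
# Illustrative remediation SLAs, in days, keyed by severity.
SLA_DAYS = {"critical": 7, "high": 30, "medium": 90}


def infer_owner(finding, repo_owners, pipeline_teams, default_team="security-triage"):
    """Return (team, evidence) using tags, then repo metadata, then pipeline config."""
    tag_owner = finding.get("tags", {}).get("owner")
    if tag_owner:
        return tag_owner, "cloud resource tag"
    repo = finding.get("source_repo")
    if repo in repo_owners:
        return repo_owners[repo], "repository metadata"
    pipeline = finding.get("pipeline")
    if pipeline in pipeline_teams:
        return pipeline_teams[pipeline], "pipeline configuration"
    return default_team, "fallback"


def build_ticket(finding, repo_owners, pipeline_teams):
    """Assemble a generic ticket payload for a downstream ticketing system."""
    team, evidence = infer_owner(finding, repo_owners, pipeline_teams)
    return {
        "summary": f"{finding['severity'].upper()}: update {finding['package']} "
                   f"to {finding['fixed_version']}",
        "assignee_team": team,
        "ownership_evidence": evidence,
        "due_in_days": SLA_DAYS.get(finding["severity"], 180),
    }


repo_owners = {"git.example.com/payments/api": "team-payments"}
pipeline_teams = {"deploy-checkout": "team-checkout"}

finding = {
    "package": "examplelib", "fixed_version": "1.2.4", "severity": "high",
    "tags": {"env": "prod"},  # no owner tag, so repo metadata is used
    "source_repo": "git.example.com/payments/api",
    "pipeline": "deploy-checkout",
}

ticket = build_ticket(finding, repo_owners, pipeline_teams)
print(ticket)
```

Recording which signal produced the assignment makes misrouted tickets easier to correct at the source, for example by adding the missing owner tag.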

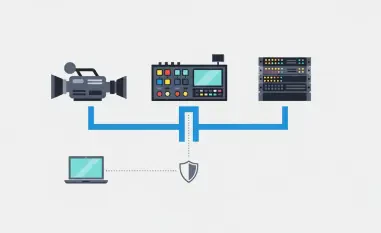

To ensure adoption and reduce friction between security and engineering teams, the vulnerability management tool must fit seamlessly into existing workflows. This means integrating directly with the tools developers use every day. By providing feedback on potential security issues directly within pull requests in code repositories, the platform empowers developers to address problems before they are merged into the main branch. This “DevSecOps-friendly” approach can be strengthened by implementing CI/CD policy enforcement, which acts as a security gate to block unsafe deployments from ever reaching production. Furthermore, the platform should integrate with the broader security ecosystem. Sharing data and context with Security Information and Event Management (SIEM) systems like Splunk and Security Orchestration, Automation, and Response (SOAR) platforms enables centralized alerting and automated response actions. This bidirectional context sharing with other security tools, such as Cloud Security Posture Management (CSPM) and Cloud Infrastructure Entitlement Management (CIEM), creates a unified view that reveals complex risks invisible to siloed solutions.
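A CI/CD security gate usually comes down to evaluating scan results against a policy and returning a non-zero exit code when the policy is violated. The sketch below assumes a simple policy (block on critical or high findings that already have a fix available) and a made-up findings format; neither reflects a specific scanner or pipeline product.

```python
# Illustrative policy: which severities block a deployment.
BLOCKING_SEVERITIES = {"critical", "high"}


def evaluate_gate(findings, blocking=BLOCKING_SEVERITIES):
    """Return (allowed, violations) for a list of scan findings."""
    violations = [
        f for f in findings
        if f["severity"] in blocking and f["fix_available"]
    ]
    return len(violations) == 0, violations


def run_gate(findings):
    """Print violations and return an exit code suitable for a pipeline step."""
    allowed, violations = evaluate_gate(findings)
    for v in violations:
        print(f"BLOCKED: {v['id']} ({v['severity']}) in {v['package']}")
    return 0 if allowed else 1


# Hypothetical scan output for a container image.
scan_results = [
    {"id": "FINDING-1", "package": "examplelib", "severity": "critical", "fix_available": True},
    {"id": "FINDING-2", "package": "otherlib", "severity": "high", "fix_available": False},
    {"id": "FINDING-3", "package": "thirdlib", "severity": "low", "fix_available": True},
]

exit_code = run_gate(scan_results)
# In a real pipeline step, this value would be passed to sys.exit().
print("exit code:", exit_code)
```

Blocking only on findings with an available fix is a design choice that keeps the gate actionable for developers; findings without a fix would still be reported, but routed through the exception or ticketing process instead of failing the build.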

A More Strategic Path Forward

The selection of a vulnerability management vendor is a critical decision point for organizations seeking to secure their cloud operations. The platforms that deliver the most value are those that move beyond legacy scanning and provide a unified, context-aware view of risk. By prioritizing vendors that offer comprehensive asset discovery, advanced detection, and intelligent prioritization, companies can cut through the noise of excessive alerts and focus their limited resources on the vulnerabilities that pose a genuine threat to the business. The integration of attack path analysis and streamlined remediation workflows enables them not only to find security weaknesses but also to fix them quickly and efficiently. A strategic investment in a modern vulnerability management solution is therefore a cornerstone of a resilient and effective cloud security program.