In the dynamic world of cybersecurity, Malik Haidar stands out as a leader in integrating business-savvy strategies into technical frameworks. With a strong background in tackling threats for multinational corporations, Malik’s insights offer a unique perspective on developing a robust cybersecurity architecture. This conversation delves into the intricacies of zero trust architecture, emphasizing the importance of a unified data strategy and efficient resource management.

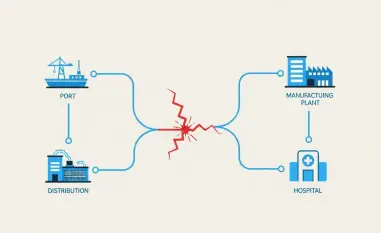

What are the main challenges that Federal agencies face with siloed tools and sprawling data when implementing zero trust architecture?

Federal agencies often struggle to integrate separate tools designed for the different zero trust pillars: identity, device, network, application, and data. This fragmentation leads to inefficiencies as they try to unify these systems without losing sight of their intended security goals. The disparate nature of these tools can create barriers to sharing information across platforms, leaving agencies with a fragmented view of their security landscape.

How important is it for agencies to have a unified visibility and analytics layer across the five pillars of zero trust?

Achieving a comprehensive perspective across all pillars is crucial. A unified analytics layer allows agencies to glean insights from their data as a whole, rather than piecemeal. It simplifies the feedback loop necessary to manage a zero trust environment effectively, ensuring that agencies respond promptly to threats while optimizing their security operations.

What approach should agencies take to avoid the pitfalls of one-size-fits-all solutions in their zero trust strategies?

Agencies need to embrace interoperability and resist the urge to overhaul existing technologies. By adopting open standards for data sharing, like the OpenTelemetry project, they can ensure that different systems communicate effectively. This approach maximizes the value of current investments and helps build a cohesive security architecture that capitalizes on existing tools.
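To make the idea of a shared data format concrete, here is a minimal Python sketch of two tools' events being mapped into one common record shape. It does not use OpenTelemetry itself. The event layouts, field names (`pillar`, `timestamp`, `subject`, `outcome`), and function names are all hypothetical and chosen only for illustration.

```python
from datetime import datetime, timezone

# Hypothetical raw events from two tools that cover different pillars.
# Neither layout comes from a real product; both are illustrative.
identity_event = {"user": "jdoe", "ts": 1700000000, "result": "login_failed"}
device_event = {
    "hostname": "laptop-42",
    "time": "2023-11-14T22:13:20+00:00",
    "status": "noncompliant",
}


def normalize_identity(event: dict) -> dict:
    """Map the identity tool's event (epoch seconds) to the shared shape."""
    return {
        "pillar": "identity",
        "timestamp": datetime.fromtimestamp(event["ts"], tz=timezone.utc).isoformat(),
        "subject": event["user"],
        "outcome": event["result"],
    }


def normalize_device(event: dict) -> dict:
    """Map the device tool's event (ISO 8601 string) to the shared shape."""
    parsed = datetime.fromisoformat(event["time"]).astimezone(timezone.utc)
    return {
        "pillar": "device",
        "timestamp": parsed.isoformat(),
        "subject": event["hostname"],
        "outcome": event["status"],
    }


# Once both events share one shape, a single query or rule can cover them.
unified = [normalize_identity(identity_event), normalize_device(device_event)]
for record in unified:
    print(record)
```

The existing tools keep emitting their own formats. Only a thin mapping layer is added, which is the sense in which open standards let agencies reuse current investments.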

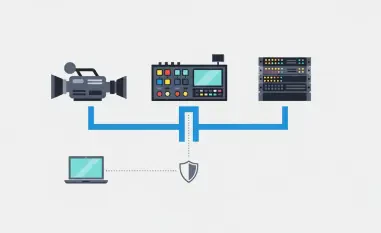

How can agencies make existing systems interoperable without having to replace old technology?

Interoperability can be achieved by layering new technologies that facilitate communication between existing tools. By using open standards, agencies can create a harmonized environment where data flows smoothly and securely between systems, enhancing overall efficiency while minimizing disruptions associated with adopting new technologies.

Why is embracing open standards crucial for data sharing in zero trust implementations?

Open standards are the backbone of seamless data integration across disparate tools. They ensure that data can be shared and utilized across various platforms without compatibility issues. This is especially important in a zero trust framework where the ability to quickly access and analyze data from multiple sources is essential for identifying and mitigating security threats.

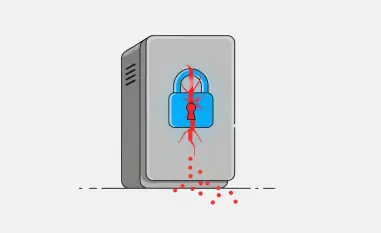

Could you explain how data unification serves as a foundational step in zero trust architecture?

Data unification consolidates various data sources into a single, accessible platform. This eliminates redundancies and enables efficient data processing and analysis, reducing costs associated with managing multiple data repositories. A unified data strategy transforms how agencies index and use their data, streamlining operations and enhancing security effectiveness.

How does consolidating data across multiple systems help reduce costs and eliminate overlapping capabilities?

By centralizing data management, agencies avoid duplicating efforts and paying multiple times for the same information. This consolidation reduces infrastructure requirements and integrates existing capabilities more effectively, ultimately cutting down operational costs and enhancing productivity.

What specific functionalities should a data platform have to be considered mission critical for zero trust?

A mission-critical data platform should support diverse environments, including multiple clouds and on-premises systems. It needs to facilitate real-time data analysis, ensuring security measures are proactive rather than reactive. The ability to operationalize data where it resides is key, along with the capability to search and retrieve data efficiently, regardless of its storage location.

How can Federal agencies effectively manage data residing in multiple clouds and on-premises environments?

Agencies should leverage platforms that offer a data mesh architecture, enabling them to deploy analytics capabilities directly where the data lives. This reduces costs associated with data migration and ensures that data remains accessible and secure, irrespective of its physical location.

Can you elaborate on Elastic’s data mesh architecture and its role in zero trust strategies?

Elastic’s data mesh architecture allows Elastic clusters to be deployed close to the data source while still supporting centralized searching and analysis. This approach minimizes the need for data duplication, enhancing efficiency while maintaining cost-effectiveness, and supports the comprehensive data strategy that a zero trust framework requires.
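The pattern described here, searching data where it lives and merging only the results, can be sketched in plain Python. This is not Elastic's API. The in-memory `CLUSTERS` dictionary, the cluster names, and the functions `search_local` and `federated_search` are hypothetical stand-ins for real clusters and queries.

```python
from typing import Dict, List

Record = Dict[str, str]

# Hypothetical stand-ins for clusters deployed next to each data source.
CLUSTERS: Dict[str, List[Record]] = {
    "cloud-a": [
        {"host": "web-1", "message": "failed login for jdoe"},
        {"host": "web-2", "message": "health check ok"},
    ],
    "on-prem": [
        {"host": "db-1", "message": "failed login for admin"},
    ],
}


def search_local(records: List[Record], term: str) -> List[Record]:
    """Run the query inside one cluster; only matches leave the cluster."""
    return [r for r in records if term in r["message"]]


def federated_search(clusters: Dict[str, List[Record]], term: str) -> List[Record]:
    """Fan the query out to every cluster and merge the matching records."""
    hits: List[Record] = []
    for name, records in clusters.items():
        for record in search_local(records, term):
            # Tag each hit with its origin so analysts know where it lives.
            hits.append({"cluster": name, **record})
    return hits


results = federated_search(CLUSTERS, "failed login")
for hit in results:
    print(hit)
```

The point of the design is that the full data sets never move. Each location answers the query locally and returns only the matching records to the central view.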

In what ways does Elastic’s platform offer cost advantages compared to traditional storage methods?

The platform uses a licensing model based on the memory used for indexing and searching data, rather than on data volume. This approach provides significant cost savings by optimizing data storage and retrieval processes, further supported by strategies like log compression and frozen tier storage, which reduce storage costs considerably.

How do Elastic’s licensing and storage strategies contribute to operational agility and cost control?

Elastic licenses its platform based on memory usage rather than data volume, providing a more flexible and cost-effective way to manage data storage. Its use of log compression and frozen tier storage offers additional savings, ensuring agencies maintain agility while controlling operational expenses.

What role do log compression and frozen tier storage play in reducing data storage costs?

By compressing logs and utilizing frozen tier storage, agencies drastically cut storage expenses. Compression reduces the data size while preserving essential information, and frozen tier storage holds rarely accessed data at low cost, so resources can be focused on frequently used data that demands quick retrieval.
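As a rough illustration of both ideas, the Python sketch below compresses a batch of repetitive log lines with the standard `gzip` module and assigns data to a storage tier by age. It does not reflect how Elastic implements compression or tiering. The sample log lines, the `choose_tier` function, and the 7-day and 90-day thresholds are assumptions made for the example.

```python
import gzip

# Synthetic, highly repetitive log lines (illustrative only).
log_lines = [
    f"2024-01-01T00:00:{i % 60:02d}Z INFO auth user=jdoe action=login status=ok"
    for i in range(1000)
]
raw = "\n".join(log_lines).encode("utf-8")

# Lossless compression: the original bytes can be fully recovered.
compressed = gzip.compress(raw)
print(f"raw bytes: {len(raw)}, compressed bytes: {len(compressed)}")


def choose_tier(age_days: int, hot_days: int = 7, frozen_after_days: int = 90) -> str:
    """Pick a storage tier from data age; thresholds are hypothetical."""
    if age_days <= hot_days:
        return "hot"      # recent data, fast and more expensive storage
    if age_days < frozen_after_days:
        return "warm"     # less frequently queried data
    return "frozen"       # rarely accessed data on the cheapest storage


for age in (1, 30, 365):
    print(age, "days ->", choose_tier(age))
```

Logs tend to compress well because they repeat the same field names and values, and an age-based policy keeps the fastest storage reserved for the data that is queried most often.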

Could you share a success story of an agency that effectively implemented zero trust architecture using Elastic?

Elastic’s implementation at the General Services Administration (GSA) demonstrates an effective zero trust strategy. By consolidating tools and indexing data once, GSA maintained its cybersecurity budget while enhancing security and user experience. This approach provides a model for efficiently balancing cost, security, and usability.

How did the General Services Administration maintain its cyber budget while enhancing its security posture with Elastic?

GSA optimized its budget by centralizing its tools and data, avoiding redundant logins, and improving its end-user experience. Such consolidation allowed GSA to meet zero trust requirements efficiently without inflating costs, underscoring the strategic advantage of using Elastic’s solutions.

What were the key improvements in the end user experience for GSA through implementing zero trust?

Implementing Elastic eliminated redundant logins, streamlining user access and enhancing overall experience. This not only simplified security protocols but also improved productivity by ensuring that users encountered fewer barriers when accessing necessary applications and resources.

How has Elastic been used to create a single threat-hunting platform in large Department of Defense enterprises?

Elastic enabled a unified threat-hunting platform capable of handling both enterprise-level and tactical security requirements. This consolidation provided a comprehensive view of threats, enhancing the Department of Defense’s ability to respond swiftly and effectively to potential security incidents.

Can you estimate the potential cost savings for agencies through smarter data strategies using Elastic?

Elastic’s smart data strategies can save a group of major agencies hundreds of millions of dollars annually. By prioritizing data unification and efficient storage, agencies significantly reduce operational costs while improving data accessibility and security.

What lessons can other agencies learn from GSA’s experience in implementing zero trust?

GSA’s success underscores the importance of strategic data management and tool consolidation. By focusing on interoperability and unified data strategy, agencies can maintain budget control while enhancing security measures, achieving a balanced and forward-thinking cybersecurity posture.

How can agencies move beyond compliance to future-proof their security posture with Elastic’s solutions?

Agencies should view compliance as a baseline rather than a goal. By investing in Elastic’s flexible and robust platform, they can stay ahead of emerging threats and ensure their security measures adapt to future challenges, securing their operations beyond mere compliance.