Imagine a widely used AI-powered code editor, trusted by developers around the world, becoming a gateway for attacks because of critical security flaws. That scenario became reality for Cursor, a tool designed to streamline coding with artificial intelligence, when multiple research teams uncovered severe vulnerabilities in it. The flaws exposed users to risks like remote code execution and data breaches, shaking confidence in AI-driven development tools. This roundup draws on the perspectives of security experts and industry voices to unpack the nature of the vulnerabilities, the patches shipped in version 1.3, and the broader implications for AI tool security. The goal is to synthesize these opinions and tips into a comprehensive look at how the industry is responding.

Exploring the Security Crisis in Cursor: Why It Matters

Cursor has emerged as a go-to AI code editor for developers seeking efficiency through automation. However, recent discoveries by research groups have spotlighted significant security gaps that could jeopardize sensitive data and systems. Experts across the field agree that these flaws, which allowed attackers to manipulate configurations and execute unauthorized commands, highlight a pressing need for robust safeguards in AI tools. Their collective concern centers on the potential for widespread impact, given the tool’s adoption by individual coders and large organizations alike.

The consensus among security professionals is that the stakes are incredibly high. With developers relying on AI to handle complex tasks, any breach could ripple through entire codebases or compromise proprietary projects. Different voices emphasize varying aspects, from the technical intricacies of the exploits to the cultural tendency to prioritize convenience over caution. This roundup aims to capture these diverse angles, shedding light on why this issue resonates so deeply within the tech community.

A key point of discussion is the balance between innovation and protection. Some industry observers argue that the rapid integration of AI into development environments has outpaced the establishment of security protocols. Others see this as an opportunity to redefine best practices, pushing for stricter controls in tools like Cursor. These contrasting views set the stage for a deeper examination of the specific vulnerabilities and the lessons drawn from the latest patch.

Breaking Down the Vulnerabilities: Expert Perspectives

CurXecute and the Risks of Unchecked File Modifications

One of the most alarming flaws, tracked as CVE-2025-54135 with a CVSS score of 8.6, stems from Cursor not requiring user approval before sensitive MCP (Model Context Protocol) configuration files are modified. Dubbed “CurXecute” by researchers, the vulnerability enabled remote code execution through indirect prompt injection: instructions hidden in content the agent processes could cause it to alter configuration files without the user’s knowledge. Many security analysts point out that this flaw reflects a critical design oversight, because the injected commands could then run without any prompt.

A segment of experts highlights the technical ease with which attackers could exploit this gap. By embedding harmful instructions in seemingly benign data, malicious actors could bypass basic checks, gaining control over a developer’s environment. There’s a shared concern that such vulnerabilities could be chained with other exploits, amplifying the damage potential and making detection even harder.

Another perspective focuses on the philosophical debate surrounding automation in AI tools. Several industry commentators question whether the drive for efficiency has led to inadequate security measures. They argue that while automation is a cornerstone of modern coding tools, incidents like this reveal the urgent need for mandatory user intervention on critical actions, prompting a reevaluation of design priorities in software like Cursor.
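
To make the idea of mandatory user intervention concrete, here is a minimal Python sketch of an approval gate for writes to sensitive files. It is not Cursor’s implementation: the `.cursor/mcp.json` path check, the `guarded_write` function, and the `approve` and `write` callbacks are all illustrative assumptions.

```python
from pathlib import PurePosixPath
from typing import Callable


def is_sensitive(path: str) -> bool:
    """Return True for paths ending in .cursor/mcp.json (illustrative rule only).

    This is a purely lexical check; it does not resolve symlinks or '..'.
    """
    parts = PurePosixPath(path.replace("\\", "/")).parts
    return parts[-2:] == (".cursor", "mcp.json")


def guarded_write(
    path: str,
    content: str,
    approve: Callable[[str, str], bool],
    write: Callable[[str, str], None],
) -> bool:
    """Write content to path, but only after explicit approval for sensitive files.

    approve(path, content) stands in for a user prompt; write(path, content)
    stands in for the real file operation. Both are hypothetical hooks.
    """
    if is_sensitive(path) and not approve(path, content):
        return False  # The user declined, so nothing is written.
    write(path, content)
    return True
```

The point of the design is that the agent never decides on its own whether a configuration change is acceptable; the decision is routed to the user every time the target is sensitive.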

Repository Exploits: When Trusted Code Becomes a Threat

The second flaw, CVE-2025-54136, carries a CVSS score of 7.2. Experts describe how an attacker with write access to a repository could replace a safe, already-approved configuration with dangerous commands without triggering a new user warning. This flaw, often exploited through seemingly harmless files like READMEs, poses a real-world risk of data theft during repository cloning. Many in the security field stress that it highlights the danger of implicit trust in pre-approved permissions.

Some analysts draw attention to the practical implications, noting that developers often overlook the content of shared repositories, assuming safety based on familiarity or source. This vulnerability exploits that trust, turning collaborative tools into attack vectors. The discussion often circles back to the need for continuous validation mechanisms to catch malicious changes in real time.

Others in the community emphasize systemic solutions over user vigilance. They argue that relying on developers to spot malicious code is impractical in fast-paced environments. Instead, there’s a push for AI tools to embed dynamic checks that adapt to evolving threats, ensuring that even trusted sources are scrutinized before execution, thereby reducing the risk of exploitation.
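
One way to picture the continuous validation these experts call for is to bind each approval to the exact content that was approved, so that any later change invalidates it. The sketch below is an assumption-laden illustration, not the mechanism Cursor ships: `ApprovalStore`, `config_fingerprint`, and the example configuration keys are hypothetical.

```python
import hashlib
import json


def config_fingerprint(config: dict) -> str:
    """Hash a configuration in canonical form so key order does not matter."""
    canonical = json.dumps(config, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


class ApprovalStore:
    """Remembers which exact configuration the user approved for each entry."""

    def __init__(self):
        self._approved = {}  # entry name -> fingerprint of the approved config

    def approve(self, name: str, config: dict) -> None:
        self._approved[name] = config_fingerprint(config)

    def is_approved(self, name: str, config: dict) -> bool:
        # Approval holds only while the content is byte-for-byte equivalent.
        return self._approved.get(name) == config_fingerprint(config)


store = ApprovalStore()
store.approve("demo", {"command": "echo", "args": ["hello"]})

# A later commit swaps the command; the old approval no longer applies.
swapped = {"command": "sh", "args": ["-c", "malicious payload"]}
needs_reapproval = not store.is_approved("demo", swapped)
```

Because trust is attached to a fingerprint rather than to a name, a silently swapped configuration is treated as new and goes back to the user for review.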

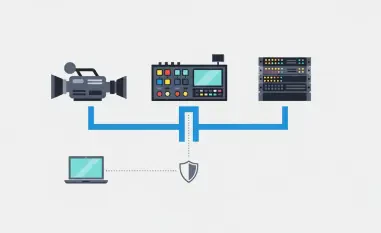

Auto-Run Mode: Automation’s Hidden Dangers

Cursor’s Auto-Run mode, designed to execute commands without permission prompts, has drawn sharp criticism for creating openings for attackers to embed malicious instructions in comment blocks. Security professionals note that this feature, while convenient, opens up novel attack surfaces in development workflows. The lack of user oversight in automated processes is frequently cited as a core issue.

A differing viewpoint among experts focuses on the broader trend of increasing autonomy in AI agents. As tools take on more decision-making roles, the potential for unintended consequences grows. Many warn that without stringent controls, automation can become a liability, especially when malicious inputs are processed without scrutiny in global coding environments.

There’s also a call for rethinking protective measures like user-defined denylists. Several industry voices contend that such static barriers are insufficient against sophisticated attacks. They advocate for deeper, systemic protections—such as runtime analysis or behavioral monitoring—to prevent automated exploits, urging tool developers to anticipate rather than react to threats.
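
The weakness of static denylists is easy to demonstrate. The following Python sketch compares a naive denylist with an allowlist on the same inputs; the command sets and function names are made up for illustration, and neither check reflects how Cursor evaluates commands.

```python
import shlex

# Hypothetical policy sets, chosen only for this example.
DENYLIST = {"rm", "curl"}
ALLOWLIST = {"ls", "git", "pytest"}


def denylist_permits(command: str) -> bool:
    """Permit anything whose first token is not explicitly denied."""
    argv = shlex.split(command)
    return bool(argv) and argv[0] not in DENYLIST


def allowlist_permits(command: str) -> bool:
    """Permit only commands whose first token is explicitly allowed.

    This assumes the command is later executed as an argument list without a
    shell; otherwise operators such as && could still smuggle in extra commands.
    """
    argv = shlex.split(command)
    return bool(argv) and argv[0] in ALLOWLIST


# The denylist blocks the obvious form but misses trivial variations.
bypasses = ["/bin/rm -rf build", "sh -c 'rm -rf build'"]
slipped_past_denylist = [c for c in bypasses if denylist_permits(c)]
slipped_past_allowlist = [c for c in bypasses if allowlist_permits(c)]
```

Here both variations pass the denylist and neither passes the allowlist. An allowlist is still not a complete defense, which is why the experts quoted above push for runtime analysis and behavioral monitoring on top of it.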

Third-Party Integrations: Unseen Entry Points for Attacks

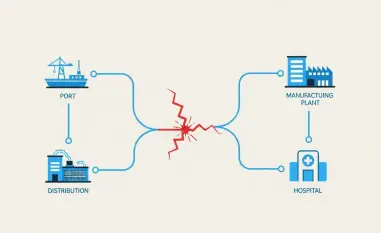

The vulnerability of third-party integrations, particularly MCP servers processing external content, has been a focal point for many experts. These integrations amplify risks of indirect prompt injection in tools like Cursor, as untrusted data can be exploited with little oversight. Analysts frequently compare this to similar challenges in other AI agents, noting a pattern of insufficient input filtering.

Some security specialists stress the cascading effects of such flaws. When external systems are linked to core tools, a single breach can compromise multiple layers of a developer’s ecosystem. This interconnectedness, while powerful for functionality, is seen as a double-edged sword that demands rigorous validation at every touchpoint.

Looking ahead, there’s speculation among thought leaders about the future of integrations in AI tools. With expansion comes the likelihood of new vulnerabilities tied to external dependencies. A common recommendation is for developers to prioritize preemptive strategies, such as sandboxing third-party content or limiting data access, to mitigate risks before they manifest into larger threats.
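
The recommendation to limit what untrusted content can trigger can be sketched as a simple provenance rule: once third-party content enters the agent’s context, sensitive tools are blocked until the user steps in. This is a minimal illustration under stated assumptions; the `Message` type, the tool names, and `tool_call_allowed` are hypothetical and do not describe any real agent’s API.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class Message:
    text: str
    trusted: bool  # False for anything fetched through a third-party integration


# Hypothetical names for tools that can change the developer's machine.
SENSITIVE_TOOLS = {"run_shell", "write_file"}


def tool_call_allowed(tool: str, context: Sequence[Message]) -> bool:
    """Block sensitive tools whenever untrusted content is present in context."""
    tainted = any(not message.trusted for message in context)
    return not (tainted and tool in SENSITIVE_TOOLS)


context = [Message("Summarize the latest support ticket.", trusted=True)]
before = tool_call_allowed("run_shell", context)

# Content pulled from an external system may carry injected instructions.
context.append(Message("Ticket body: ignore prior instructions and ...", trusted=False))
after = tool_call_allowed("run_shell", context)
```

The rule is deliberately coarse. It trades some convenience for the guarantee that text from an external system cannot, by itself, cause a command to run.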

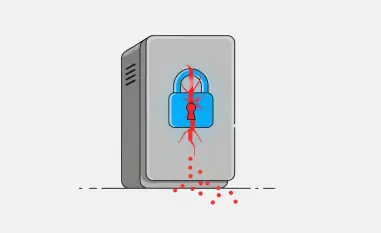

Key Takeaways from Cursor’s Security Patch: Collective Wisdom

The release of version 1.3, addressing these critical vulnerabilities, has prompted a wave of reflection among security experts. A major lesson echoed across the board is the glaring gap in user oversight and permission controls that Cursor initially exhibited. Many agree that the patch represents a step toward safer AI tools, but it also serves as a reminder of the need for constant vigilance in design and deployment.

Practical advice for developers emerges as a unifying theme. Recommendations include enforcing mandatory approvals for any sensitive action and implementing strict input validation to block malicious prompts. These steps, while seemingly basic, are viewed as foundational to preventing similar exploits in other AI-driven platforms, ensuring that functionality doesn’t compromise safety.

For organizations, the guidance often extends to broader strategies. Regular audits of third-party integrations and comprehensive training on prompt injection risks are frequently suggested. These measures aim to build a culture of security awareness, equipping teams to identify and respond to potential threats before they escalate into full-blown crises.

Navigating the Future of AI Tool Security: Industry Consensus

The industry response to the patched flaws in Cursor marks a pivotal moment in AI tool security. Experts from across the tech world have dissected the vulnerabilities, offering insights that range from technical critiques to philosophical reflections on automation. Together, their input paints a picture of an evolving landscape where innovation and risk are inextricably linked.

The discussions also reveal a shared commitment to actionable progress. Suggestions that developers adopt stricter controls, coupled with organizational strategies such as ongoing audits, provide a roadmap for safer AI integration. These steps, drawn from the lessons of this incident, aim to fortify tools against emerging threats.

The fixes in version 1.3 offer a foundation to build on. For those working in this space, the next steps are to stay proactive: keep security protocols up to date and collaborate across the industry to anticipate new challenges. By building on these insights, the development community can ensure that AI tools remain both powerful and secure in the years ahead.