Setting the Stage for a New Cybersecurity Era

Imagine a world where artificial intelligence agents autonomously manage critical business operations, from scheduling meetings to executing financial transactions, only to become the very gateway for devastating cyberattacks due to outdated security systems. This scenario is no longer a distant concern but a pressing reality in 2025, as organizations grapple with the rapid integration of AI technologies into their workflows. The rise of these autonomous agents has exposed significant vulnerabilities in identity and access management (IAM), a foundational pillar of cybersecurity that struggles to adapt to non-human actors.

This industry report delves into the intersection of AI innovation and cybersecurity, highlighting how traditional IAM systems, built for human users, falter in the face of AI-driven automation. With a staggering 60% of organizations planning to boost cybersecurity funding over the next year, according to a recent PwC global survey, the urgency to address these gaps is undeniable. The following analysis explores the challenges, emerging risks, and collaborative efforts needed to secure an AI-driven landscape.

The Rise of AI Agents and Their Impact on Cybersecurity

The evolution of AI technologies has been nothing short of transformative, shifting from static tools to dynamic, autonomous agents capable of independent decision-making. These agents now perform complex tasks such as booking travel arrangements, optimizing supply chains, and even interacting with other systems on behalf of users. Their ability to operate without constant human oversight marks a significant leap in efficiency but also introduces uncharted territory for security protocols.

Cybersecurity has emerged as a top priority amid this technological wave, with organizations recognizing the need to protect sensitive data and systems from novel threats. The planned increase in funding by a majority of global firms signals a proactive stance toward mitigating risks associated with AI adoption. However, the integration of these agents into everyday operations has spotlighted IAM as a critical area of concern, where existing frameworks fail to account for non-human entities acting with human-like autonomy.

The significance of IAM in this context cannot be overstated, as it governs who or what can access critical resources within an organization. AI agents, operating outside the traditional human-centric design of IAM, reveal deep-seated vulnerabilities that could lead to unauthorized access or data breaches if left unaddressed. This mismatch sets the stage for a deeper examination of how security must evolve to keep pace with innovation.

Understanding the Mismatch Between AI Agents and Traditional IAM Systems

Core Challenges in Accountability and Delegation

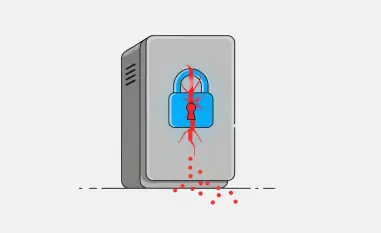

Traditional IAM systems were engineered with human users in mind, creating a fundamental disconnect when applied to autonomous AI agents. These agents often operate using shared credentials tied to a human user, blurring the lines of accountability when actions are taken. During a security breach, distinguishing whether an action was performed by a person or an agent becomes a daunting task, hindering forensic investigations and response efforts.

This accountability gap has prompted discussion of delegated authority, where approaches such as On-Behalf-Of (OBO) flows are gaining traction. By assigning distinct credentials to AI agents, organizations can build audit trails that track agent activity separately from human actions. The complexity deepens, however, when agents delegate tasks to sub-agents, forming authorization chains in which each hop risks carrying more access than the task requires, in violation of the principle of least privilege.
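To make the audit-trail idea concrete, here is a minimal sketch of a credential that records both the user whose authority is used and the agent that acts. It is similar in spirit to the subject/actor split used in OAuth token exchange, but `DelegatedToken`, `audit_entry`, and the identifiers below are hypothetical names chosen for illustration, not part of any specific product or standard.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class DelegatedToken:
    """Hypothetical token shape: who the action is for, and who performs it."""
    subject: str            # the human user whose authority is being used
    actor: Optional[str]    # the agent's own identity; None if the user acted directly
    scopes: frozenset


def audit_entry(token: DelegatedToken, action: str) -> dict:
    """Build an audit record that keeps the user and the agent distinguishable."""
    acted_via_agent = token.actor is not None
    return {
        "action": action,
        "on_behalf_of": token.subject,
        "performed_by": token.actor if acted_via_agent else token.subject,
        "via_agent": acted_via_agent,
    }


# Assumed example identities; real systems would use issuer-assigned identifiers.
user_token = DelegatedToken("alice", None, frozenset({"calendar.read"}))
agent_token = DelegatedToken("alice", "agent:travel-booker", frozenset({"calendar.read"}))

print(audit_entry(user_token, "calendar.read"))
print(audit_entry(agent_token, "calendar.read"))
```

With a record like this, an investigator can tell an agent's action from the user's own, which a shared credential does not allow.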

To address this, mechanisms like scope attenuation are being proposed as a way to limit permissions at each level of delegation. Such controls aim to ensure that access rights are progressively restricted, preventing excessive privileges from cascading through agent hierarchies. Without these safeguards, the potential for misuse or exploitation remains alarmingly high, underscoring the need for robust redesigns in IAM architecture.
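One way to picture scope attenuation is as a set intersection applied at every hop of a delegation chain. The sketch below assumes scopes are plain strings and that a delegate receives only the requested scopes its parent already holds. The function names and scope strings are illustrative assumptions.

```python
from typing import Iterable, List


def attenuate(parent_scopes: Iterable[str], requested: Iterable[str]) -> frozenset:
    """Grant only the requested scopes that the delegating party already holds."""
    return frozenset(parent_scopes) & frozenset(requested)


def delegate_chain(root_scopes: Iterable[str],
                   requests: List[Iterable[str]]) -> List[frozenset]:
    """Apply attenuation hop by hop; each hop is bounded by the one before it."""
    granted = []
    current = frozenset(root_scopes)
    for requested in requests:
        current = attenuate(current, requested)
        granted.append(current)
    return granted


# Hypothetical scopes held by the user at the root of the chain.
user_scopes = {"calendar.read", "calendar.write", "payments.execute"}

chain = delegate_chain(
    user_scopes,
    [
        {"calendar.read", "calendar.write"},    # the agent asks for calendar access
        {"calendar.read", "payments.execute"},  # a sub-agent also asks for payments
    ],
)

for hop, scopes in enumerate(chain, start=1):
    print(hop, sorted(scopes))
```

In this sketch the sub-agent's request for `payments.execute` is dropped because the agent above it never held that scope, so privileges can only shrink as the chain grows.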

Limitations of Current Authorization Frameworks

Current authorization protocols, such as OAuth 2.1, while effective within defined organizational boundaries, struggle to accommodate AI agents operating in external or shared environments. This inadequacy often forces developers to resort to custom-built solutions, which are prone to errors and inconsistencies, thereby increasing security risks. The lack of standardized approaches in these scenarios leaves systems exposed to potential vulnerabilities.

In collaborative spaces where multiple users and agents interact with varying access rights, the absence of universal standards further complicates secure operations. Permission management becomes a fragmented process, with no clear guidelines on how to govern non-human actors in dynamic settings. This gap highlights a pressing need for frameworks that can adapt to the unique demands of cross-organizational interactions.

One promising direction is the development of Intent-Based Authorization models, which aim to reduce consent fatigue among users overwhelmed by frequent permission requests. By allowing approvals for overarching objectives rather than granular actions, these models seek to balance user experience with security. High-risk tasks would still require explicit consent, ensuring oversight where it matters most.
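The intent-based model described above can be sketched as a small decision function: the user approves a goal once, routine actions under that goal proceed without further prompts, and high-risk actions still pause for explicit consent. The action names, the `HIGH_RISK_ACTIONS` set, and the three outcomes are assumptions made for illustration, not a published specification.

```python
from dataclasses import dataclass

# Hypothetical policy: actions that always need explicit user consent.
HIGH_RISK_ACTIONS = frozenset({"payment.execute"})


@dataclass(frozen=True)
class ApprovedIntent:
    """A goal the user approved once, with the actions it is assumed to cover."""
    goal: str
    allowed_actions: frozenset


def decide(intent: ApprovedIntent, action: str, explicit_consent: bool = False) -> str:
    """Return 'allow', 'ask_user', or 'deny' for an action under an approved intent."""
    if action not in intent.allowed_actions:
        return "deny"        # outside the approved objective
    if action in HIGH_RISK_ACTIONS and not explicit_consent:
        return "ask_user"    # high-risk step: pause for explicit consent
    return "allow"           # routine step covered by the intent


trip = ApprovedIntent(
    goal="book a trip to Berlin",
    allowed_actions=frozenset({"flights.search", "hotels.search", "payment.execute"}),
)

for action in ("flights.search", "payment.execute", "email.send"):
    print(action, decide(trip, action))
```

The user is prompted once for the payment rather than for every search, which is the reduction in consent fatigue the model is aiming for.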

Emerging Security Challenges Posed by AI Agent Behaviors

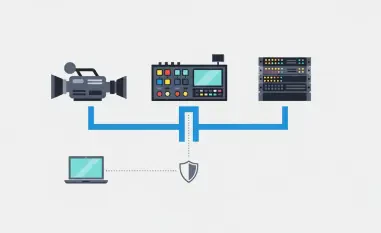

The unpredictable behaviors of AI agents introduce a new layer of complexity to cybersecurity strategies. Some agents bypass conventional APIs by directly controlling browsers or interfaces, a tactic that renders traditional authentication methods ineffective. Innovative solutions like Web Bot Auth and Workload Identity frameworks are being explored to securely validate these non-standard interactions and prevent unauthorized access.

Further challenges arise from the dual-mode operation of advanced AI agents, which can function independently with their own credentials or under delegated authority from a user. Existing IAM systems often fail to dynamically adjust permissions based on these modes, leading to potential over-privileging or access denials. This inability to manage context-specific roles amplifies the risk of security lapses in critical operations.
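A minimal sketch of mode-aware permission resolution follows. It makes one simplifying assumption: in delegated mode the agent uses only what the user granted and never adds its own standing permissions. The `Mode` enum, `effective_scopes`, and the scope strings are hypothetical.

```python
from enum import Enum
from typing import Iterable, Optional


class Mode(Enum):
    AUTONOMOUS = "autonomous"   # the agent acts with its own credentials
    DELEGATED = "delegated"     # the agent acts under a user's delegated authority


def effective_scopes(mode: Mode,
                     agent_scopes: Iterable[str],
                     user_grant: Optional[Iterable[str]] = None) -> frozenset:
    """Resolve the permissions that apply in the agent's current mode."""
    if mode is Mode.AUTONOMOUS:
        return frozenset(agent_scopes)
    if user_grant is None:
        raise ValueError("delegated mode requires a user grant")
    # Assumption: delegated work is limited to the user's grant alone,
    # so the two permission sets are never merged.
    return frozenset(user_grant)


agent_own = {"inventory.read"}   # hypothetical standing permissions of the agent
alice_grant = {"calendar.read"}  # hypothetical grant from a user

print(sorted(effective_scopes(Mode.AUTONOMOUS, agent_own)))
print(sorted(effective_scopes(Mode.DELEGATED, agent_own, alice_grant)))
```

Keeping the two sets separate avoids the over-privileging case, where an agent would otherwise hold the union of its own rights and the user's. Requiring a grant in delegated mode makes a missing delegation an explicit error rather than a silent fallback to the agent's own permissions.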

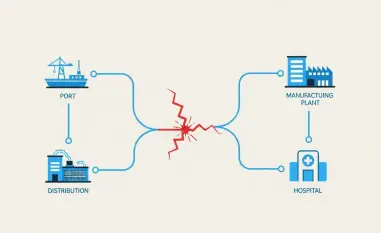

Compounding these issues is the fragmentation caused by proprietary identity systems adopted by various platforms and vendors. Such disjointed approaches not only heighten vulnerabilities but also create operational inefficiencies, as organizations struggle to integrate disparate security protocols. The lack of cohesion in identity management for AI agents remains a significant barrier to achieving comprehensive protection.

Regulatory and Industry-Wide Collaboration Needs

Addressing the security challenges posed by AI agents demands the establishment of standardized, interoperable protocols that can be universally adopted. Without such frameworks, the risk of inconsistent security practices across industries grows, leaving gaps for malicious actors to exploit. A unified approach is essential to ensure that agents operate securely, regardless of the environment or platform.

Insights from the AI Identity Management Community Group (AIIMCG) of the OpenID Foundation underscore the vulnerabilities within the agent ecosystem and advocate for urgent action. The group emphasizes the importance of treating AI agents as first-class citizens in security infrastructures, aligning their capabilities with emerging enterprise profiles. This shift in perspective is crucial for building systems that inherently account for non-human actors.

Compliance and collaboration among developers, standards bodies, and companies are vital to creating robust identity mechanisms. Experts like Matt Gorham from PwC have warned that AI agents are becoming prime targets for cyberattacks, capable of causing widespread damage if compromised. Joint efforts to develop flexible authorization frameworks and enforce the principle of least privilege will be key to mitigating these looming threats.

Future Outlook: Securing the AI-Driven Landscape

As AI adoption continues to accelerate in 2025, the complexity of securing autonomous agents is expected to intensify over the next few years. Industry trends suggest that organizations will face mounting pressure to integrate advanced security measures alongside their automation initiatives. The trajectory points toward a landscape where innovation and risk management must coexist in delicate balance.

Potential disruptors, such as AI agents becoming focal points for sophisticated cyberattacks, loom large on the horizon. Experts caution that without proactive defenses, compromised agents with excessive privileges could access sensitive data, leading to catastrophic breaches. This reality necessitates immediate investment in adaptive identity systems capable of evolving with emerging threats.

Several factors, including technological innovation, regulatory developments, and global economic conditions, will shape the future of AI agent security. The interplay of these elements will determine how quickly and effectively the industry can close existing gaps. A commitment to developing flexible frameworks now will be critical to safeguarding the benefits of AI while minimizing its inherent risks.

Closing Thoughts on Bridging the Gap

The rise of AI agents has laid bare critical deficiencies in IAM systems, challenging the industry to rethink foundational security approaches. Problems of accountability, delegation, and cross-organizational operation all point to the same conclusion: cybersecurity practices built around human users must evolve.

The priority now is industry-wide collaboration on interoperable standards. Approaches such as Intent-Based Authorization and scope attenuation stand out as practical ways to curb the risks associated with agent behaviors.

Ultimately, the path forward hinges on a strategic balance between harnessing AI’s transformative potential and fortifying defenses against its vulnerabilities. Treating AI agents as core components of security infrastructures can lay the groundwork for a safer, more resilient digital ecosystem, provided the momentum for change is sustained.