Unveiling Shadow AI: A Hidden Danger in the Digital Age

Imagine a scenario where a company’s most sensitive data—trade secrets, customer information, and strategic plans—is unknowingly exposed through a simple chatbot interaction by an employee seeking to streamline a task. This isn’t a far-fetched nightmare but a growing reality driven by the rise of Shadow AI, an emerging cybersecurity concern that keeps Chief Information Security Officers (CISOs) awake at night. With a McKinsey survey revealing that over 75% of firms now integrate AI into at least one business function, the rapid adoption of these technologies signals unprecedented efficiency gains. However, beneath this promise lies a significant risk: unmanaged and unauthorized AI usage that bypasses organizational oversight.

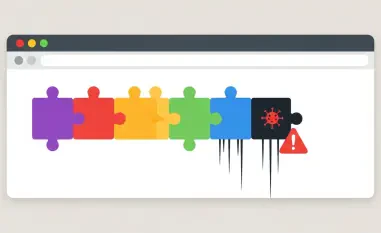

The concept of Shadow AI refers to employees deploying AI tools, such as public large language models (LLMs) or independent software-as-a-service (SaaS) applications, without the knowledge or approval of IT and security teams. While these tools can boost productivity, their unmonitored use creates vulnerabilities that can undermine data security and regulatory compliance. For CISOs, this represents not just a technical challenge but a governance crisis that threatens to erode the very benefits AI offers. Addressing this hidden danger is no longer optional; it’s a critical priority for safeguarding organizational integrity.

This guide aims to equip CISOs and security leaders with actionable insights to identify, manage, and mitigate the risks posed by Shadow AI. By exploring its origins, dissecting its dangers, and providing a step-by-step framework for defense, the following sections lay out a comprehensive approach to transform this threat into an opportunity for stronger security practices. The urgency to act is clear, as unchecked AI usage continues to grow, demanding immediate attention to protect digital assets in an increasingly complex landscape.

The Rise of AI and the Roots of Shadow AI Risk

The proliferation of AI technologies, particularly LLMs like ChatGPT and Google’s Gemini, has reshaped the corporate landscape, and these tools have become indispensable for maintaining a competitive edge. Businesses across industries rely on them for tasks ranging from data analysis to automated content creation, driving efficiency and innovation at an unprecedented scale. However, this rapid integration has outpaced the development of robust governance frameworks, creating fertile ground for risks to emerge outside the purview of traditional security measures.

Shadow AI, defined as the unauthorized and unmonitored use of AI tools by employees, has emerged as a direct consequence of this unchecked adoption. Often driven by a desire for quick solutions, staff members turn to publicly available AI platforms without considering the security implications, inadvertently exposing sensitive information. According to IBM’s Cost of a Data Breach Report for the current year, 20% of organizations report staff using unsanctioned AI tools, highlighting the scale of this hidden threat. Additionally, a report by RiverSafe notes that one in five UK companies has experienced data exposure due to employee interactions with generative AI, underscoring the real-world consequences.

The roots of this risk lie in the accessibility of modern AI tools, which require little technical expertise and are therefore within reach of almost any employee. Unlike enterprise-grade solutions with built-in safeguards, public AI models often lack transparency regarding data handling, leaving organizations blind to potential breaches. For CISOs, this backdrop explains why Shadow AI matters and why it demands a tailored response distinct from traditional cybersecurity challenges.

Dissecting the Dangers: How Shadow AI Undermines Security

Stage 1: Data Exposure Through Unvetted AI Tools

One of the most immediate risks of Shadow AI is the potential for data exposure when employees use unvetted, public AI tools for work-related tasks. By inputting confidential information into platforms like consumer-grade chatbots, staff members may unknowingly share proprietary data with systems outside organizational control. Google research indicates that 77% of UK cyber leaders attribute a rise in security incidents to generative AI, pointing to inadvertent data leakage as a primary concern.

Anton Chuvakin, a security advisor at Google Cloud, has emphasized the gravity of this issue, noting that when employees paste sensitive meeting notes or client details into unapproved chatbots for summarization, they risk handing over critical information to systems that may store or misuse it. Without visibility into these interactions, security teams struggle to protect assets they cannot see, let alone control. This lack of oversight turns a seemingly benign productivity hack into a gateway for significant breaches.

Hidden Data Trails: The Risk of Retention and Reuse

Beyond the initial act of data sharing, a deeper concern arises from how public AI models handle inputted information over time. Many of these systems retain user data for training purposes, meaning that a single interaction could lead to prolonged exposure as the data is reused in future model iterations. This hidden data trail amplifies the risk, as sensitive information may resurface in unexpected contexts, accessible to third parties or even malicious actors, long after the original input.

Stage 2: AI Hallucinations Leading to Misinformed Decisions

Another critical danger of Shadow AI lies in the phenomenon of AI hallucinations, where poorly trained or unverified models produce outputs that appear credible but are factually incorrect. When employees rely on such tools for decision-making without validation, the resulting errors can lead to flawed business strategies or operational missteps. This issue is particularly concerning in high-stakes environments where accuracy is paramount.

Unreliable Outputs: The Cost of Bad Data

The reliance on unchecked AI outputs erodes trust in decision-making processes, as organizations cannot guarantee the integrity of the information being used. Bad data from hallucinating models can cascade through systems, leading to costly mistakes or reputational damage. For CISOs, mitigating this risk requires not only technical controls but also cultural shifts to ensure employees understand the limitations of unapproved AI tools and the importance of validation.

Stage 3: Shadow AI vs. Shadow IT – A Unique Challenge

While Shadow AI shares similarities with the longstanding issue of Shadow IT—unauthorized use of cloud apps and services—it presents distinct challenges that traditional solutions cannot fully address. Shadow IT often leaves detectable traces, such as unusual network traffic, which tools like Cloud Access Security Brokers (CASBs) can monitor. However, Shadow AI operates on a different plane, often evading conventional detection methods due to its integration into everyday workflows.

Dan Lohrmann, Field CISO at Presidio, highlights that Shadow AI introduces complexities around licensing, application sprawl, and compliance that go beyond Shadow IT. The rapid pace of AI adoption and the intricate nature of its underlying technology further complicate oversight, making it challenging for organizations to maintain control. Security teams must grapple with additional considerations, such as training needs and workflow integration, which demand a more nuanced approach to governance.

Invisible Interactions: Why AI Evades Traditional Controls

A key differentiator is the invisibility of Shadow AI interactions, which often occur within personal browsers or devices without leaving a corporate footprint. As Chuvakin points out, unlike Shadow IT, where unauthorized tools might trigger alerts through access attempts, Shadow AI blends seamlessly with regular online activity. This lack of traceability makes it nearly impossible to monitor with standard tools, necessitating innovative strategies to regain control over these elusive threats.

Why Banning AI Tools Won’t Solve the Problem

Banning AI tools is a response that many organizations have considered, and Samsung’s 2023 ban following data leaks is a high-profile example. While the intent behind such prohibitions is to protect sensitive information, they rarely address the root cause of unauthorized usage: employees want faster ways to get their work done. In a distributed workforce, enforcing a ban is also a logistical nightmare, as employees can easily access AI models on personal devices outside corporate networks.

Experts like Diana Kelley, CISO at Noma Security, argue that outright bans risk placing companies at a competitive disadvantage by stifling innovation and productivity gains that AI enables. Similarly, Anton Chuvakin describes such measures as mere “security theater,” ineffective in preventing usage and likely to drive it underground. This hidden adoption on less secure platforms only heightens exposure, as security teams lose any chance of visibility or mitigation.

The broader implication is that bans create a false sense of security while ignoring the inevitability of AI integration in modern business. Employees will continue seeking efficiency through these tools, whether sanctioned or not, making prohibition a short-sighted tactic. Instead, the focus should shift toward managing usage through policies and safeguards that acknowledge AI’s value while addressing its risks head-on, a perspective that forms the basis for the strategies outlined next.

Building a Robust Defense: Strategies to Manage Shadow AI

Step 1: Discover and Approve AI Tools Effectively

The first critical step in managing Shadow AI is to gain visibility into the tools employees are using across the enterprise, since the scope of the risk cannot be assessed without it. This involves identifying which AI applications are in use, who is accessing them, and what data they handle. Specialized vendor solutions can assist in this discovery process, enabling security teams to map out the extent of unauthorized usage and prioritize their response based on risk levels.
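As a rough illustration of what discovery can look like in practice, the sketch below scans a web proxy log for traffic to known AI services and reports which users reached them and how much data was sent. Everything specific here is an assumption made for the example: the CSV columns (`user`, `host`, `bytes_out`), the short hand-maintained domain list, and the function names. A commercial discovery product would work from far richer telemetry.

```python
import csv
import io
from collections import defaultdict

# Illustrative, hand-maintained list (an assumption for this sketch);
# a real inventory of AI services would be much longer.
AI_DOMAINS = {"chatgpt.com", "chat.openai.com", "gemini.google.com", "claude.ai"}


def is_ai_host(host: str) -> bool:
    """True if host is a listed AI domain or a subdomain of one."""
    host = host.lower().rstrip(".")
    return any(host == d or host.endswith("." + d) for d in AI_DOMAINS)


def summarize_ai_usage(log_file):
    """Return {host: {"users": set, "bytes_out": int}} for AI hosts only.

    Expects CSV rows with hypothetical columns: user, host, bytes_out.
    """
    usage = defaultdict(lambda: {"users": set(), "bytes_out": 0})
    for row in csv.DictReader(log_file):
        if is_ai_host(row["host"]):
            entry = usage[row["host"].lower()]
            entry["users"].add(row["user"])
            entry["bytes_out"] += int(row["bytes_out"])
    return dict(usage)


SAMPLE_LOG = """user,host,bytes_out
alice,chatgpt.com,2048
bob,intranet.example.com,512
alice,claude.ai,100
carol,chatgpt.com,4096
"""

if __name__ == "__main__":
    report = summarize_ai_usage(io.StringIO(SAMPLE_LOG))
    for host, info in sorted(report.items()):
        print(host, sorted(info["users"]), info["bytes_out"])
```

A report like this answers two of the three discovery questions (which tools, which users). What data those tools handle usually requires content inspection, which proxy logs alone cannot provide.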

Once identified, a structured approval process must be established to distinguish between consumer-grade and enterprise-grade AI tools. Chuvakin recommends approving only those platforms that meet stringent security criteria, ensuring that employees have access to safe alternatives. This proactive approach prevents the need for unsanctioned tools by aligning available options with business needs, reducing the temptation to bypass official channels.

Setting Guardrails: Balancing Access and Security

Clear guardrails balance accessibility with protection. Criteria for approval should include encryption by default, data residency controls, and assurances that customer data isn’t used for training purposes. Providing an exception process for low-risk use cases, as Chuvakin suggests, further ensures flexibility without compromising safety. These measures, coupled with accessible secure alternatives, help maintain productivity while minimizing vulnerabilities.
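The approval criteria above can be encoded as a simple, auditable check. The following is a minimal sketch, not a complete vendor assessment: the `AIToolProfile` fields, the `review_tool` function, and the three decision labels are hypothetical names chosen for this example, and the attribute values would in practice come from a vendor questionnaire or contract review.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AIToolProfile:
    """Hypothetical record of what a vendor review found about a tool."""
    name: str
    encrypts_by_default: bool
    data_residency_controls: bool
    trains_on_customer_data: bool


def review_tool(tool: AIToolProfile, low_risk_exception: bool = False):
    """Return (decision, unmet_criteria) for one tool.

    decision is "approved", "exception", or "rejected".
    """
    unmet = []
    if not tool.encrypts_by_default:
        unmet.append("encryption by default")
    if not tool.data_residency_controls:
        unmet.append("data residency controls")
    if tool.trains_on_customer_data:
        unmet.append("no training on customer data")

    if not unmet:
        return "approved", unmet
    if low_risk_exception:
        # The exception path still records which criteria were waived.
        return "exception", unmet
    return "rejected", unmet


if __name__ == "__main__":
    consumer_bot = AIToolProfile("consumer-chatbot", True, False, True)
    print(review_tool(consumer_bot))
    print(review_tool(consumer_bot, low_risk_exception=True))
```

Returning the unmet criteria alongside the decision keeps the exception process transparent, since a waived tool still carries a record of what it failed.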

Step 2: Implement Tailored Safeguards for AI Usage

For approved AI tools, ongoing safeguards must be tailored to address the unique risks they present. Standard IT security measures like access control remain relevant but need adaptation for AI-specific challenges. Monitoring both inputs and outputs for sensitive data, as Chuvakin advises, becomes a priority to prevent leakage or misuse during interactions with these systems.

Integrating data loss prevention (DLP) systems into AI workflows offers an additional layer of defense by flagging potential issues before data leaves the organization. Diana Kelley emphasizes the importance of runtime monitoring, particularly for AI agents prone to autonomous drift. These technical controls, when consistently applied, create a robust framework to manage usage without stifling innovation, ensuring that security evolves alongside technology.
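To make input monitoring concrete, here is a minimal sketch of a DLP-style filter that inspects a prompt before it is sent to an AI tool and redacts two kinds of sensitive data. It is an assumption-laden illustration rather than a description of any particular DLP product: the patterns cover only email addresses and payment card numbers (validated with the Luhn checksum), and the function names and placeholder strings are invented for the example.

```python
import re

EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
# 13 to 19 digits, optionally separated by single spaces or hyphens.
CARD_RE = re.compile(r"\b\d(?:[ -]?\d){12,18}\b")


def luhn_valid(digits: str) -> bool:
    """Luhn checksum, used to cut false positives on long digit runs."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:  # double every second digit from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def scan_prompt(text: str):
    """Return (redacted_text, findings) for a prompt about to leave the org."""
    findings = []

    def redact_email(match):
        findings.append("email")
        return "[REDACTED_EMAIL]"

    def redact_card(match):
        digits = re.sub(r"\D", "", match.group())
        if luhn_valid(digits):
            findings.append("card_number")
            return "[REDACTED_CARD]"
        return match.group()  # not a plausible card number, leave as is

    text = EMAIL_RE.sub(redact_email, text)
    text = CARD_RE.sub(redact_card, text)
    return text, findings


if __name__ == "__main__":
    prompt = "Summarize: card 4111 1111 1111 1111 belongs to bob@example.com"
    print(scan_prompt(prompt))
```

The same function can be applied to model outputs. Whether to redact, block, or merely log a finding is a policy decision that this sketch leaves open.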

Continuous Oversight: Adapting to AI’s Unique Risks

Continuous oversight through logging and auditing AI activity is vital for detecting deviations and refining policies over time. Kelley suggests capturing inputs, outputs, and agent interactions for forensic analysis, while baselining access patterns to flag anomalies. Periodic audits of usage and outputs ensure that controls remain effective, allowing organizations to adapt to emerging risks and maintain a proactive stance against potential threats.
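Baselining access patterns can start with very simple statistics. The sketch below compares each user’s request count for the current day against their own history and flags counts that sit well above the usual range. The data shapes, the `flag_anomalies` name, the three-standard-deviation threshold, and the five-day minimum history are all assumptions chosen for illustration; a production system would tune these and likely use richer signals than daily counts.

```python
from statistics import mean, pstdev


def flag_anomalies(history, today, threshold=3.0, min_days=5):
    """Flag users whose AI request count today is far above their baseline.

    history: {user: [daily request counts]}  (hypothetical audit-log rollup)
    today:   {user: request count so far today}
    Returns {user: reason} for flagged users only.
    """
    flagged = {}
    for user, count in today.items():
        past = history.get(user, [])
        if len(past) < min_days:
            # Too little history to judge; surface for manual review.
            flagged[user] = "no baseline"
            continue
        mu = mean(past)
        sigma = pstdev(past)
        # Floor sigma at 1 so a perfectly flat history does not flag
        # a change of a single request.
        limit = mu + threshold * max(sigma, 1.0)
        if count > limit:
            flagged[user] = f"{count} requests vs. baseline {mu:.1f}"
    return flagged


if __name__ == "__main__":
    history = {"alice": [10, 12, 11, 9, 10], "bob": [5, 5, 5, 5, 5]}
    today = {"alice": 40, "bob": 6, "carol": 3}
    print(flag_anomalies(history, today))
```

Flagging users with no baseline, rather than ignoring them, reflects the point that new or previously unseen AI usage is itself worth a look.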

Step 3: Foster Employee Awareness and Collaboration

Educating employees about the risks of Shadow AI and the availability of approved alternatives forms a cornerstone of any effective strategy. Training programs should clearly outline what constitutes unauthorized usage, the associated dangers, and the secure tools provided by the organization. This awareness helps staff make informed decisions, reducing the likelihood of accidental exposure through unvetted platforms.

Beyond education, fostering a collaborative culture is equally important. Security teams should position themselves as enablers rather than blockers, encouraging open dialogue about AI needs. Kelley advocates for regular communication of successes and lessons learned from pilot projects, alongside quick-response support for issues. This approach builds trust, ensuring adherence to policies while supporting responsible experimentation.

Two-Way Dialogue: Understanding Employee Needs

A two-way dialogue between staff and security teams allows organizations to understand why employees turn to AI tools and adapt policies accordingly. By addressing underlying motivations—whether for speed, creativity, or problem-solving—guardrails can be tailored to support legitimate use cases. This mutual understanding not only curbs Shadow AI but also drives innovation, aligning security with business objectives in a meaningful way.

Key Takeaways: Navigating the Shadow AI Landscape

- Shadow AI introduces distinct risks, such as data exposure and hallucinations, setting it apart from traditional Shadow IT challenges.

- Banning AI tools proves ineffective and counterproductive, potentially leading to competitive disadvantages and underground usage.

- Effective management strategies include discovering and approving tools, implementing tailored safeguards, and prioritizing employee training.

- Collaboration between security teams and employees is crucial to balance innovation with safety, ensuring policies are both practical and protective.

Looking Ahead: Shadow AI in the Evolving Cyber Threat Landscape

As AI becomes increasingly embedded in business systems, Shadow AI is poised to exacerbate existing cybersecurity threats, creating a ripple effect across the threat landscape. The potential for malicious actors to exploit unmonitored AI interactions—whether through data theft or manipulation—adds a layer of urgency to addressing this issue. CISOs must anticipate how these risks evolve, preparing for scenarios where AI vulnerabilities intersect with other attack vectors like phishing or ransomware.

The need for dynamic governance frameworks is evident, as static policies will struggle to keep pace with rapid technological advancements. Emerging regulatory developments may also shape AI usage, requiring organizations to align with compliance mandates while maintaining operational flexibility. Staying ahead of these trends demands vigilance and adaptability, ensuring that security strategies remain relevant in a shifting environment.

Proactive engagement with industry standards and collaboration with peers can further inform approaches to Shadow AI. As the integration of AI deepens, the focus should extend beyond immediate risks to long-term resilience, building systems that not only mitigate threats but also leverage AI’s potential securely. This forward-looking perspective is essential for navigating the complexities of tomorrow’s digital ecosystem.

Final Thoughts: Turning Shadow AI from Threat to Opportunity

Shadow AI demands a multifaceted response from CISOs and security teams. Understanding its risks, from data exposure to decision-making errors, lays the foundation for strategies that encompass tool discovery, tailored safeguards, and employee collaboration. Each action builds toward a stronger defense, transforming a potential vulnerability into a managed aspect of cybersecurity.

Outright bans prove inadequate, often exacerbating the very issues they aim to solve. The path forward is instead paved with visibility and proactive governance, an approach that lets organizations harness AI’s benefits without compromising safety. The next steps involve deepening cultural shifts within organizations, advocating for security as an enabler of innovation, and staying attuned to regulatory and technological shifts.

Ultimately, Shadow AI, though a looming threat, offers a unique chance to refine security practices. CISOs should invest in advanced monitoring solutions, foster ongoing dialogue with staff, and explore partnerships with AI security vendors to stay ahead of risks. By embracing this opportunity, organizations can not only protect their assets but also position themselves as leaders in a digitally secure future.