With a career dedicated to defending multinational corporations from the digital shadows, Malik Haidar has a rare perspective on the patterns that connect disparate cyberattacks. He sees beyond individual incidents to the underlying strategies threat actors reuse and refine. Today, we delve into his analysis of the latest threats, exploring how attackers are exploiting the very tools we trust for work and daily life—from collaboration software and browser extensions to the fundamental components of web development—and what this escalating abuse of trust means for our collective security.

The RondoDox botnet exploited the critical React2Shell flaw in IoT devices for nine months. How do such long-running campaigns persist undetected, and what practical steps should developers using React Server Components take to mitigate this specific remote code execution risk? Please provide some step-by-step details.

It’s unsettling but not surprising that a campaign like RondoDox could run for nine months. These operations persist because they target the blind spots in our digital infrastructure, specifically the vast, unmanaged landscape of IoT devices. Many of these devices are deployed and then forgotten, receiving little to no security oversight or timely patching. When a critical vulnerability like React2Shell emerges, one with a CVSS score of 10.0, it’s a goldmine. Attackers automate their scans and exploit it immediately, while defenders are often struggling just to identify which of their thousands of devices are even vulnerable. With nearly 85,000 instances still susceptible as of early this year, more than 66,000 of them in the U.S. alone, the attack surface is massive and fragmented, making comprehensive detection a nightmare.

For developers, the mitigation strategy has to be proactive and layered. First, immediate dependency management is crucial. You must audit your projects to identify any use of vulnerable versions of React Server Components or Next.js and update them immediately. Don’t wait. Second, implement strict input validation and sanitization on any data that flows into server components. Assume any unauthenticated input is hostile. Third, developers should re-architect where possible to limit the exposure of server components to unauthenticated users. Finally, continuous security scanning within your CI/CD pipeline is non-negotiable. This isn’t just about finding vulnerabilities in your own code, but in the third-party libraries that form its foundation. That’s how you shrink the window of opportunity for attackers.
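
The dependency audit in the first step can be sketched as a small check that runs in a CI job. This is a minimal illustration, not a replacement for a real software composition analysis tool: the function names are hypothetical, and the package names and version floors in the usage example are placeholders, not the actual patched releases, which should be taken from the vendor advisories.

```python
import re


def parse_version(spec: str) -> tuple[int, ...]:
    """Extract the first x.y.z number from a spec such as '^1.2.3'."""
    match = re.search(r"(\d+)\.(\d+)\.(\d+)", spec)
    if match is None:
        raise ValueError(f"unrecognized version spec: {spec!r}")
    return tuple(int(part) for part in match.groups())


def find_outdated(
    dependencies: dict[str, str], minimum_versions: dict[str, str]
) -> list[str]:
    """Return the names of dependencies declared below their required floor.

    `dependencies` mirrors the "dependencies" object of a package.json file.
    `minimum_versions` is supplied by the caller (assumption: one floor per
    package, which ignores projects pinned to older release lines).
    """
    flagged = []
    for name, minimum in minimum_versions.items():
        spec = dependencies.get(name)
        if spec is not None and parse_version(spec) < parse_version(minimum):
            flagged.append(name)
    return sorted(flagged)


# Placeholder package names and versions, for illustration only.
declared = {"example-framework": "^1.2.3", "example-renderer": "2.0.0"}
floors = {"example-framework": "1.2.4", "example-renderer": "2.0.0"}
print(find_outdated(declared, floors))
```

Failing the build when the returned list is non-empty turns "update immediately" into an enforced rule rather than a reminder.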

The Trust Wallet breach stemmed from a supply chain attack where leaked GitHub secrets allowed attackers to bypass the standard release process. What defensive layers can organizations implement to protect API keys and build pipelines from this kind of developer-centric compromise? Share a few specific examples.

The Trust Wallet incident is a textbook example of a modern supply chain attack, where the target isn’t the final product but the process that creates it. It’s a painful reminder that a single leaked secret can unravel the entire security of a release pipeline. The attackers got their hands on GitHub secrets, which gave them the Chrome Web Store API key. With that, they had the keys to the kingdom; they could upload malicious builds directly, completely sidestepping all internal reviews and controls. This kind of access is devastating. The fact that they had been preparing since at least December 8, 2025, shows a level of patience and planning that is deeply concerning.

To defend against this, you need a multi-layered, zero-trust approach to your development environment. First, eliminate long-lived, hardcoded secrets. Use a centralized secrets management solution that provides short-lived, dynamically generated credentials for build jobs. Second, enforce the principle of least privilege for API keys. A key used for uploading a build should not have permissions to do anything else, and its scope should be as narrow as possible. Third, mandate multi-person approval for critical actions, especially for production releases. This creates a human checkpoint that can’t be bypassed by a single compromised key or account. Finally, implement rigorous monitoring and anomaly detection on your build systems. You should be alerted if a build is initiated outside of normal hours, from an unusual IP address, or if it bypasses the standard review process.
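
The monitoring rules in the last step can be sketched as a simple check over build events. This is a rough illustration under stated assumptions: the `BuildEvent` fields, the working-hours window, and the alert names are all hypothetical, and a real pipeline would read these values from its CI system's audit log.

```python
from dataclasses import dataclass
from datetime import datetime
from ipaddress import ip_address, ip_network


@dataclass
class BuildEvent:
    """Hypothetical record of one release build."""
    started_at: datetime
    source_ip: str
    review_approved: bool


def build_alerts(
    event: BuildEvent,
    allowed_networks: list[str],
    work_start: int = 8,
    work_end: int = 19,
) -> list[str]:
    """Return alert names for the three conditions described above."""
    alerts = []
    # Build started outside the assumed working-hours window.
    if not (work_start <= event.started_at.hour < work_end):
        alerts.append("outside_normal_hours")
    # Build triggered from an address outside the known networks.
    address = ip_address(event.source_ip)
    if not any(address in ip_network(net) for net in allowed_networks):
        alerts.append("unusual_ip")
    # Build published without the standard review step.
    if not event.review_approved:
        alerts.append("review_bypassed")
    return alerts


event = BuildEvent(datetime(2025, 1, 1, 3, 0), "203.0.113.7", False)
print(build_alerts(event, ["10.0.0.0/8"]))
```

Any non-empty result would be routed to a person for review, which pairs naturally with the multi-person approval requirement.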

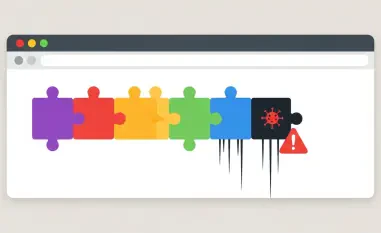

The DarkSpectre group compromised millions through malicious browser extensions, using techniques like steganography. How can users and enterprise security teams better vet browser extensions for hidden threats, and what red flags might indicate an extension is part of a large-scale surveillance operation?

DarkSpectre’s operation is staggering in its scale and sophistication, compromising over 8.8 million users over seven years. They’ve turned browser extensions into a perfect vehicle for persistent surveillance and fraud. Their use of steganography—hiding malicious JavaScript payloads inside harmless-looking PNG images—is particularly insidious. The extension can lie dormant for days before a hidden trigger extracts and executes the code, making it incredibly difficult to detect during a standard security review. It’s a ghost in the machine. This isn’t a single criminal enterprise; it’s a network of interconnected malware clusters like ShadyPanda and GhostPoster, each with its own objective, from affiliate fraud to corporate espionage.
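
One simple form of hiding data in an image is appending it after the PNG's final `IEND` chunk, where image viewers ignore it. The sketch below checks for that case only. Whether DarkSpectre used this exact placement is not stated here, and payloads embedded in pixel data or in ancillary chunks would not be caught, so treat it as one illustrative check rather than a detector for the campaign.

```python
import struct

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def trailing_bytes_after_iend(data: bytes) -> bytes:
    """Return any bytes that follow the IEND chunk of a PNG file.

    A PNG is an 8-byte signature followed by chunks, each laid out as
    4-byte big-endian length, 4-byte type, data, and a 4-byte CRC.
    CRCs are not verified in this sketch.
    """
    if not data.startswith(PNG_SIGNATURE):
        raise ValueError("not a PNG file")
    offset = len(PNG_SIGNATURE)
    while offset + 8 <= len(data):
        (length,) = struct.unpack(">I", data[offset:offset + 4])
        chunk_type = data[offset + 4:offset + 8]
        offset += 8 + length + 4  # chunk header, data, and CRC
        if chunk_type == b"IEND":
            return data[offset:]
    raise ValueError("no IEND chunk found")
```

A reviewer could run this over every image bundled with an extension and inspect any file that returns a non-empty result.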

For enterprise teams, the first step is to implement an allowlist for browser extensions. If it’s not on the list, employees can’t install it. This requires a vetting process where every extension is scrutinized for its permissions, developer reputation, and code. Look for red flags: Does a simple note-taking extension really need access to all your browsing data on every website? That’s a huge overreach. Users should be trained to ask that same question. They should also be wary of extensions pushed through malvertising or unofficial stores. The most telling sign of a large-scale operation is the sheer volume of legitimate-looking but ultimately generic extensions. DarkSpectre churned these out steadily, creating a vast portfolio that looked benign on the surface but was built for malicious purposes.
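
The allowlist and permission review described above can be sketched as a check over an extension's `manifest.json`. The `permissions`, `host_permissions`, and `content_scripts` keys and the `<all_urls>` pattern are standard manifest fields; the function names, the set of patterns treated as "broad", and the extension ID in the example are assumptions made for illustration.

```python
# Host patterns that grant access to every site (assumed list, not exhaustive).
BROAD_PATTERNS = {"<all_urls>", "*://*/*", "http://*/*", "https://*/*"}


def broad_host_access(manifest: dict) -> list[str]:
    """Return the all-sites patterns an extension manifest requests."""
    requested = list(manifest.get("permissions", []))
    requested += manifest.get("host_permissions", [])
    for script in manifest.get("content_scripts", []):
        requested += script.get("matches", [])
    return sorted({entry for entry in requested if entry in BROAD_PATTERNS})


def vet_extension(extension_id: str, manifest: dict, allowlist: set[str]) -> dict:
    """Combine the allowlist decision with the permission red flags."""
    return {
        "allowed": extension_id in allowlist,
        "broad_access": broad_host_access(manifest),
    }


# Hypothetical note-taking extension that asks for every site.
manifest = {"name": "Notes", "permissions": ["storage", "<all_urls>"]}
print(vet_extension("hypothetical-extension-id", manifest, allowlist=set()))
```

A note-taking extension that returns a non-empty `broad_access` list is exactly the overreach worth questioning before it reaches the allowlist.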

Threat actors are now abusing Microsoft Teams notifications for callback phishing and vishing attacks to deploy malware. Beyond email filters, what novel security awareness training and technical controls can effectively counter these attacks that exploit trust in collaboration platforms? Please provide a few actionable strategies.

This is a brilliant and dangerous evolution of phishing. By using Microsoft Teams, attackers are piggybacking on a trusted notification channel. The message comes from a legitimate Microsoft sender address, so it sails right past email filters and, more importantly, the user’s inherent suspicion. People are conditioned to trust notifications from their primary work tools. When they see a fake invoice or payment notice in a Teams group, their first instinct isn’t skepticism; it’s to react. Being prompted to call a number feels more immediate and legitimate than clicking a suspicious link in an email.

To counter this, security awareness training has to be updated immediately. We must run phishing simulations that use Teams, Slack, and other collaboration tools, not just email. We need to teach employees to be skeptical of any unsolicited message that creates a sense of urgency, regardless of the platform. A key strategy is to train them to never use contact information provided within a suspicious message. Instead, they should independently verify the request through an official, known channel. On the technical side, organizations can implement controls to monitor for anomalous activity within Teams, such as the mass creation of new groups with external users or names containing keywords like “invoice” or “payment.” For the vishing component, where users are tricked into installing tools like Quick Assist, endpoint detection and response (EDR) solutions should be configured to flag and block the execution of remote support tools initiated from suspicious contexts.
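
The group-monitoring control can be sketched as a filter over group-creation events. This is a minimal illustration: the `GroupCreated` record, its fields, and the burst threshold are hypothetical, and in practice the events would come from the collaboration platform's audit log rather than a hand-built list.

```python
from collections import Counter
from dataclasses import dataclass

SUSPICIOUS_KEYWORDS = ("invoice", "payment")


@dataclass
class GroupCreated:
    """Hypothetical audit record for a newly created group."""
    name: str
    creator: str
    has_external_members: bool


def flag_groups(
    events: list[GroupCreated],
    keywords: tuple[str, ...] = SUSPICIOUS_KEYWORDS,
    burst_threshold: int = 5,
) -> list[str]:
    """Flag groups with external members that match a keyword or a burst."""
    external = [event for event in events if event.has_external_members]
    per_creator = Counter(event.creator for event in external)
    flagged = []
    for event in external:
        keyword_hit = any(word in event.name.lower() for word in keywords)
        burst = per_creator[event.creator] >= burst_threshold
        if keyword_hit or burst:
            flagged.append(event.name)
    return flagged


events = [
    GroupCreated("Invoice 4471 overdue", "a@example.com", True),
    GroupCreated("Team lunch", "b@example.com", True),
    GroupCreated("Payment desk", "c@example.com", False),
]
print(flag_groups(events))
```

Keyword matching alone will produce false positives in finance teams, so the threshold and keyword list would need tuning per organization.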

We’re seeing that AI-powered phishing has a significantly higher click-through rate, while vendors admit that issues like prompt injection may never be fully solved. How is the security industry adapting to both defend AI systems and counter AI-enhanced attacks? Describe the biggest challenge you see.

The statistics are stark: AI-automated phishing emails are achieving 54% click-through rates, which is 4.5 times more effective than standard attempts and implies a baseline of roughly 12%. This is a seismic shift. At the same time, even a leader like OpenAI admits that prompt injection, a fundamental way to trick AI models, is unlikely to ever be fully “solved.” This creates a two-front war for the security industry. On one front, we are trying to secure the AI systems themselves, using techniques like adversarial training and system-level safeguards to make them more resilient to manipulation. This is an ongoing, reactive process: we find a new attack, we train the model against it, and the cycle continues.

On the other front, we are scrambling to defend against attacks supercharged by AI. The biggest challenge here is scale and personalization. AI allows threat actors to craft perfectly fluent, context-aware, and highly personalized phishing lures for thousands of targets simultaneously. This nullifies the classic “spot the typo” advice we’ve given for years. The industry is responding by developing AI-powered defenses that can analyze communication context, tone, and relationships to spot anomalies that a human might miss. But it’s an arms race. The fundamental challenge is that attackers are using AI to exploit human psychology, while defenders are using AI to model machine behavior. The human element remains the most unpredictable and vulnerable part of the equation.

The Silver Fox group targeted users in India with tax-themed lures to deploy the modular ValleyRAT trojan. How do attackers tailor these remote access trojans and phishing campaigns to specific regions, and what makes a modular architecture so effective for post-compromise activities?

Attackers like Silver Fox are incredibly savvy marketers. They understand that localization is key to a successful campaign. Using income tax-themed lures in India is a perfect example. It’s timely and relevant, and it taps into a common source of anxiety while mimicking the kind of official communication people are expecting to receive. This cultural and regional tailoring dramatically increases the likelihood that a victim will open a malicious PDF or click a link. They’re not just translating words; they’re translating intent and exploiting local contexts. The campaign’s tracking panel showed that while many clicks originated from places like China and the U.S., the campaign itself was specifically crafted for an Indian audience.

The modular architecture of a trojan like ValleyRAT, which is a variant of the infamous Gh0st RAT, is what makes it so dangerous after the initial compromise. Instead of deploying a single, large piece of malware packed with every possible malicious feature, they start with a small, lightweight core implant. This initial payload is designed to do one thing well: establish a foothold and call back to its control server. Once that connection is made, the attackers can deploy additional “plugins” or modules as needed. If they want to log keystrokes, they send the keylogger module. If they need to steal credentials, they send that specific tool. This approach makes the malware harder to detect, as the initial file is less suspicious, and it gives the attackers immense flexibility to adapt their attack based on what they find on the compromised system.

What is your forecast for how attackers will abuse user trust in everyday digital tools?

My forecast is that the line between legitimate and malicious will become almost imperceptibly thin, driven by the abuse of trusted platforms and enhanced by AI. We’re moving away from the era of obviously fake login pages and poorly worded emails. The future of attacks lies in the platforms we use without a second thought: collaboration tools like Teams, essential developer components like React, and ubiquitous browser extensions. Attackers will no longer need to break down the front door when we are willing to invite them in, disguised as a helpful plugin or an urgent notification from IT. They will leverage AI not just to create flawless phishing lures, but to automate social engineering within these trusted ecosystems, impersonating colleagues or managers with terrifying accuracy. The core battleground for security is shifting from the network perimeter to the user’s perception of reality, and the greatest vulnerability will be our instinct to trust the tools we depend on every day.