With years of experience defending multinational corporations from digital threats, Malik Haidar has a unique vantage point on the intersection of business strategy and cybersecurity. We sat down with him to dissect the recent Marquis Software Solutions breach, a case that serves as a powerful lesson in modern cyber risk. Our discussion explored the dangerous ripple effect of a single vendor compromise, the critical importance of foundational security measures, the anatomy of a typical network intrusion, and the high-stakes, often gut-wrenching decisions that follow a major incident.

The Marquis breach impacted at least 74 financial institutions through a single vendor, which an expert called a “blast radius.” Can you explain how this third-party concentration risk works in fintech and describe a few key controls a bank should verify before trusting a vendor with sensitive data?

That “blast radius” is the perfect term for it. In today’s financial ecosystem, it’s incredibly efficient for banks to outsource specialized functions to fintech providers like Marquis. But that efficiency creates a massive single point of failure. You have one vendor sitting in the data flow for dozens of banks, and when that vendor gets hit, the shockwave travels instantly across the entire system. It’s no longer just one company’s problem; it’s a systemic event affecting over 780,000 people. Before a bank even considers a partnership, it must move beyond trust to verification. This means demanding evidence, not just promises. I’d want to see recent, independent penetration test results, audit reports confirming that multi-factor authentication is enforced on all critical systems, and clear, contractual SLAs for patching critical vulnerabilities. It’s about treating your vendor’s security as an extension of your own.
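To make the "evidence, not promises" idea concrete, here is a minimal Python sketch of how a bank's risk team might record and check the three items Haidar lists. Everything in it is an illustrative assumption rather than an industry standard or anything Marquis or its customers use: the `VendorEvidence` record, the `evidence_gaps` function, and the 365-day and 30-day thresholds are all hypothetical.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional


@dataclass
class VendorEvidence:
    """Hypothetical record of the evidence a vendor has actually supplied."""
    vendor: str
    last_pentest: Optional[date]            # date of latest independent pen test
    audit_confirms_mfa: bool                # audit report attests MFA on critical systems
    critical_patch_sla_days: Optional[int]  # contractual SLA for critical patches


def evidence_gaps(
    ev: VendorEvidence,
    today: date,
    max_pentest_age_days: int = 365,  # assumed threshold, not a regulation
    max_patch_sla_days: int = 30,     # assumed threshold, not a regulation
) -> List[str]:
    """Return the list of missing or stale evidence items for one vendor."""
    gaps = []
    if ev.last_pentest is None:
        gaps.append("no independent penetration test on file")
    elif (today - ev.last_pentest).days > max_pentest_age_days:
        gaps.append("penetration test is out of date")
    if not ev.audit_confirms_mfa:
        gaps.append("no audit evidence that MFA is enforced")
    if ev.critical_patch_sla_days is None:
        gaps.append("no contractual SLA for critical patches")
    elif ev.critical_patch_sla_days > max_patch_sla_days:
        gaps.append("critical patch SLA is too slow")
    return gaps


if __name__ == "__main__":
    example = VendorEvidence("ExampleVendor", date(2023, 3, 1), False, None)
    for gap in evidence_gaps(example, today=date(2025, 1, 1)):
        print(gap)
```

The point of the sketch is that an empty list has to be earned with documents: a vendor with no evidence on file fails every check by default.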

An expert noted Marquis’s remediation list—patching firewalls and enabling MFA—was “basic security hygiene.” What does this list suggest about their prior security posture, and could you walk me through the top three controls from that list that would have made the biggest difference in this attack?

When I see a remediation list like that after a breach, it’s a huge red flag. It tells me their security posture was likely more reactive than proactive. These aren’t advanced, sophisticated defenses; they’re the foundational blocking and tackling of cybersecurity. It’s like finding out a bank built a vault but forgot to lock the front door. If I had to pick the top three controls that would have changed the outcome, number one is enabling MFA on all firewall and VPN accounts. That alone could have stopped the attackers cold, even if they had a valid password. Second, ensuring all firewall devices are fully patched. The entire intrusion started by exploiting a known vulnerability; timely patching would have closed that door completely. And third, deleting unused accounts. These dormant accounts are a goldmine for attackers, allowing them to move silently through a network. Removing them drastically shrinks the internal attack surface and makes it much harder for an intruder to escalate their privileges.
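The three controls above lend themselves to a simple recurring check. The Python sketch below is one hedged way to express it, assuming an organization can export its firewall and VPN accounts and its device firmware versions into plain records. The `Account` and `Firewall` types, the `audit` function, the 90-day dormancy threshold, and the version numbers are all hypothetical and are not taken from Marquis or from any SonicWall product.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import List, Tuple


@dataclass
class Account:
    name: str
    mfa_enabled: bool
    last_login: date


@dataclass
class Firewall:
    hostname: str
    firmware: Tuple[int, int, int]  # (major, minor, patch), hypothetical scheme


DORMANT_AFTER = timedelta(days=90)  # assumed threshold for an "unused" account


def audit(
    accounts: List[Account],
    firewalls: List[Firewall],
    min_firmware: Tuple[int, int, int],
    today: date,
) -> List[str]:
    """Flag accounts without MFA, dormant accounts, and unpatched firewalls."""
    findings = []
    for acct in accounts:
        if not acct.mfa_enabled:
            findings.append(f"{acct.name}: MFA not enabled")
        if today - acct.last_login > DORMANT_AFTER:
            findings.append(f"{acct.name}: dormant, candidate for deletion")
    for fw in firewalls:
        # Tuples compare element by element, so (7, 0, 1) < (7, 1, 0).
        if fw.firmware < min_firmware:
            findings.append(f"{fw.hostname}: firmware below patched baseline")
    return findings


if __name__ == "__main__":
    accounts = [
        Account("vpn-admin", False, date(2025, 8, 1)),
        Account("old-contractor", True, date(2024, 1, 15)),
    ]
    firewalls = [Firewall("fw-edge-1", (7, 0, 1))]
    for line in audit(accounts, firewalls, (7, 1, 0), date(2025, 8, 14)):
        print(line)
```

A check like this only reports findings; closing them still depends on someone owning each account and device.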

The breach involved a vulnerability in a SonicWall firewall, a product often targeted by groups like the Akira ransomware gang. Could you detail the typical attack chain, from exploiting a firewall to data exfiltration, and explain why threat actors often steal data even if their primary goal is encryption for a ransom?

It’s a classic playbook we see time and time again. The attack begins with automated scanners scouring the internet for unpatched, vulnerable devices like this SonicWall firewall. Once they find one, they use a public exploit to gain that initial foothold on the network perimeter. From there, it’s a land-and-expand operation. They move laterally, often using those old, forgotten accounts, searching for sensitive data stores. The goal is to find the crown jewels—in this case, files full of names, Social Security numbers, and bank details. The reason they steal the data before encrypting anything is leverage. It’s a double extortion tactic. The first threat is, “Pay us to get your systems back online.” The second, more sinister threat is, “Pay us, or we will leak all of this highly sensitive customer data to the world.” This puts immense pressure on the victim because now they aren’t just dealing with operational downtime; they’re facing catastrophic reputational damage, regulatory fines, and class-action lawsuits.

The intrusion happened in mid-August, but the investigation concluded in late October. Based on your experience, what specific forensic and legal challenges can stretch an incident response timeline like this, and what are the critical first steps a company must take within the initial 48 hours of detection?

That two-month timeline from intrusion to conclusion doesn’t surprise me at all. The first 48 hours are about containment, or what we call “stopping the bleeding.” You have to isolate the affected systems to prevent the attackers from moving further, just as Marquis reportedly did. But after that, the painstaking forensic work begins. Investigators have to meticulously sift through terabytes of log data and system images to reconstruct the entire attack, identifying precisely which files were accessed or copied. This is a slow, methodical process. Layered on top of that is the legal nightmare. With at least 74 banks involved, you’re navigating a complex web of breach notification laws across multiple states, each with different requirements and deadlines. Coordinating the response and legal notifications for dozens of different business customers is a massive logistical challenge that can easily stretch an investigation out for months.

A filing suggested a ransom was paid to stop a data leak, though this is unconfirmed. What are the key business and ethical factors a board must weigh when debating a ransom payment, and can you provide an example of how that decision could backfire even if the payment is made?

It is one of the most agonizing decisions a board will ever have to make. From a purely pragmatic business standpoint, paying the ransom can feel like the fastest path to restoring operations and, more importantly, preventing a data leak that could destroy decades of customer trust. The pressure from a business continuity perspective is immense. Ethically, however, you’re funding a criminal enterprise and encouraging future attacks. The biggest risk is that payment is no guarantee of a positive outcome. You are negotiating with criminals who have no honor. I’ve seen cases where a company pays the ransom, and the attackers leak the data anyway. In other instances, the decryption key they provide is faulty and corrupts the data, or they return months later demanding a second payment because they know the company is willing to pay. Paying the ransom essentially paints a target on your back for every other threat actor out there.

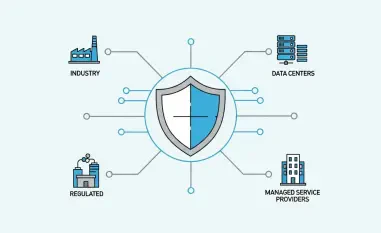

What is your forecast for third-party risk in the financial sector?

I believe this problem will get worse before it gets better. The financial industry’s dependency on a concentrated number of specialized fintech vendors is only accelerating. The efficiency gains are just too compelling to ignore. Because of this, I forecast that regulators will be forced to step in with much more stringent and prescriptive third-party risk management rules. We’re going to move away from the current model of annual questionnaires and box-checking. Instead, regulators will likely start mandating that banks conduct continuous, evidence-based security assessments of their critical vendors. The “blast radius” from incidents like the Marquis breach is simply too large and too systemic for the industry to self-regulate effectively.