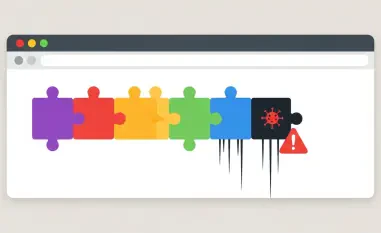

In an era when artificial intelligence is advancing faster than even bold forecasts predicted, a pressing question emerges: are enterprises falling behind in securing these powerful tools against emerging threats? Much like the internet boom of the late 1990s, when Netscape and Microsoft fought the Browser Wars by shipping features faster than they secured them, today’s AI revolution is outpacing IT teams’ ability to defend against sophisticated risks. The progression from the GPT-3.5 model behind ChatGPT’s late-2022 launch to GPT-5 in under three years illustrates this breakneck pace, and traditional security measures are struggling to adapt. As AI systems gain the ability to impersonate, manipulate, and attack at scale, the stakes have never been higher. Enterprises face a critical juncture: modernize detection strategies now, or risk devastating cyberattacks that move as fast as AI itself. This parallel with past technological races demands a closer look at whether history’s security oversights are about to repeat on a new digital battleground.

1. Historical Echoes of Rapid Tech Growth

The staggering speed of AI development today mirrors the frenzied internet “gold rush” of the late 1990s, a period when innovation often came at the expense of user protection. During the original Browser Wars, Microsoft and Netscape raced to dominate the market, and Microsoft’s Internet Explorer 6, released in 2001, became as infamous for its security flaws as for its features. That era taught a harsh lesson: prioritizing speed over safety creates vulnerabilities that haunt users for years. By some measures, AI is now advancing even faster than Moore’s Law, the decades-old observation that the number of transistors on a chip doubles roughly every two years; the compute used to train frontier models, for instance, has historically doubled every few months. This acceleration raises concerns about whether enterprises are prepared for the fallout of unchecked innovation. As AI tools become integral to business operations, the echoes of past mistakes grow louder, calling for a reevaluation of how security is built into this rapidly evolving landscape.

Today’s AI advancements, exemplified by ChatGPT’s rapid succession of model versions, highlight a gap between technological capability and enterprise readiness. AI’s ability to perform complex tasks, from generating content to automating processes, is matched by a potential for misuse that was unimaginable during the first internet boom. Whereas the flaws of the Browser Wars primarily affected individual users, AI-driven threats can target entire systems, impersonating trusted entities or launching coordinated attacks at scale. IT teams, often bound by outdated frameworks, struggle to keep pace with these dynamic risks. The historical parallel is a stark warning: without proactive measures, the rush to adopt AI could replicate the security disasters of the past with far graver consequences. Meeting this challenge requires acknowledging the scale of the problem and learning from history to build robust defenses alongside innovation.

2. Shortcomings of Traditional Cybersecurity Tools

Traditional cybersecurity measures, designed to counter known threats with static methods, are proving inadequate against adaptive AI-driven attacks. These legacy tools rely on recognizing familiar patterns or signatures of malware, a strategy that falters when attackers use AI to change tactics in real time. As attackers generate ever-changing threats, enterprises find their defenses too slow to respond. The mismatch exposes organizations to a range of dangers, from highly targeted phishing schemes to zero-day exploits that abuse vulnerabilities for which no patch yet exists. Because legacy systems cannot anticipate or adapt to novel threats, current security postures carry a critical weakness. Without a shift to more dynamic solutions, businesses remain at the mercy of adversaries who can outmaneuver conventional safeguards with alarming ease.

Among the many risks posed by AI, deepfake technology is a particularly urgent concern because it can deceive at massive scale. By cloning voices, faces, and even communication styles, generative AI can produce content convincing enough to fool not only IT teams but also high-ranking officials. In July 2025, for example, an impostor used AI to impersonate U.S. Secretary of State Marco Rubio, sending fabricated text messages, Signal messages, and voicemails to foreign ministers and U.S. officials. Industry reports indicate a 148% surge in AI impersonation scams over the past year, signaling an escalating threat to enterprise integrity. The incident shows how far deepfake capabilities have advanced and how quickly they are being weaponized. As these deceptions grow more sophisticated, security protocols that can detect and mitigate hyper-realistic impersonation become increasingly urgent.

3. Exploiting AI’s Flexibility as a Weapon

Attackers are turning AI’s inherent adaptability into weapons that outpace traditional enterprise defenses. Security researchers have built open-source tools such as Bishop Fox’s Broken Hill, which automatically generates adversarial prompts designed to bypass a model’s guardrails, and have documented techniques such as ConfusedPilot, which manipulates retrieval-augmented AI assistants by planting malicious content in the documents they retrieve. These resources were created to expose vulnerabilities, but malicious actors are repurposing them to get around built-in guardrails and to build autonomous attack tools that succeed repeatedly against AI models. One of AI’s strengths thus becomes a profound liability, because adversaries can craft attacks that evolve faster than most security measures can respond. The gap between offensive innovation and defensive capability widens daily, leaving enterprises exposed to breaches that exploit the very technology they rely on for progress.

Legacy cybersecurity practices, particularly signature-based tools, are ill-equipped to handle dynamic AI-driven threats such as self-rewriting malware. Because these tools recognize only previously encountered threats, they miss attacks that continuously morph to evade detection. Even platforms designed with security in mind are not immune, as attackers find inventive ways to push AI models beyond their intended boundaries. This persistent creativity among threat actors reveals a fundamental flaw in relying on static, reactive defenses. Rapidly evolving attack methods demand protective strategies that anticipate new forms of exploitation rather than merely react to them. Without that shift, enterprises risk staying one step behind in a landscape where AI’s potential for harm grows alongside its potential for good.
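
The core weakness of exact signature matching fits in a few lines. The Python sketch below is a simplification that assumes signatures are SHA-256 hashes of previously seen payloads (production engines also use byte patterns and heuristics). The payloads are harmless placeholder strings, and `KNOWN_BAD_HASHES`, `is_flagged`, and `mutate` are hypothetical names chosen for illustration. Appending a few bytes, as self-rewriting code might, yields a hash the database has never seen, so every variant slips through.

```python
import hashlib


def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of a payload as a hex string."""
    return hashlib.sha256(data).hexdigest()


# Hypothetical signature database: hashes of payloads seen before.
# The "payload" is a harmless placeholder string, not real malware.
KNOWN_SAMPLE = b"example-payload-v1"
KNOWN_BAD_HASHES = {sha256_hex(KNOWN_SAMPLE)}


def is_flagged(payload: bytes) -> bool:
    """Exact-match signature check: flags only byte-identical payloads."""
    return sha256_hex(payload) in KNOWN_BAD_HASHES


def mutate(payload: bytes, generation: int) -> bytes:
    """Stand-in for self-rewriting code: append junk bytes that change the
    hash (in real polymorphic malware, without changing the behavior)."""
    return payload + b"#" + str(generation).encode()


if __name__ == "__main__":
    print(is_flagged(KNOWN_SAMPLE))  # True: the original sample matches
    for gen in range(1, 4):
        variant = mutate(KNOWN_SAMPLE, gen)
        print(gen, is_flagged(variant))  # False: each variant evades the check
```

This is why the behavior-based approaches discussed in section 4 judge what an entity does rather than the exact bytes it contains.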

4. Building Defenses with AI-Native Strategies

AI’s power as a tool for attack can also make it a formidable asset for defense, and IT security teams are adopting new strategies to counter threats in real time. One approach deploys AI-driven User and Entity Behavior Analytics (UEBA), which learns the typical behavior of users, devices, applications, and AI models. When an anomaly occurs, such as an unexpected midnight login from an unfamiliar location or a bot reading data at unusual speed, the system flags it immediately. Unlike traditional tools that hunt for known malware signatures, UEBA can surface zero-day exploits and polymorphic malware that change too quickly for older methods, because it looks for deviations from normal behavior rather than for specific code. In addition, continuous red-teaming of AI chatbots and retrieval-augmented generation (RAG) systems, through human or automated simulations of attacker tactics, uncovers vulnerabilities before adversaries can exploit them. These proactive measures represent a significant step forward in securing AI environments.
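
The baseline-and-deviation idea behind UEBA can be sketched in Python as follows. This is a minimal illustration, not how any particular UEBA product works: it assumes each event records an entity (user, device, service account, or AI agent), the hour of activity, a location, and a data-access rate. The `Event` fields, the 3.0 z-score threshold, and the function names are hypothetical; real systems model many more signals with statistical or machine-learning models.

```python
from dataclasses import dataclass, field
from statistics import mean, pstdev


@dataclass
class Event:
    entity: str             # user, device, service account, or AI agent
    hour: int               # hour of day, 0-23
    location: str           # hypothetical site or country code
    records_per_min: float  # data-access rate


@dataclass
class Baseline:
    hours: set[int] = field(default_factory=set)
    locations: set[str] = field(default_factory=set)
    rates: list[float] = field(default_factory=list)


def build_baselines(history: list[Event]) -> dict[str, Baseline]:
    """Learn each entity's normal hours, locations, and access rates."""
    baselines: dict[str, Baseline] = {}
    for ev in history:
        b = baselines.setdefault(ev.entity, Baseline())
        b.hours.add(ev.hour)
        b.locations.add(ev.location)
        b.rates.append(ev.records_per_min)
    return baselines


def score(ev: Event, baselines: dict[str, Baseline], z_threshold: float = 3.0) -> list[str]:
    """Return the reasons an event deviates from its entity's baseline."""
    b = baselines.get(ev.entity)
    if b is None:
        return ["unknown entity"]
    reasons: list[str] = []
    if ev.hour not in b.hours:
        reasons.append(f"unusual hour {ev.hour}")
    if ev.location not in b.locations:
        reasons.append(f"new location {ev.location}")
    mu = mean(b.rates)
    sigma = pstdev(b.rates) or 1e-9  # avoid dividing by zero for constant rates
    z = (ev.records_per_min - mu) / sigma
    if z > z_threshold:
        reasons.append(f"access rate z-score {z:.1f}")
    return reasons


if __name__ == "__main__":
    history = [Event("alice", h, "NYC", r)
               for h, r in [(9, 10.0), (10, 12.0), (14, 11.0), (16, 13.0)]]
    baselines = build_baselines(history)
    print(score(Event("alice", 10, "NYC", 12.5), baselines))  # []
    # Midnight, unfamiliar location, bot-like read rate: three reasons flagged
    print(score(Event("alice", 0, "LAG", 500.0), baselines))
```

In practice, baselines would be refreshed continuously and the individual reasons combined into a risk score; this sketch keeps only the deviation-from-baseline logic.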

To further strengthen defenses, enterprises are deploying decoy large language models (LLMs) and RAG endpoints, often called honeypot models, to trap attackers. These decoys mimic real systems without exposing sensitive data, letting IT teams gather intelligence on adversarial methods while production systems stay safe. Another critical strategy is mandatory guardrail testing before AI deployment, which ensures agents operate in pre-assessed environments with strict access controls and data tagging. This mirrors penetration testing for software and aligns with the practices in NIST’s AI Risk Management Framework. Finally, ongoing monitoring of deployed models, using chain-of-thought (CoT) monitoring and anomaly detection tools, helps catch data drift early, before subtle shifts become major breaches. Together, these AI-native approaches form a robust framework for countering the sophisticated threats emerging in this fast-evolving landscape.
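
The paragraph does not say how drift is measured. One common choice, swapped in here for illustration, is the Population Stability Index (PSI), which compares how a feature’s values fall into bins in a baseline window versus a recent window: PSI = sum over bins of (a_i - e_i) * ln(a_i / e_i), where e_i and a_i are the baseline and current shares of bin i. The Python sketch below assumes a single numeric feature (for example, prompt length or a retrieval score) and equal-width bins; the function names and the 0.25 alert level, a frequently cited rule of thumb rather than a standard, are assumptions.

```python
import bisect
import math


def bin_edges(baseline: list[float], n_bins: int = 10) -> list[float]:
    """Equal-width bin edges spanning the baseline's observed range."""
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / n_bins or 1.0  # avoid zero-width bins for constant data
    return [lo + i * width for i in range(n_bins + 1)]


def proportions(values: list[float], edges: list[float], eps: float = 1e-6) -> list[float]:
    """Share of values per bin; out-of-range values go to the first or last bin."""
    n = len(edges) - 1
    counts = [0] * n
    for v in values:
        i = bisect.bisect_right(edges, v) - 1
        counts[min(max(i, 0), n - 1)] += 1
    # eps keeps the logarithm finite when a bin is empty
    return [max(c / len(values), eps) for c in counts]


def psi(baseline: list[float], current: list[float], n_bins: int = 10) -> float:
    """Population Stability Index of `current` relative to `baseline`."""
    edges = bin_edges(baseline, n_bins)
    expected = proportions(baseline, edges)
    actual = proportions(current, edges)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))


DRIFT_ALERT = 0.25  # assumed alert level (common rule of thumb)

if __name__ == "__main__":
    baseline = [float(i % 100) for i in range(1000)]
    shifted = [x + 50 for x in baseline]  # simulated drift in a model input
    print(psi(baseline, baseline))  # 0.0: identical distributions
    print(psi(baseline, shifted) > DRIFT_ALERT)  # True: raise a drift alert
```

Each term of the sum is non-negative, so PSI is zero only when the binned distributions match, which makes it easy to alert on a single threshold per monitored feature.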

5. Gaining an Edge with Speed and Trust

Heavily regulated industries such as insurance and financial services face heightened risk from AI-driven attacks while regulators and customers demand rapid, accurate responses. These sectors are prime targets because of the sensitivity of their data and the high stakes of any breach. AI-powered threat detection can help mitigate these risks by identifying and neutralizing threats before they escalate. By containing potential disruptions, security teams not only protect critical operations but also build public trust, an invaluable asset in competitive markets. Responding swiftly and effectively to emerging dangers positions organizations as leaders in their fields, turning security into a tangible business advantage rather than a mere compliance requirement.

As traditional detection tools buckle under the speed and complexity of AI-powered attacks, a fundamental overhaul of security practices becomes essential. Enterprises cannot afford to repeat the mistakes of the early internet era, when browser vulnerabilities led to widespread exploitation. Organizations that embed security into every phase of AI development will set the standard for resilience and reliability in their industries; those that fail to adapt will be forced to react to breaches at a pace dictated by AI’s relentless advancement. Competition now hinges on adaptability: speed in detecting and responding to threats, coupled with trust earned through robust protection, defines success. Prioritizing both lets businesses not only survive but thrive on this new technological frontier.

6. Reflecting on Past Lessons for Future Safety

Looking back, the security lapses of the early internet, particularly those of Internet Explorer 6 in the era of the original Browser Wars, were costly reminders of what happens when innovation outpaces protection. Those oversights left vulnerabilities that persisted for years, affecting users and businesses alike. In the rush to dominate the market, safety was often sidelined, a mistake that must not be repeated in the AI era. The parallels between then and now call for vigilance, because AI’s capacity for widespread harm means the consequences of neglecting security today could dwarf those of the past. These historical missteps point to a clear directive: proactive defense is non-negotiable for preventing catastrophic outcomes.

Moving forward, enterprises must modernize their security frameworks to match the speed of AI advancement and avoid the pitfalls of the past. Implementing AI-native strategies and embedding robust protections from the outset are imperative against cyberattacks that operate at unprecedented velocity. Aligning with established frameworks, such as NIST’s AI Risk Management Framework, offers a roadmap for building systems that can withstand evolving threats. By learning from earlier errors and treating security as a core component of technology adoption, organizations can establish themselves as pioneers of a safer digital landscape. The path ahead demands continuous innovation in defense, guided by the lessons of history, to meet the unique challenges posed by AI’s rapid rise.