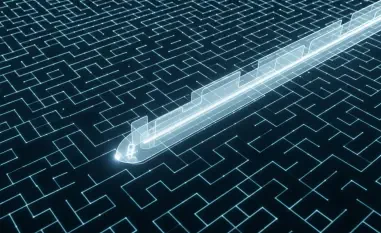

The very code that promises to build the future is now being written with the help of automated assistants that can inadvertently open doors for sophisticated digital adversaries, transforming the software supply chain into the modern era’s most critical battleground. This evolution marks a pivotal shift in cybersecurity, where the greatest threats no longer hammer at the gates but are invited inside, hidden within the trusted tools and components developers use every day. The journey from targeted, nation-state attacks to automated, AI-generated vulnerabilities reveals a landscape where the speed of innovation has become both a primary asset and a profound liability, forcing a necessary and urgent reckoning with the hidden risks embedded deep within digital infrastructure.

The Double-Edged Sword of Modern Software Development

At the heart of today’s technological progress lies a paradox born from Silicon Valley’s foundational ethos: “move fast and break things.” This philosophy, which champions rapid iteration and market dominance, has undeniably fueled unprecedented innovation. However, it has also cultivated a development culture where security is often an afterthought, a hurdle to be cleared rather than a foundational principle. In the relentless pursuit of speed, engineering teams have come to rely on a vast ecosystem of third-party components, from open-source libraries to cloud services, to accelerate timelines. This reliance has created intricate and often opaque software supply chains where a single compromised element can trigger a catastrophic chain reaction, turning a culture of speed into a source of systemic risk.

This complex web of dependencies means the tools developers embrace as productivity enhancers are increasingly dual-use. An open-source library that solves a common problem can also contain a hidden flaw, and a generative AI coding assistant that writes boilerplate code in seconds can also introduce subtle but critical vulnerabilities. These helpers have become the new frontier for attackers, who recognize that it is far more efficient to poison the well than to attack thousands of individual targets. As a result, the very architecture of modern software development, designed for efficiency and collaboration, has inadvertently created a standing entry point for those with malicious intent. The helper in the code is now a potential doorway for hackers.

Tipping Points in Supply Chain Security

For years, the risk lurking within the software supply chain was a known but often deprioritized issue, a theoretical concern overshadowed by more immediate threats like ransomware and phishing. While attempts to compromise foundational software are not new—a notable effort to insert a backdoor into the Linux kernel was caught back in 2003—it took a series of high-profile crises to elevate the threat to a boardroom-level concern. These events served as a brutal wake-up call, demonstrating in stark terms how vulnerable even the most well-defended organizations were to attacks originating from their trusted suppliers.

The first seismic shock was the 2020 SolarWinds breach, a masterclass in supply chain compromise executed by a Russian nation-state actor. Attackers infiltrated the build process for SolarWinds’ Orion Platform, a widely used IT management tool, and injected malicious code into a routine software update. This Trojan horse was then delivered to approximately 18,000 customers, including numerous U.S. federal agencies and Fortune 500 companies, although the attackers followed up against a much smaller subset of them. Because the update was digitally signed and arrived through the vendor’s normal distribution channel, it passed straight through perimeter defenses and gave the attackers a trusted foothold deep inside target networks. SolarWinds became the definitive case study, proving that an organization’s security posture is only as strong as its least secure vendor.

Barely a year later, the Log4Shell vulnerability (CVE-2021-44228) delivered a different kind of shock. It was not a targeted, malicious implant but a critical remote code execution flaw discovered in Log4j, a ubiquitous open-source logging library used in countless Java applications. The vulnerability was easy to exploit and existed in hundreds of millions of devices, from enterprise servers to industrial control systems. The disclosure triggered a global fire drill as organizations scrambled to identify where the vulnerable library was embedded within their vast software ecosystems. The Log4Shell incident highlighted the danger of widespread dependency on a single component and exposed the critical lack of visibility many organizations have into their own software, particularly in operational technology (OT) environments where patching legacy systems is often a monumental challenge.

The AI Frontier and its Novel Attack Vectors

As the industry grapples with the lessons of SolarWinds and Log4Shell, the next evolution of supply chain risk is already unfolding on the AI frontier. Generative AI and code assistants have been adopted at a breathtaking pace, fundamentally changing how software is written. A recent GitHub survey found that 97% of responding developers have used AI coding tools at work at some point, and many now embrace a practice sometimes called “vibe coding,” where they rely on AI to generate significant portions of their applications based on high-level prompts. While these tools dramatically boost productivity, their overzealous adoption is introducing a new and insidious class of vulnerabilities.

This new risk stems from a phenomenon known as AI “hallucination,” where a model confidently generates plausible but factually incorrect information. In the context of code generation, this manifests as AI assistants recommending software packages or libraries that do not actually exist. This is not merely a functional bug; it is a security flaw that threat actors have learned to weaponize. By creating a gap between a developer’s expectation and reality, AI hallucinations create a perfect opportunity for malicious intervention, turning a trusted automated assistant into an unwitting accomplice.

This vulnerability has given rise to an attack technique dubbed “slopsquatting.” Malicious actors proactively use AI models to discover these hallucinated, non-existent package names. They then register malicious software under those exact names in public repositories like the Python Package Index (PyPI) or the npm registry. An unsuspecting developer, trusting the output of their AI assistant, searches for the recommended package, finds the attacker’s malicious version, and integrates it directly into their codebase. This method is exceptionally deceptive because it exploits the developer’s trust in the AI tool, bypassing traditional security warnings and reviews.
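
One way to blunt this attack is to treat every AI-suggested dependency as untrusted until a human has vetted it. The following is a minimal sketch of that idea, not a complete defense. It assumes a hypothetical team-maintained allowlist (`APPROVED`) and hypothetical helper names, and it only handles simple `requirements.txt` lines. Names are normalized in the way PEP 503 describes. Note that it deliberately checks against an allowlist and not against registry existence, because in a slopsquatting attack the malicious package does exist.

```python
import re
from typing import Optional

# Hypothetical allowlist of dependencies the team has already reviewed.
APPROVED = {"requests", "numpy", "ccxt"}


def normalize(name: str) -> str:
    """Lowercase the name and collapse runs of '-', '_' and '.' (PEP 503)."""
    return re.sub(r"[-_.]+", "-", name).lower()


def requirement_name(line: str) -> Optional[str]:
    """Extract the package name from a simple requirements.txt line."""
    line = line.split("#", 1)[0].strip()   # drop comments and whitespace
    if not line or line.startswith("-"):    # skip blanks and pip options
        return None
    match = re.match(r"[A-Za-z0-9][A-Za-z0-9._-]*", line)
    return normalize(match.group(0)) if match else None


def unvetted(requirements: str, approved: set = APPROVED) -> list:
    """Return the sorted package names that are not on the allowlist."""
    allowed = {normalize(name) for name in approved}
    names = (requirement_name(line) for line in requirements.splitlines())
    return sorted({name for name in names if name and name not in allowed})


# Dependencies as an assistant might suggest them (illustrative input).
ai_suggested = """
requests>=2.31
NumPy
ccxt-mexc-futures
"""
print(unvetted(ai_suggested))  # ['ccxt-mexc-futures']
```

A check like this could run in a pre-commit hook or CI job so that any new dependency name forces a manual review before installation.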

Quantifying the New AI-Driven Threat Landscape

The threat posed by AI hallucinations is not theoretical. Academic research has begun to quantify the scale of the problem, revealing its systemic nature. A study analyzing 16 code-generating large language models found that roughly 19% of all recommended software packages did not exist. Furthermore, the research showed that 43% of these hallucinated packages were recommended repeatedly when the same prompts were re-run, significantly increasing the probability that a developer would encounter one and attempt to use it. That repeatability also makes the names predictable, which is exactly what an attacker needs in order to register them in advance. These statistics paint a concerning picture of an emerging attack surface that is vast, automated, and growing with every new AI model released.
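
The two figures above measure different things: the first is the share of recommended package references that do not exist, and the second is the share of hallucinated names that recur. The sketch below shows how such rates could be computed. It is not the study’s methodology; the function names, the `known` registry snapshot, and the toy runs (with deliberately made-up package names) are all assumptions for illustration.

```python
from collections import Counter


def hallucination_rate(recommended: list, known: set) -> float:
    """Share of recommended package references that are not in `known`."""
    if not recommended:
        return 0.0
    missing = sum(1 for name in recommended if name not in known)
    return missing / len(recommended)


def repeat_rate(runs: list, known: set) -> float:
    """Share of distinct hallucinated names that appear in more than one run."""
    seen = Counter()
    for run in runs:
        for name in set(run):              # count each name once per run
            if name not in known:
                seen[name] += 1
    if not seen:
        return 0.0
    return sum(1 for count in seen.values() if count > 1) / len(seen)


# Toy data: a hypothetical registry snapshot and four hypothetical model runs.
known = {"requests", "numpy"}
runs = [
    ["requests", "made-up-pkg-a"],
    ["numpy", "made-up-pkg-a"],
    ["requests", "made-up-pkg-b"],
    ["numpy", "requests"],
]
flat = [name for run in runs for name in run]

print(hallucination_rate(flat, known))  # 0.375 (3 of 8 references)
print(repeat_rate(runs, known))         # 0.5   (1 of 2 hallucinated names recurs)
```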

This research is corroborated by real-world incidents. A notable case study is the malicious package named “ccxt-mexc-futures,” which was discovered on PyPI. Created using the slopsquatting technique, the package was designed to impersonate a legitimate library for cryptocurrency trading. After being recommended by an AI tool, it was downloaded over 1,000 times before it was identified and removed. The malware within was crafted to modify operations related to cryptocurrency futures, likely to steal funds or manipulate trades. While this incident was relatively contained, it serves as a powerful proof-of-concept for how slopsquatting could be used to launch far more devastating attacks, potentially initiating a self-replicating worm or compromising sensitive enterprise systems on a massive scale.

A Unified Strategy for Proactive Defense

The common thread weaving through the SolarWinds breach, the Log4Shell crisis, and the rise of AI-driven slopsquatting is a persistent and critical lack of visibility into the software supply chain. To counter these evolving threats, organizations must move beyond a reactive security posture and adopt a unified, proactive strategy that integrates development and security workflows. This requires embracing the “shift left” philosophy from DevOps, which advocates for addressing quality and security issues early in the development lifecycle, and combining it with the cybersecurity principle of operating “left of boom”—preempting an attack before it can detonate.

Achieving this proactive stance depends on several foundational tools and practices. A comprehensive and continuously updated asset inventory is the first step, providing a clear picture of an organization’s software landscape and the business impact of each application. Building upon this, Software Bills of Materials (SBOMs) have become essential for providing granular visibility into every component, library, and dependency within a piece of software. An SBOM acts as a detailed ingredient list, allowing teams to quickly identify if they are affected by a newly discovered vulnerability. This visibility must be complemented by Static and Dynamic Application Security Testing (SAST and DAST) to identify flaws in both proprietary and third-party code before it reaches production.
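
The “ingredient list” lookup can be sketched in a few lines. The example assumes a CycloneDX-style JSON SBOM with a top-level `components` array whose entries carry `name` and `version` fields. The function names are hypothetical, and the `fixed_in` threshold used in the example is illustrative only; the real affected range should always be taken from the vendor advisory. The sketch also inspects only top-level components, although the format allows nesting.

```python
import json
import re


def version_key(version: str) -> tuple:
    """Turn '2.14.1' into (2, 14, 1); a suffix such as '-beta9' is ignored."""
    return tuple(int(part) for part in re.findall(r"\d+", version.split("-", 1)[0]))


def affected_components(sbom_json: str, name: str, fixed_in: str) -> list:
    """Return components called `name` whose version is below `fixed_in`."""
    sbom = json.loads(sbom_json)
    threshold = version_key(fixed_in)
    return [
        component
        for component in sbom.get("components", [])
        if component.get("name") == name
        and version_key(component.get("version", "0")) < threshold
    ]


# Illustrative SBOM fragment, not taken from a real product.
sample_sbom = json.dumps({
    "bomFormat": "CycloneDX",
    "components": [
        {"name": "log4j-core", "version": "2.14.1"},
        {"name": "log4j-core", "version": "2.17.1"},
        {"name": "jackson-databind", "version": "2.13.0"},
    ],
})

# "2.15.0" is an assumed threshold for illustration; confirm it against the advisory.
hits = affected_components(sample_sbom, "log4j-core", "2.15.0")
print([component["version"] for component in hits])  # ['2.14.1']
```

Run across every SBOM in the asset inventory, a query like this turns the question “where are we exposed?” from a manual search into a lookup.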

Ultimately, the fight against automated threats may require leveraging AI itself as a defensive tool. Machine learning algorithms excel at pattern recognition and can be trained to monitor for indicators of attack (IOAs), subtle deviations from normal system behavior that often signal an ongoing compromise. By analyzing network traffic, user activity, and application logs, defensive AI can surface anomalies that human analysts would likely miss, providing early warning of a supply chain attack in progress. Developers can apply the same defensive mindset to their own assistants by embedding security requirements directly into their AI prompts, explicitly asking for code that incorporates safeguards such as multi-factor authentication, account lockouts, and robust input sanitization. However, technology alone is not a panacea. The most critical defense remains the human firewall: rigorous, mandatory code review and application security testing are essential safeguards for validating any code, especially AI-generated code, before it is trusted in a production environment.
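
The idea of flagging deviations from normal behavior can be illustrated with something much simpler than a trained model. The sketch below is a plain statistical baseline (a z-score test), used here as a stand-in for the machine learning approaches described above. The metric (hourly outbound connection counts), the function name, the threshold, and the numbers are all hypothetical.

```python
from statistics import mean, stdev


def anomalies(baseline: list, observed: list, threshold: float = 3.0) -> list:
    """Return indexes in `observed` that deviate from the baseline mean
    by more than `threshold` standard deviations."""
    mu = mean(baseline)
    sigma = stdev(baseline)  # needs at least two baseline points
    if sigma == 0:
        # A perfectly flat baseline: any different value is a deviation.
        return [i for i, value in enumerate(observed) if value != mu]
    return [
        i for i, value in enumerate(observed)
        if abs(value - mu) / sigma > threshold
    ]


# Hypothetical hourly outbound connection counts for one build server.
baseline = [100, 104, 98, 101, 97, 103, 99, 102]
observed = [101, 99, 240, 102]

print(anomalies(baseline, observed))  # [2]
```

A production system would model many signals at once and adapt its baseline over time, but the principle is the same: learn what normal looks like, then alert on what does not fit.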

The path from the state-sponsored infiltration of SolarWinds to the automated deception of AI slopsquatting illustrates a rapid and consequential evolution in digital risk. As the tools for building software have become more powerful and interconnected, so too have the vectors for compromising it. The industry has learned that its greatest innovations can also harbor its most profound weaknesses. Confronting this new reality requires a return to fundamentals. While the threats grow more sophisticated, the most effective solutions center not on a single technology but on a strategic fusion of proactive visibility, integrated security practices, and the indispensable role of human vigilance and expert oversight.