The rapid integration of artificial intelligence into software development environments has opened a new frontier of efficiency, but it has also introduced a novel and alarming class of security risks that are only now coming to light. Security researchers have uncovered more than 30 serious vulnerabilities across a wide range of popular AI-powered coding assistants and Integrated Development Environment (IDE) extensions. This vulnerability class, dubbed “IDEsaster” by its discoverer, Ari Marzouk, shows how AI agents can be manipulated through prompt injection into misusing legitimate IDE features, with consequences ranging from data theft and unauthorized configuration changes to remote code execution (RCE). The research found that a staggering 100% of the tested AI IDEs were susceptible, including tools such as GitHub Copilot, Cursor, Gemini CLI, and Claude Code. So far, 24 CVEs have been assigned, pointing to a systemic issue that undermines the trust developers place in these increasingly indispensable tools.

1. The Anatomy of an IDEsaster Attack

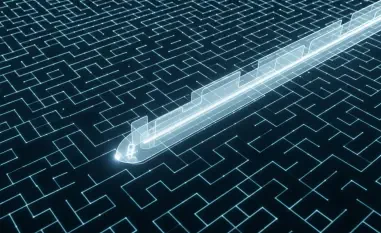

The IDEsaster attack chain is particularly insidious because it does not rely on exploiting software bugs but instead weaponizes the intended functionality of the development environment. The exploit hinges on a combination of three components: prompt injection, auto-approved AI actions, and legitimate IDE features. It begins when an attacker crafts hidden, malicious instructions and embeds them in seemingly harmless content, such as a file, a URL, or a block of text that a developer might innocently process. The AI model, unable to distinguish these instructions from legitimate data, is tricked into following them. The danger is compounded because many AI agents are configured with broad permissions to read or modify files without explicit, case-by-case user approval, which gives the injected instructions a path to act automatically. Finally, the manipulated agent uses standard IDE capabilities, such as parsing JSON configuration files or applying workspace settings, to carry out the attacker’s commands. Helpful development features thus become vectors for data exfiltration or arbitrary code execution, turning the IDE from a productivity tool into a security liability.
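The auto-approval component is the easiest of the three to make concrete. The sketch below assumes a hypothetical agent that emits tool calls named `read_file` and `write_file` against workspace-relative paths (neither the tool names nor the `ToolCall` type come from any specific product) and shows one possible policy: ordinary edits stay auto-approved, but any write into `.vscode/`, `.idea/`, or a `.code-workspace` file waits for the developer. The list of configuration locations is an assumption, not an exhaustive catalogue.

```python
from dataclasses import dataclass
from pathlib import PurePosixPath


@dataclass(frozen=True)
class ToolCall:
    """A hypothetical tool call emitted by an AI agent."""

    tool: str  # hypothetical tool names, e.g. "read_file", "write_file"
    path: str  # workspace-relative path the tool would touch


# Assumed IDE configuration locations; real IDEs recognize more than these.
CONFIG_DIRS = {".vscode", ".idea"}
CONFIG_SUFFIX = ".code-workspace"


def touches_ide_config(path: str) -> bool:
    """True if the path lies inside an IDE config directory or is a workspace file."""
    p = PurePosixPath(path)
    return any(part in CONFIG_DIRS for part in p.parts) or p.name.endswith(CONFIG_SUFFIX)


def needs_human_approval(call: ToolCall) -> bool:
    """Decide whether a call may run unattended or must wait for the developer."""
    if call.tool == "read_file":
        return False  # reads are auto-approved in this sketch
    if call.tool == "write_file":
        # Ordinary edits stay auto-approved, but IDE config is never edited silently.
        return touches_ide_config(call.path)
    return True  # unknown tools (e.g. shell commands) always require approval


print(needs_human_approval(ToolCall("write_file", ".vscode/settings.json")))  # True
```

Auto-approving reads is a deliberate trade-off in this sketch: reading a secrets file is harmless only if nothing can then carry its contents off the machine.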

The real-world implications are severe: the researchers demonstrated several high-impact attack scenarios that require no user interaction beyond the initial processing of the malicious prompt. One flaw involves Remote JSON Schemas (e.g., CVE-2025-49150, CVE-2025-53097), where an attacker makes the IDE fetch a remote schema from an attacker-controlled domain with sensitive data embedded in the request, so the data leaks the moment the fetch occurs. Another critical class is IDE Settings Overwrite (e.g., CVE-2025-53773, CVE-2025-54130), in which a malicious prompt covertly edits IDE configuration files such as .vscode/settings.json or .idea/workspace.xml so that the IDE executes a malicious file when certain triggers fire. Attacks on Multi-Root Workspace Settings (e.g., CVE-2025-64660) similarly let adversaries alter workspace configurations to load and run malicious code automatically. In every case, the process unfolds silently in the background, without requiring the user to reopen or refresh the project, which makes detection extremely difficult.
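A defender could look for the first two patterns in files an agent has written. The following sketch assumes plain JSON input (VS Code settings files also accept comments, which `json.loads` rejects, so real JSONC would need pre-processing). It reports `$schema` URLs on hosts outside a hypothetical allowlist and, as a crude assumed heuristic, settings keys ending in `executablePath`, a naming pattern some VS Code settings use for programs the editor launches. Because `.code-workspace` files nest their settings under a `settings` key, the scan looks there when present. It is a detection aid with obvious gaps, not a reconstruction of the researchers' tooling.

```python
import json
from urllib.parse import urlparse

# Hypothetical allowlist of hosts trusted to serve JSON schemas.
TRUSTED_SCHEMA_HOSTS = {"json.schemastore.org"}


def remote_schema_findings(doc: object) -> list[str]:
    """Walk a parsed JSON value and report "$schema" URLs on untrusted hosts."""
    findings: list[str] = []
    if isinstance(doc, dict):
        for key, value in doc.items():
            if key == "$schema" and isinstance(value, str):
                url = urlparse(value)
                if url.scheme in ("http", "https") and url.hostname not in TRUSTED_SCHEMA_HOSTS:
                    findings.append(f"untrusted remote schema: {value}")
            else:
                findings.extend(remote_schema_findings(value))
    elif isinstance(doc, list):
        for item in doc:
            findings.extend(remote_schema_findings(item))
    return findings


def executable_setting_findings(settings: dict) -> list[str]:
    """Flag keys that look like they name a program the IDE will run (assumed heuristic)."""
    return [
        f"executable path setting: {key} = {value}"
        for key, value in settings.items()
        if key.lower().endswith("executablepath")
    ]


def scan_config(text: str) -> list[str]:
    """Scan one settings.json or .code-workspace file given as plain JSON text."""
    doc = json.loads(text)  # assumes no JSONC comments
    findings = remote_schema_findings(doc)
    if isinstance(doc, dict):
        # .code-workspace files keep settings under "settings"; settings.json is flat.
        settings = doc.get("settings", doc)
        if isinstance(settings, dict):
            findings.extend(executable_setting_findings(settings))
    return findings
```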

2. A New Paradigm for Development Security

The core issue behind these attacks is a fundamental limitation of Large Language Models (LLMs): they cannot reliably distinguish between the content a user supplies and hidden instructions embedded in that content. A single poisoned file name, a crafted diff output, or a manipulated URL pasted into the IDE is enough to deceive the model and set off a malicious sequence of actions. The threat extends beyond the individual developer’s machine to the entire software supply chain. As Aikido researcher Rein Daelman warned, any repository that uses AI for tasks such as issue triage, pull request labeling, code suggestions, or automated replies is at risk. Such systems could fall victim to prompt injection, command injection, secret exfiltration, and ultimately full repository compromise; if the compromised project is itself a dependency, injected malicious code would flow to countless downstream users. The ease with which these systems can be manipulated exposes a critical gap in current security practices, which have not yet adapted to the challenges of AI-driven automation in development workflows.
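One way instructions can stay hidden from a human reviewer while remaining readable by the model is invisible Unicode, such as zero-width characters or the "tag" block U+E0000 to U+E007F. The sketch below, using only Python's standard `unicodedata` module, flags such characters in a file name or text snippet. It assumes this particular hiding technique: it cannot catch instructions written in plain sight (for example in a code comment), and legitimate text such as emoji sequences with zero-width joiners will produce false positives. It is a triage heuristic, not a fix for the model's underlying inability to separate data from instructions.

```python
import unicodedata


def hidden_character_findings(text: str) -> list[str]:
    """Report characters a human is unlikely to see but a model still reads."""
    findings = []
    for index, ch in enumerate(text):
        code = ord(ch)
        # Unicode "tag" characters render as nothing in most fonts.
        is_tag = 0xE0000 <= code <= 0xE007F
        # Category "Cf" (format) covers zero-width spaces/joiners and bidi controls.
        is_format = unicodedata.category(ch) == "Cf"
        if is_tag or is_format:
            findings.append(f"U+{code:04X} at index {index}")
    return findings


print(hidden_character_findings("notes\u200b.md"))  # ['U+200B at index 5']
```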

The discovery of IDEsaster calls for a fundamental shift in how the industry approaches security in the age of AI. The researchers stress that the community must adopt a new mindset, termed “Secure for AI,” that goes beyond securing the AI models themselves. This paradigm requires developers and security engineers to anticipate how AI-driven features could be abused, not just how they are designed to function today. For developers using AI IDEs, that means working only with trusted projects and repositories, continuously monitoring Model Context Protocol (MCP) servers, and enabling human-in-the-loop verification wherever possible. For the creators of these tools, it means a much stricter application of security principles: experts urge them to apply the principle of least privilege to LLM tools, continuously audit IDE features for potential attack vectors, harden system prompts, sandbox command execution, and enforce egress controls to prevent unauthorized data transfer. The push to build security into the design of these powerful tools has never been more urgent.
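Two of the recommendations for tool builders, least privilege and egress control, reduce to simple deny-by-default checks. The sketch below uses a hypothetical `AgentPolicy` type (not any product's API): a tool call is allowed only if that tool was explicitly granted to the session, and an outbound request is allowed only over HTTPS to an explicitly listed host. In practice such checks are better enforced outside the agent process, for example at a proxy or sandbox boundary, since a policy the agent evaluates for itself is only as trustworthy as the agent.

```python
from dataclasses import dataclass, field
from urllib.parse import urlparse


@dataclass(frozen=True)
class AgentPolicy:
    """Hypothetical per-session policy combining least privilege and an egress allowlist."""

    allowed_tools: frozenset[str]
    allowed_hosts: frozenset[str] = field(default_factory=frozenset)

    def may_use(self, tool: str) -> bool:
        # Least privilege: a tool not explicitly granted is denied.
        return tool in self.allowed_tools

    def may_fetch(self, url: str) -> bool:
        # Egress control: only HTTPS requests to explicitly listed hosts are allowed.
        parsed = urlparse(url)
        return parsed.scheme == "https" and parsed.hostname in self.allowed_hosts


policy = AgentPolicy(
    allowed_tools=frozenset({"read_file", "write_file"}),
    allowed_hosts=frozenset({"api.github.com"}),
)
print(policy.may_fetch("https://attacker.example/?d=secret"))  # False
```

Under this policy a request that smuggles data to an unlisted host is refused regardless of what the model was told, because the decision depends only on the host, not on the prompt.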