In the ever-evolving landscape of cybersecurity, staying ahead of vulnerabilities in widely used tools is crucial. Today, we’re speaking with Malik Haidar, a seasoned cybersecurity expert with a deep background in protecting multinational corporations from digital threats. With his extensive experience in analytics, intelligence, and security, Malik has a unique perspective on integrating business needs with robust cybersecurity strategies. In this interview, we dive into a recently discovered data exposure vulnerability in Keras, a popular deep learning tool. We’ll explore how this flaw impacts users, the risks it poses, real-world exploitation scenarios, and the steps taken to mitigate it, all while uncovering broader lessons for securing machine learning frameworks.

Can you start by explaining what Keras is and why it’s so important in the field of deep learning?

Absolutely. Keras is an open-source library that provides a user-friendly Python interface for building artificial neural networks. It’s widely used in deep learning because it simplifies the process of creating and training AI models. What makes it especially powerful is its ability to run on top of multiple backends, namely TensorFlow, JAX, and PyTorch, acting as a sort of universal language for developers. You’ll see it in applications ranging from image recognition and natural language processing to autonomous systems and medical diagnostics; basically, anywhere complex data patterns need to be analyzed.

What types of projects or industries typically rely on Keras for their AI solutions?

Keras is a go-to for a wide range of industries. In tech, companies use it for things like recommendation systems or chatbots. In healthcare, it’s often behind models that analyze medical imaging or predict patient outcomes. You’ve also got automotive sectors using it for self-driving car algorithms, and even finance, where it powers fraud detection or market prediction tools. Its accessibility makes it popular among startups, academics, and large enterprises alike, since it lowers the barrier to entry for building sophisticated models.

Can you break down the specific vulnerability recently found in Keras and why it’s a concern?

Sure. This vulnerability, tracked as CVE-2025-12058, stems from how Keras handles its StringLookup and IndexLookup preprocessing layers. These layers map raw inputs such as strings to integer indices using a vocabulary (think of it as a dictionary for the data), and that vocabulary can be supplied as a file path or URL. The flaw was that Keras accepted those paths and URLs without proper checks. During deserialization, when a model is loaded, Keras would access these files or URLs and incorporate their contents into the model. This opens the door to attackers reading local files or making unauthorized network requests, even bypassing security features like safe mode. It’s a significant concern because it can expose sensitive data or enable broader attacks.
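
To make the mechanism concrete, here is a minimal sketch of a pre-load check. It assumes that a Keras 3 `.keras` file is a zip archive whose `config.json` lists each layer as an object with `class_name` and `config` keys, and that a lookup layer given a path or URL keeps that string under `config["vocabulary"]`. The helper names are hypothetical and not part of Keras. The sketch flags any StringLookup or IndexLookup layer whose vocabulary is a single string, and it reads only the JSON, so nothing is deserialized.

```python
import io
import json
import zipfile

# Layer classes named in CVE-2025-12058 (assumed to appear as "class_name"
# values in a .keras archive's config.json).
LOOKUP_LAYERS = {"StringLookup", "IndexLookup"}


def find_string_vocabularies(node, found=None):
    """Recursively collect (layer_name, vocabulary) pairs for lookup layers
    whose vocabulary is a single string (a path or URL) rather than a list."""
    if found is None:
        found = []
    if isinstance(node, dict):
        config = node.get("config")
        if node.get("class_name") in LOOKUP_LAYERS and isinstance(config, dict):
            vocab = config.get("vocabulary")
            if isinstance(vocab, str):
                found.append((config.get("name", "<unnamed>"), vocab))
        for value in node.values():
            find_string_vocabularies(value, found)
    elif isinstance(node, list):
        for item in node:
            find_string_vocabularies(item, found)
    return found


def scan_keras_archive(path_or_file):
    """Read config.json straight from the zip archive; the model is never loaded."""
    with zipfile.ZipFile(path_or_file) as archive:
        config = json.loads(archive.read("config.json"))
    return find_string_vocabularies(config)


if __name__ == "__main__":
    # Build a tiny in-memory archive that mimics a booby-trapped model.
    crafted = {"class_name": "Functional", "config": {"layers": [
        {"class_name": "StringLookup",
         "config": {"name": "lookup", "vocabulary": "/home/user/.ssh/id_rsa"}},
        {"class_name": "StringLookup",
         "config": {"name": "inline", "vocabulary": ["cat", "dog"]}},
    ]}}
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w") as archive:
        archive.writestr("config.json", json.dumps(crafted))
    buffer.seek(0)
    print(scan_keras_archive(buffer))  # [('lookup', '/home/user/.ssh/id_rsa')]
```

Reading the JSON instead of calling the loader is the point of the design: the flaw fires during deserialization, so inspection has to happen before any Keras code touches the file.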

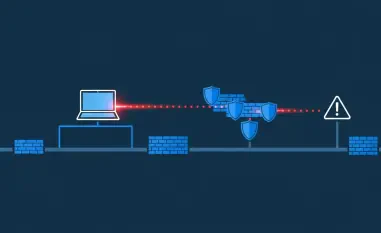

How does this flaw specifically tie into server-side request forgery, or SSRF, attacks?

SSRF happens when an attacker tricks a server into making requests to unintended locations, like internal systems or external URLs. In this Keras vulnerability, since the library processes URLs provided in the vocabulary inputs during deserialization, an attacker can craft a malicious model that forces the system to reach out to a server they control. This could be used to probe internal networks, retrieve sensitive data, or even trigger other exploits. It’s particularly dangerous in environments like cloud systems, where internal metadata services might be accessible through such requests.
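
A rough way to triage the URLs that such a scan turns up is sketched below. It is a hypothetical helper built only on the standard library: it treats anything that is not an `http`/`https` URL as a local path, flags well-known cloud metadata endpoints, and uses `ipaddress` to spot loopback, private, and link-local literals. It deliberately does not resolve hostnames, so a named host that points inward would still need a DNS check.

```python
import ipaddress
from urllib.parse import urlparse

# Instance metadata endpoints commonly targeted through SSRF (illustrative list).
METADATA_HOSTS = {"169.254.169.254", "metadata.google.internal"}


def classify_vocabulary_source(value):
    """Label a vocabulary string by where loading it would reach."""
    parsed = urlparse(value)
    if parsed.scheme not in ("http", "https"):
        return "local-path"  # plain paths, file:// URLs and other schemes
    host = (parsed.hostname or "").lower()
    if host in METADATA_HOSTS:
        return "cloud-metadata"
    try:
        ip = ipaddress.ip_address(host)
    except ValueError:
        return "named-host"  # not resolved here; could still point inward
    if ip.is_loopback or ip.is_private or ip.is_link_local:
        return "internal-address"
    return "public-address"


for source in ["http://169.254.169.254/latest/meta-data/",
               "http://10.0.0.5/vocab.txt",
               "https://attacker.example/vocab.txt",
               "/home/user/.ssh/id_rsa"]:
    print(source, "->", classify_vocabulary_source(source))
```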

Can you paint a picture of how an attacker might exploit this vulnerability in a real-world scenario?

Imagine an attacker uploads a malicious Keras model to a public repository or shares it through a seemingly legitimate project. This model has vocabulary parameters crafted to point to sensitive files on a user’s system, like SSH private keys. When an unsuspecting developer or system loads this model, Keras reads those files into the model’s state during deserialization. The attacker can then retrieve this data, for example by getting hold of the model after it has been loaded and saved again, since its vocabulary now carries the file contents, or through other exfiltration methods. With SSH keys in hand, they could gain access to servers, code repositories, or cloud infrastructure, potentially leading to a full-blown breach.
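
Teams that must accept external vocabulary files, at least until every environment is patched, could confine them to a directory they control. The sketch below is a hypothetical guard, not something Keras provides. It resolves `~`, `..`, and symlinks with `os.path.realpath` before comparing paths, so a crafted value such as `~/.ssh/id_rsa` or `vocab/../../etc/passwd` is rejected.

```python
import os


def is_within_allowed_dir(candidate, allowed_dir):
    """Return True only if candidate resolves to a location inside allowed_dir."""
    # Expand "~" and resolve "..", symlinks and relative segments first.
    real_candidate = os.path.realpath(os.path.expanduser(candidate))
    real_allowed = os.path.realpath(allowed_dir)
    try:
        # commonpath compares whole path components, so "/data/vocab-evil"
        # is not mistaken for a child of "/data/vocab".
        return os.path.commonpath([real_candidate, real_allowed]) == real_allowed
    except ValueError:
        # Raised on Windows when the two paths sit on different drives.
        return False
```

A check like this complements, rather than replaces, the upstream fix: it only helps in code paths that the team controls and routes vocabulary files through.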

What kind of impact could this have on an organization, especially in a cloud environment?

The impact can be devastating. In a cloud environment, if a malicious model is loaded on a virtual machine, the SSRF path could make it query the instance metadata service (typically reachable at the link-local address 169.254.169.254) and steal IAM credentials. That’s essentially handing over the keys to an organization’s entire cloud infrastructure: attackers could spin up resources, delete data, or plant backdoors. Beyond that, compromised SSH keys could allow access to private code repositories, enabling attackers to inject malicious code into CI/CD pipelines or production systems. It’s a domino effect that can lead to data theft, operational disruption, or even ransomware scenarios.

How was this vulnerability addressed in the latest Keras update, and what does that fix mean for users?

In Keras version 3.11.4, the developers tackled this issue head-on by changing how vocabularies are handled. Now, vocabulary files are embedded directly into the Keras archive and loaded from there during initialization, rather than accessing arbitrary external files or URLs. Additionally, when safe mode is enabled, the system blocks the loading of untrusted vocabulary files altogether. For users, this means a much lower risk of accidental exposure, but it also underscores the importance of updating to the latest version and double-checking security settings when loading models from untrusted sources.
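
A quick way to confirm that an environment already carries the fix is to compare the installed version against 3.11.4, the release cited above. This is a hypothetical stdlib-only check. It reads the version through `importlib.metadata` and compares only the leading numeric components, so a pre-release such as `3.11.4rc1` would be treated as patched; a project that already depends on the `packaging` library may prefer its full version parsing.

```python
from importlib import metadata

# First release the interview cites as containing the fix.
PATCHED_RELEASE = (3, 11, 4)


def parse_release(version):
    """Turn '3.11.4' or '3.12.0rc1' into a 3-tuple of leading integers."""
    parts = []
    for piece in version.split(".")[:3]:
        digits = ""
        for ch in piece:
            if not ch.isdigit():
                break  # stop at suffixes like 'rc1' or 'dev0'
            digits += ch
        parts.append(int(digits) if digits else 0)
    parts += [0] * (3 - len(parts))  # pad '3.12' to (3, 12, 0)
    return tuple(parts)


def is_patched(version):
    return parse_release(version) >= PATCHED_RELEASE


def installed_keras_is_patched():
    """True/False for the installed keras package, or None if it is absent."""
    try:
        return is_patched(metadata.version("keras"))
    except metadata.PackageNotFoundError:
        return None


print(installed_keras_is_patched())
```

Updating is only half of it: models from untrusted sources should still be loaded with safe mode on, which in Keras 3 is the `safe_mode=True` argument (the default) of `keras.saving.load_model`.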

What’s your forecast for the future of security in machine learning frameworks like Keras, given the increasing reliance on AI?

I think we’re going to see a lot more focus on securing machine learning frameworks as AI becomes even more integral to business and research. Vulnerabilities like this one in Keras highlight that these tools, while powerful, are also complex and can be exploited in ways traditional software might not be. I expect tighter integration of security-by-design principles, like stricter input validation and sandboxing, as well as more community-driven efforts to identify and patch flaws early. But it’s a cat-and-mouse game—attackers will keep finding creative ways to target AI systems, so we’ll need constant vigilance, better education for developers, and probably some regulatory push to enforce security standards in this space.