Diving into the ever-evolving world of cybersecurity, I had the privilege of sitting down with Malik Haidar, a seasoned expert with a wealth of experience in combating cyber threats across multinational corporations. With a unique blend of analytics, intelligence, and security know-how, Malik has a knack for weaving business perspectives into robust cybersecurity strategies. In our conversation, we explored the rise of AI-driven tools in penetration testing, the potential risks they pose when misused, and the lack of transparency surrounding some of the companies behind these innovations. We also delved into the specific capabilities of emerging frameworks and how they’re reshaping the threat landscape for both defenders and attackers.

How did you first come across the intersection of AI and penetration testing, and what excites you most about this development?

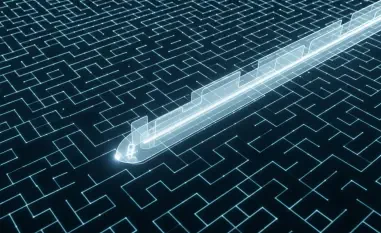

I’ve been tracking the integration of AI into cybersecurity for a while now, especially as it started gaining traction in automating complex tasks. My first real exposure came through observing how machine learning could analyze vast datasets to predict vulnerabilities faster than traditional methods. What excites me most is AI’s potential to revolutionize red teaming: simulating attacks with a speed and precision that humans alone can’t match. It’s a game-changer for identifying weaknesses before they’re exploited, but it’s also a double-edged sword, as we’re seeing with tools that can just as easily fall into the wrong hands.

Can you walk us through what makes AI-powered penetration testing tools stand out compared to traditional methods?

Absolutely. Traditional penetration testing often relies on manual processes, such as painstakingly crafting exploits or sifting through logs for weak points. AI-powered tools, on the other hand, automate much of this workflow. They can scan thousands of systems simultaneously, adapt to failed attempts by adjusting their approach in real time, and even generate exploits by drawing on large databases of prompts or past attack patterns. This scalability and adaptability make testing faster and more thorough, but they also lower the skill barrier, putting sophisticated attacks within reach of less experienced individuals.

What are some of the broader implications of these tools becoming publicly available on platforms like the Python Package Index (PyPI)?

When tools like these hit public repositories, they’re essentially democratizing advanced cyber capabilities. On one hand, that’s great for ethical hackers and organizations looking to bolster their defenses with accessible, cutting-edge resources. On the other, it’s a goldmine for cybercriminals. Anyone with basic coding knowledge can download, tweak, and weaponize these frameworks for malicious purposes, such as ransomware campaigns or data theft at scale. That accessibility alone is likely to increase both the frequency and the sophistication of the attacks we see.

How do you see the automation capabilities of AI tools impacting the cybersecurity landscape for both defenders and attackers?

For defenders, AI automation is a lifeline. It can prioritize alerts, correlate threats across sprawling networks, and even suggest remediation steps in real time, which is critical when you’re under siege. But it’s just as empowering for attackers. Automation allows them to conduct reconnaissance, exploit vulnerabilities, and pivot through networks at unprecedented speed. It’s like giving a chess player a supercomputer: every move is calculated faster and more efficiently. That creates a real challenge for enterprise security teams, who now face a higher volume of adaptive, automated threats.

What concerns do you have about the potential misuse of AI-driven frameworks by malicious actors?

My biggest concern is how easily these tools can be repurposed for harm. Features designed for legitimate testing, like remote access or keystroke logging, can readily become instruments of surveillance or data theft in the wrong hands. We’ve seen this before with frameworks that started out as legitimate tools but became staples of cybercrime. Add the AI’s ability to orchestrate complex attacks without much user expertise, and you’ve got a recipe for widespread abuse, from targeted espionage to mass exploitation campaigns.

How do you think the industry can balance the benefits of these powerful tools with the risks they introduce?

It’s a tough nut to crack, but I think it starts with stricter controls on distribution and usage. Developers could implement licensing or verification processes to ensure tools are only in the hands of legitimate users. At the same time, we need robust education and awareness campaigns to help organizations understand the dual-use nature of these technologies. On the regulatory side, governments and industry bodies should collaborate on frameworks that encourage innovation while setting clear boundaries to deter misuse. It’s about finding that sweet spot between empowerment and accountability.

What is your forecast for the future of AI in cybersecurity over the next few years?

I believe we’re just scratching the surface of what AI can do in this space. Over the next few years, I expect to see even deeper integration of AI into both offensive and defensive tools, with systems becoming more autonomous and predictive. We’ll likely see AI not just reacting to threats but anticipating them based on global threat intelligence feeds. However, this will also escalate the arms race with attackers, who’ll leverage the same tech for more evasive, personalized attacks. The challenge will be staying ahead of that curve, and I think collaboration across industries and borders will be key to managing the risks while harnessing the benefits.