The productivity boom promised by generative AI may be inadvertently creating one of the most significant and least visible security vulnerabilities for modern enterprises, one that operates quietly within employees’ daily workflows. This growing threat, often termed “Shadow AI,” arises when staff members use personal accounts for powerful Large Language Models (LLMs) like ChatGPT, Google Gemini, and Microsoft Copilot to perform work-related tasks. While their intent is typically to work more efficiently, the practice creates a critical blind spot for IT and security teams, as sensitive corporate data flows into unmonitored, third-party applications. Recent industry analysis from the Cloud and Threat Report for 2026 reveals a startling reality: nearly half of all employees who use generative AI at work are doing so through personal applications. This widespread, unsanctioned usage bypasses traditional data loss prevention controls, leaving a company’s most valuable information, from proprietary source code to strategic plans, exposed without any organizational oversight or governance.

The Escalating Scale of Unmonitored Data Exposure

The volume of corporate data being funneled into these unsanctioned AI tools has reached an unprecedented scale, far outpacing the growth in user adoption alone. While the number of employees engaging with generative AI applications tripled over the last year, the amount of data and prompts sent to these platforms grew sixfold, which implies that the average user now sends roughly twice as much as a year ago and reflects how deeply these tools have been integrated into core business functions. In a typical organization, this translates to an average of 18,000 prompts per month, up from just 3,000 a year prior. For companies with highly engaged workforces, this figure can reach 1.4 million prompts monthly. Each of these interactions represents a potential point of data leakage, where fragments of confidential information, customer data, or internal communications are absorbed by external models. This sixfold increase in data transmission transforms these powerful productivity enhancers into potential conduits for a continuous, low-level drain of intellectual property that operates beyond the reach of conventional security measures.

This dramatic surge in unmonitored AI usage has directly led to a doubling of data policy violations over the past twelve months, creating tangible and immediate risks for businesses. An average organization now contends with approximately 223 such violations every month, often originating from a small but highly active group of users, constituting about 3% of the generative AI user base. These incidents are not minor infractions; they frequently involve the exposure of highly sensitive information, including proprietary source code, protected intellectual property, confidential financial data, and even system login credentials. The consequences of such leaks extend beyond the immediate loss of data, leading to severe compliance challenges with regulations like GDPR and CCPA, as well as the potential for significant financial penalties and lasting reputational damage. This constant stream of violations underscores a critical vulnerability where the pursuit of individual efficiency directly compromises collective corporate security, leaving organizations in a perpetual state of heightened risk.
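
As a rough illustration of how a data policy check might catch some of the categories named above, such as login credentials, before a prompt leaves the network, the following minimal sketch screens prompt text against a few patterns. The pattern set, the names `PATTERNS` and `find_sensitive`, and the choice of regular expressions are all assumptions made for illustration; they are not drawn from the report, and a real data loss prevention policy would be far broader and carefully tuned.

```python
import re

# Illustrative patterns only (assumed for this sketch, not from the report).
PATTERNS = {
    # AWS access key IDs: "AKIA" followed by 16 uppercase letters or digits.
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    # Header line of a PEM-encoded private key.
    "private_key_block": re.compile(r"-----BEGIN [A-Z ]*PRIVATE KEY-----"),
    # Something that looks like "password = value" or "pwd: value".
    "password_assignment": re.compile(
        r"(?i)\b(?:password|passwd|pwd)\s*[:=]\s*\S+"
    ),
}


def find_sensitive(prompt: str) -> list[str]:
    """Return the sorted names of all patterns that match the prompt."""
    return sorted(
        name for name, pattern in PATTERNS.items() if pattern.search(prompt)
    )


if __name__ == "__main__":
    print(find_sensitive("Please debug this config: password = hunter2"))
```

Pattern matching of this kind only catches data with a recognizable shape. Source code, strategic plans, and other free-form intellectual property generally need different techniques, which is one reason visibility and employee education matter alongside automated checks.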

The Evolving Threat Landscape and Proactive Governance

Beyond the immediate danger of accidental data leakage, a more insidious threat vector has emerged in which malicious actors actively exploit information previously entered into LLMs. By crafting highly specific prompts, attackers can potentially coax these models into revealing sensitive corporate data that has been absorbed and retained from the inputs of other users. This transforms the LLM from a passive repository of leaked information into an active tool that can be turned against the very organizations whose data it holds. Information gleaned in this manner, such as details about internal projects, organizational charts, or confidential business strategies, can then be used to orchestrate far more effective and convincing cyberattacks. For example, a phishing campaign that cites specific, non-public project details is significantly more likely to succeed, turning an organization’s own data into a powerful instrument for social engineering and targeted breaches.

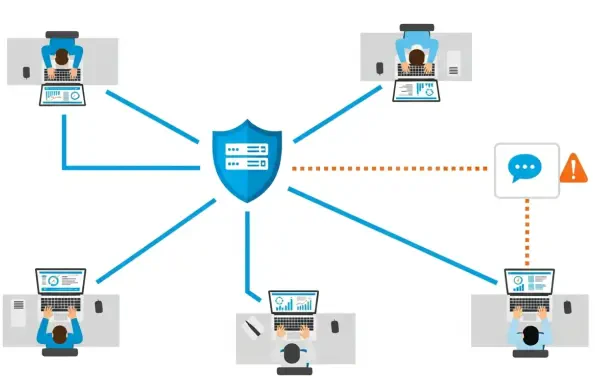

In response to this multifaceted threat, the consensus among cybersecurity experts is that organizations must urgently move to establish robust and comprehensive AI governance. The foundational step in this process is the implementation of effective policies and technological controls designed to provide maximum visibility into how and where AI tools are being used across the corporate network. This involves not only monitoring traffic to known AI platforms but also discovering and managing the use of previously unknown applications. However, technical solutions alone are insufficient. A critical component of a successful strategy is comprehensive employee education that focuses on clarifying what constitutes risky behavior. This means training staff to understand the inherent dangers of inputting sensitive data into personal AI accounts and guiding them toward the use of sanctioned, secure, enterprise-grade AI solutions. By striking a deliberate balance between enabling AI-driven productivity and enforcing strict security protocols, companies can begin to harness the benefits of this technology without exposing themselves to unacceptable levels of risk.
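
The visibility step described above can be sketched as a simple classification of web proxy log records. This is a minimal sketch under stated assumptions: the record fields (`domain`, `category`), the `"genai"` category label, and both domain inventories are hypothetical placeholders, and the example domains are not real services. It also cannot tell a personal account from an enterprise account on the same domain, which in practice requires richer signals than the destination alone.

```python
from collections import Counter

# Hypothetical inventories; an organization would maintain its own lists.
SANCTIONED = {"ai.corp.example"}
KNOWN_UNSANCTIONED = {"chat.example.net"}


def classify(record: dict) -> str:
    """Label one proxy log record by how its destination should be treated."""
    domain = record["domain"].lower()
    if domain in SANCTIONED:
        return "sanctioned"
    if domain in KNOWN_UNSANCTIONED:
        return "known_unsanctioned"
    # Assumes the proxy tags traffic with a category; "genai" is a made-up label.
    if record.get("category") == "genai":
        return "newly_discovered"
    return "not_ai"


def summarize(records: list[dict]) -> Counter:
    """Count records per label to show where AI traffic is going."""
    return Counter(classify(r) for r in records)


if __name__ == "__main__":
    sample = [
        {"user": "a", "domain": "ai.corp.example", "category": "genai"},
        {"user": "b", "domain": "chat.example.net", "category": "genai"},
        {"user": "c", "domain": "new-llm.example.org", "category": "genai"},
    ]
    print(summarize(sample))
```

Keeping a separate label for destinations that are categorized as AI but absent from both inventories reflects the point that governance has to cover previously unknown applications, not only the well-known platforms.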

Charting a Course Toward Secure AI Integration

As organizations grapple with the pervasive threat of Shadow AI, a clear trend toward proactive governance has begun to yield measurable results. Although the risks associated with unsanctioned AI use remain substantial, evidence is mounting that well-defined corporate policies are starting to change employee behavior. The most compelling indicator of this shift is the decline in the share of generative AI users relying on personal AI accounts for work-related activities, which dropped from 78% to 47% over the past year. This reduction shows that a concerted effort involving clear communication, employee education, and the deployment of new data governance strategies is not a futile exercise. Successful campaigns move the corporate mindset from passive threat awareness to active risk mitigation. Companies that take decisive steps to monitor AI usage and provide secure, sanctioned alternatives are finding that they can curb the most dangerous forms of data exposure, establishing a viable blueprint for safely integrating artificial intelligence into their operations.