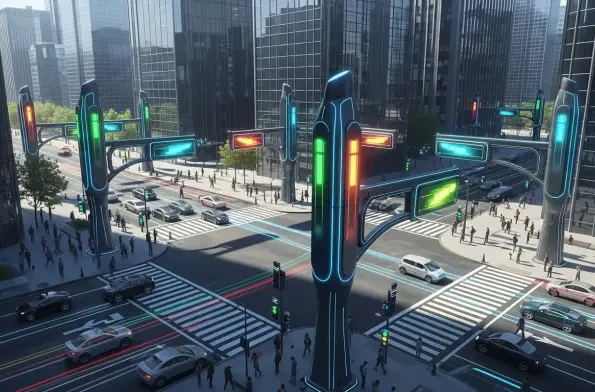

Imagine a world where power grids predict outages before they happen, water systems detect leaks in real-time, and transportation networks optimize traffic flow—all thanks to the power of artificial intelligence. This isn’t a far-off dream but a reality that critical infrastructure operators are beginning to explore. However, with this transformative potential comes a daunting challenge: ensuring that AI doesn’t compromise the very systems it aims to enhance. Recent guidance from the US Cybersecurity and Infrastructure Security Agency (CISA), in collaboration with international partners, offers a roadmap for navigating this delicate balance. The focus is on integrating AI into operational technology (OT) environments, which control vital public services, while prioritizing security and reliability. As AI continues to evolve, the stakes couldn’t be higher for industries that underpin daily life.

Navigating the Promise and Peril of AI in Critical Systems

Balancing Innovation with Risk Management

The allure of AI in critical infrastructure lies in its ability to boost efficiency, cut costs, and predict issues before they escalate. Machine learning, large language models, and AI agents can analyze vast datasets to streamline operations in power plants, water treatment facilities, and beyond. Yet OT environments, where downtime can mean disaster, introduce risks that consumer-grade AI applications rarely face. A single error in an AI-driven system could disrupt essential services or expose sensitive data such as engineering schematics. The recent guidance emphasizes understanding these dangers upfront. Operators must weigh the benefits against potential pitfalls and establish robust governance to oversee AI deployment. This means not just adopting cutting-edge tools but ensuring they are tailored to the high-stakes nature of infrastructure. Without this balance, the promise of innovation could quickly become a liability for entire communities.

Building a Culture of Secure Development

Beyond recognizing risks, fostering secure practices among staff is vital for safe AI integration. Too often, the rush to implement new technology overlooks the human element: employees who may lack training on AI-specific threats. The guidance urges organizations to prioritize education and to embed safety and security into every stage of development. This includes transparency about how AI models are built and used, especially as vendors increasingly embed AI directly into devices. Operators should demand clarity on a product’s AI functionality and data policies to avoid hidden vulnerabilities. Aligning AI efforts with existing cybersecurity frameworks also helps create a unified defense against threats. By cultivating a culture where caution and curiosity coexist, infrastructure teams can harness AI’s potential while minimizing blind spots. This proactive stance helps ensure that technology serves as a tool for progress rather than a source of unforeseen disruption.

Building a Framework for Safe AI Deployment

Protecting Sensitive Data and System Integrity

One of the thorniest challenges in bringing AI into OT systems is safeguarding sensitive information. Data such as asset inventories and process measurements, which are crucial for training AI models, can become a goldmine for malicious actors if exposed. The collaborative guidance stresses the need for stringent data security during every phase of AI implementation. Operators should anonymize or encrypt this information so that, if a breach does occur, it reveals as little as possible about the systems behind it. As cloud-based AI solutions grow in popularity, they bring additional risks such as added latency and unauthorized access. Testing in controlled environments before full deployment exposes weaknesses without real-world consequences, and regular updates to AI models help patch vulnerabilities so that systems remain resilient. Protecting data is not just a technical issue; it is a cornerstone of public trust in the critical services that millions rely on daily.
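The guidance, as summarized here, does not prescribe a specific anonymization technique. One common option is keyed pseudonymization: replace identifying fields with an HMAC token before data leaves the OT network, and drop fields the model does not need. The sketch below assumes a hypothetical `Reading` record with `asset_tag`, `site`, and `value` fields; it illustrates the idea and is not a complete data-protection scheme. Pseudonymized data is not fully anonymous, because the key holder can still link tokens back to assets.

```python
import hashlib
import hmac
from dataclasses import dataclass


@dataclass(frozen=True)
class Reading:
    """One process measurement from an OT historian (hypothetical schema)."""
    asset_tag: str  # e.g. a PLC or pump identifier that reveals plant layout
    site: str       # physical location, also sensitive
    value: float    # the measurement the model actually needs


def pseudonymize(tag: str, key: bytes) -> str:
    """Replace an identifier with a keyed HMAC-SHA256 token.

    The same tag always maps to the same token under one key, so a model can
    still learn per-asset patterns, but tokens cannot be recomputed without
    the key, which should stay inside the OT network.
    """
    digest = hmac.new(key, tag.encode("utf-8"), hashlib.sha256).hexdigest()
    return digest[:16]  # truncated for readability; keep more if collisions matter


def export_for_training(readings: list[Reading], key: bytes) -> list[dict]:
    """Build records for an external AI pipeline: tokenized asset, no site."""
    return [
        {"asset": pseudonymize(r.asset_tag, key), "value": r.value}
        for r in readings  # 'site' is dropped entirely because the model does not need it
    ]


if __name__ == "__main__":
    key = b"replace-with-a-secret-from-a-vault"  # hypothetical; load from a secrets store
    rows = export_for_training([Reading("PUMP-07", "North Plant", 4.2)], key)
    print(rows)
```

Dropping unneeded fields outright, as `site` is dropped here, is often a stronger protection than any transformation, since data that is never exported cannot leak.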

Prioritizing Human Oversight and Compliance

Even with the most advanced AI, human oversight remains non-negotiable in high-stakes environments. The guidance underlines the importance of monitoring AI outputs to catch anomalies or errors before they spiral into crises. Fail-safe mechanisms must be in place to override automated decisions if something goes awry. This human-in-the-loop approach ensures that technology augments, rather than replaces, expert judgment in critical operations. Compliance with evolving international AI standards also plays a key role, as does conducting regular audits to verify system integrity. Integration with incident response plans allows for swift action if issues arise, minimizing disruption. By blending human vigilance with structured oversight, operators can navigate the complexities of AI without sacrificing reliability. This dual focus creates a safety net, allowing innovation to flourish within a framework of accountability.
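To make the human-in-the-loop idea concrete, here is a minimal sketch of a gate that sits between an AI model and a control system. It assumes the AI proposes numeric setpoints and that engineers have defined a safe operating envelope. The class name `SetpointGate`, its parameters, and the three-way decision are illustrative assumptions, not something the guidance specifies. Small in-bounds changes pass through, larger ones wait for an operator, out-of-bounds values are rejected, and a single flag lets operators switch AI recommendations off entirely.

```python
from dataclasses import dataclass, field
from enum import Enum


class Decision(Enum):
    APPLY = "apply"              # within limits: pass to the control system
    NEEDS_OPERATOR = "operator"  # in bounds but a large change: a human must approve
    REJECT = "reject"            # outside the safe envelope, or AI switched off


@dataclass
class SetpointGate:
    """Screens AI-recommended setpoints before they reach the process (hypothetical)."""
    low: float                # engineered safe lower bound
    high: float               # engineered safe upper bound
    max_step: float           # largest change allowed without human sign-off
    ai_enabled: bool = True   # operator override: False means ignore all AI output
    audit_log: list = field(default_factory=list)

    def review(self, current: float, proposed: float) -> Decision:
        if not self.ai_enabled:
            decision = Decision.REJECT  # fail-safe: operators run the process manually
        elif not (self.low <= proposed <= self.high):
            decision = Decision.REJECT  # also catches NaN, since NaN comparisons are False
        elif abs(proposed - current) > self.max_step:
            decision = Decision.NEEDS_OPERATOR
        else:
            decision = Decision.APPLY
        # Record every decision so audits and incident response can reconstruct events
        self.audit_log.append((current, proposed, decision.value))
        return decision


if __name__ == "__main__":
    gate = SetpointGate(low=2.0, high=6.0, max_step=0.5)
    print(gate.review(4.0, 4.3))  # small, in-bounds change
    print(gate.review(4.0, 5.5))  # in bounds but a large jump
    print(gate.review(4.0, 9.0))  # outside the envelope
```

The envelope and step limit come from engineering analysis, not from the model, so a faulty or manipulated AI output cannot widen its own authority.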

Charting the Path Forward for AI in Infrastructure

Reflecting on a Cautious Yet Bold Approach

Taken as a whole, the effort to integrate AI into critical infrastructure is a delicate dance between ambition and caution. The guidance issued by CISA and its international partners lays a solid foundation, addressing everything from data protection to human oversight. It makes clear that while AI holds real potential to improve operational efficiency, unchecked deployment risks serious failures. The collaboration among international cybersecurity agencies underscores a shared commitment to safeguarding vital systems. Its recommendations, from testing in controlled environments to aligning with international standards, consistently put safety ahead of speed. The result is a picture of progress tempered by responsibility, aimed at keeping public services reliable even as the technology advances.

Envisioning Future Safeguards and Collaboration

As the AI landscape continues to shift, the next steps for operators center on sustained vigilance and international cooperation. Continuous monitoring and refinement of AI models is a critical practice for keeping pace with emerging threats. Partnerships across borders let operators and agencies share insights and strategies, building a collective defense against risks. Investment in workforce training helps teams handle AI tools with confidence and care. Ultimately, the goal is adaptive frameworks that evolve alongside the technology so that innovation never outstrips security. This forward-looking mindset, rooted in lessons learned so far, aims to secure critical infrastructure for the long haul while still unlocking AI’s potential.