As organizations increasingly adopt artificial intelligence (AI) to drive innovation and operational efficiency, embedding cybersecurity at every stage of AI adoption has never been more critical. This approach is essential to protect sensitive data, ensure resilience, and foster responsible innovation. While AI offers significant opportunities, it also expands an organization’s attack surface, making robust cybersecurity measures indispensable.

The Critical Need for Cybersecurity in AI

The Expanding Attack Surface

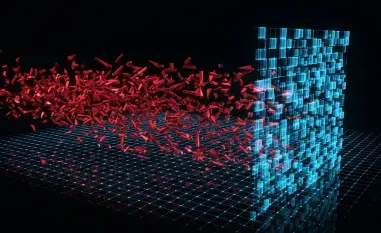

AI systems process and analyze vast amounts of data and are becoming integral to modern business operations. This integration also introduces new vulnerabilities. Left unaddressed, weaknesses such as adversarial attacks, data poisoning, and algorithm hacking can lead to data breaches, financial losses, and reputational damage. The same data-processing power that makes AI valuable also makes it an attractive target, because a compromised system can cause significant harm. Organizations therefore need to anticipate security threats and embed safeguards in every phase of the AI lifecycle, rather than adding them after deployment.

The dynamic nature of cyber threats means that as AI technologies evolve, so do the methods employed by those seeking to exploit them. Adversarial attacks can manipulate AI outputs through slight perturbations that are virtually invisible to human eyes but can mislead AI models. Data poisoning corrupts training datasets to produce flawed models, and algorithm hacking exploits weaknesses in the AI’s logic or design. These risks underscore the importance of continually updating and fortifying AI systems. Regular system audits, real-time monitoring, and seamless integration of security protocols into the AI development process can help organizations stay ahead of potential threats, ensuring AI-driven innovation remains secure and resilient.
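To make the idea of a "slight perturbation" concrete, here is a minimal sketch of an adversarial input against a toy linear classifier. The weights, bias, feature values, and `epsilon` are all made up for illustration and do not come from any real system; the technique shown is a simple sign-based perturbation of the kind used in gradient-sign attacks, not a description of any specific attack in the wild.

```python
# Toy linear classifier: predicts 1 if w.x + b > 0, else 0.
# All numbers below are hypothetical and chosen only for illustration.

def predict(weights, bias, features):
    score = sum(w * x for w, x in zip(weights, features)) + bias
    return 1 if score > 0 else 0

def sign(value):
    return (value > 0) - (value < 0)

def perturb(weights, features, epsilon, current_label):
    # Shift every feature by at most epsilon in the direction that
    # pushes the score away from the currently predicted class.
    direction = -1 if current_label == 1 else 1
    return [x + direction * epsilon * sign(w)
            for w, x in zip(weights, features)]

weights = [0.9, -0.6, 0.4]
bias = -0.1
epsilon = 0.06                      # maximum change allowed per feature

original = [0.30, 0.20, 0.10]
original_label = predict(weights, bias, original)

adversarial = perturb(weights, original, epsilon, original_label)
adversarial_label = predict(weights, bias, adversarial)

print(original_label)      # 1
print(adversarial_label)   # 0
```

No feature moves by more than `epsilon`, yet the predicted class flips. Real models are far more complex, but the same principle is why input validation and robustness testing belong in the development process.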

Discrepancy in Preparedness

The Global Cybersecurity Outlook 2025 reveals that while 66% of organizations expect AI to have a substantial impact on cybersecurity in the coming year, only 37% have processes in place to evaluate the security of AI systems before deployment. This gap underscores the urgency of adopting a more thorough and proactive approach to securing AI systems. Adequate preparedness requires allocating resources to cybersecurity initiatives and seeking expertise in AI-specific security requirements. The risks of inadequate preparedness are too significant to ignore, making investment in security measures crucial.

Organizations must move beyond traditional reactive cybersecurity strategies and adopt a proactive stance. This involves not only identifying existing vulnerabilities but also anticipating future threats. Many organizations are currently underprepared due to a lack of specialized knowledge or insufficient resources dedicated to AI security. Bridging this gap requires a concerted effort to educate stakeholders on the unique risks associated with AI, enhance internal capabilities, and develop robust evaluation processes prior to AI deployment. By fostering a culture of proactive security and continuous improvement, organizations can ensure their AI systems are resilient against evolving threats, thereby safeguarding their operations and reputation.

Implementing a Risk-Based Approach

Assessing Potential Vulnerabilities

A risk-based approach to AI adoption involves assessing potential vulnerabilities and risks associated with AI implementation. This approach ensures that AI initiatives remain aligned with organizational goals and risk tolerance. By evaluating the potential impact of these risks on the business, organizations can identify necessary controls to mitigate them. Risk assessment should be a continuous process that involves regularly updating threat models based on the latest cybersecurity trends and findings. These updates help maintain an up-to-date understanding of potential vulnerabilities, ensuring that implemented controls are appropriate and effective.

In assessing AI-related risks, organizations must consider various factors, including data integrity, model robustness, and system transparency. Developing a clear map of AI implementations within the organization aids in identifying critical areas requiring heightened security measures. Additionally, organizations should leverage threat intelligence and advanced analytics to pinpoint areas of susceptibility. Taking these precautionary steps not only helps prevent security breaches but also builds a robust defense system capable of responding to threats effectively. This approach ensures that AI adoption aligns with both the innovative goals of the organization and its security requirements, promoting secure and sustainable growth.
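One common way to turn such an assessment into priorities is a simple risk register that scores each risk as likelihood times impact and compares it with the organization's risk tolerance. The sketch below assumes a 1 to 5 scale for both factors; the system names, threat entries, scores, and the `TOLERANCE` threshold are hypothetical examples, not figures from any real assessment.

```python
from dataclasses import dataclass

@dataclass
class AIRisk:
    system: str       # hypothetical AI implementation from the inventory map
    threat: str
    likelihood: int   # 1 (rare) to 5 (almost certain), assumed scale
    impact: int       # 1 (negligible) to 5 (severe), assumed scale

    @property
    def score(self) -> int:
        return self.likelihood * self.impact

def prioritize(risks, tolerance):
    """Return risks whose score exceeds the tolerance, highest first."""
    above = [r for r in risks if r.score > tolerance]
    return sorted(above, key=lambda r: r.score, reverse=True)

# Illustrative register covering data integrity, model robustness,
# and system transparency.
register = [
    AIRisk("fraud-model", "data poisoning of training set", 2, 5),
    AIRisk("image-classifier", "adversarial inputs", 4, 3),
    AIRisk("demand-forecast", "opaque decision logic", 3, 2),
]

TOLERANCE = 8  # hypothetical threshold agreed with risk owners

for risk in prioritize(register, TOLERANCE):
    print(f"{risk.system}: {risk.threat} (score {risk.score})")
```

Risks at or below the threshold are accepted and kept under review, while those above it receive controls first. Because the register is plain data, it can be re-scored whenever threat models are updated.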

Aligning with Organizational Goals

For businesses already using AI, alignment starts with mapping existing implementations and applying security solutions to them. For others, it may involve conducting a risk-reward analysis to ensure AI adoption matches business objectives and operational needs. Either path fosters security by design and ensures that AI initiatives support the organization’s overall strategy. Engaging cross-functional teams from the early stages helps align AI projects with strategic objectives, yielding more comprehensive and secure outcomes. By embedding cybersecurity considerations into the foundational design and development of AI systems, organizations can avoid potential pitfalls and enhance system resilience.

Organizations should factor in their specific industry requirements, compliance obligations, and regulatory landscapes when aligning AI initiatives with security goals. This involves establishing clear policies and guidelines for AI development, employing rigorous testing and validation procedures, and ensuring ongoing oversight and maintenance. Collaboration between IT, cybersecurity, and business units is essential to create a cohesive strategy that integrates security objectives with business goals. By doing so, organizations can not only safeguard their AI investments but also achieve a competitive advantage through secure and innovative AI applications.

Integrating Cybersecurity into Governance

Embedding Controls in Business Processes

Organizations must consider AI systems within the broader context of their business processes and data flows. Integrating cybersecurity controls into governance structures and enterprise risk management processes can help minimize the impact of any potential failures in AI systems. This holistic approach ensures that cybersecurity is not an afterthought but an integral part of AI adoption. Effective governance involves developing policies that detail security protocols, assigning roles and responsibilities, and ensuring accountability at every level. It also requires continuous monitoring and auditing to assess the effectiveness of these controls and make necessary adjustments in response to new threats.

Embedding cybersecurity in AI governance means treating security as a fundamental component of all business activities, rather than a separate or secondary concern. This integration should extend across all levels of the organization, from executive leadership to operational teams. Involving stakeholders, including AI developers, IT personnel, and end-users, ensures a comprehensive understanding of potential risks and promotes a culture of shared responsibility. Regular training and awareness programs can further reinforce this culture, equipping employees with the knowledge and skills to recognize and respond to security threats effectively. By embedding robust cybersecurity practices into the fabric of the organization, businesses can better protect their AI systems and enhance overall resilience.

Building a Culture of Security

Leaders play a crucial role in championing a culture where cybersecurity is seen as integral to innovation rather than a hindrance. This involves strategic alignment of AI initiatives with a comprehensive cybersecurity framework to reassure stakeholders about the organization’s commitment to safeguarding digital assets. Building such a culture fosters trust and confidence in the organization’s AI-driven initiatives. Leaders must advocate for sustained investment in cybersecurity, prioritize transparency in risk communication, and establish clear channels for feedback and improvement. They must also recognize the importance of ethical AI practices, emphasizing responsible data use and ensuring compliance with privacy regulations.

Creating a security-first mindset requires ongoing efforts from leadership to embed these values into the organization’s core principles. Regular engagement with employees, clear communication of security goals, and recognition of best practices can solidify this culture. Leaders should also facilitate continuous learning opportunities to keep the workforce updated on emerging security trends and threats. By promoting a culture of security, organizations can enhance their defense mechanisms, reduce the risk of breaches, and create a resilient foundation for AI innovation. Building trust with stakeholders, including customers, partners, and regulators, solidifies the organization’s reputation as a secure and reliable entity capable of leveraging AI effectively.

Collaborative Responsible Innovation

Multistakeholder Approach

To address AI-driven vulnerabilities and safeguard investments, organizations should adopt a multistakeholder approach. This involves collaboration between AI and cybersecurity experts, regulators, and policymakers. By prioritizing risk-reward analysis and cybersecurity, these stakeholders can work together to foster responsible and resilient AI innovation. Engaging a diverse group of stakeholders ensures that multiple perspectives are considered, promoting more comprehensive and balanced security strategies. Public-private partnerships, industry consortiums, and academic collaborations can further enhance these efforts by combining knowledge, resources, and expertise to tackle complex security challenges.

Effective collaboration requires clear communication channels and defined roles among stakeholders. Establishing forums for regular dialogue, joint decision-making processes, and shared frameworks for risk assessment and mitigation can facilitate productive cooperation. Participating in industry standards development and adhering to best practices also helps align efforts across different organizations and sectors. By working together, stakeholders can address the broader implications of AI adoption, such as ethical considerations, regulatory compliance, and societal impact. This collaborative approach not only strengthens cybersecurity measures but also promotes the responsible and equitable use of AI technologies on a global scale.

Fostering Trust and Confidence

Embedding cybersecurity within every stage of AI implementation is fundamental to safeguarding sensitive data, maintaining resilience, and promoting responsible innovation. While AI brings substantial opportunities, it also broadens an organization’s exposure to cyber-attacks. By incorporating robust security measures, organizations can mitigate the risks associated with AI and help ensure that the benefits of adoption outweigh the drawbacks. This proactive stance also builds trust among stakeholders and clients, who can be assured that their data is handled securely. In conclusion, as AI continues to evolve and reshape various sectors, pairing AI advancements with stringent cybersecurity protocols remains essential for sustainable and responsible progress.