What happens when the tools designed to make life easier become the very instruments of digital destruction? Picture this: a busy professional skims an AI-generated summary of an email, trusts the concise directive to run a quick command, and unknowingly triggers a ransomware attack that locks down an entire corporate network. This isn’t a far-fetched scenario but the premise of the ClickFix attack, a proof-of-concept exploit that weaponizes artificial intelligence summaries to spread malware. As AI integrates deeper into daily digital interactions, this emerging threat reveals a sinister side to innovation, demanding immediate attention from individuals and organizations alike.

The Stakes of Trust in an AI-Driven World

The significance of this cyber threat cannot be overstated. With ransomware attacks costing businesses an estimated $20 billion annually and social engineering tactics evolving rapidly, ClickFix represents a new frontier in deception. Unlike traditional phishing emails that often raise red flags, this exploit hides behind the credibility of AI tools—think email clients or browser extensions that summarize content for convenience. The danger lies in the implicit trust placed in these summaries, making users far more likely to act on malicious instructions without a second thought. This isn’t just a technical glitch; it’s a profound breach of the confidence society has built in technology.

This growing menace underscores a broader trend in cybersecurity: as AI becomes ubiquitous, so does its potential for misuse. The ClickFix attack isn’t an isolated incident but a signal of how cybercriminals are shifting focus from exploiting systems to manipulating human behavior. With millions relying on AI for efficiency, the scale of potential damage is staggering, positioning this issue at the forefront of digital security challenges.

Unmasking the Mechanics of a Deceptive Exploit

At its core, the ClickFix attack is a masterclass in deception, blending technical cunning with psychological manipulation. Attackers embed malicious instructions into web pages, emails, or blog posts using obfuscation techniques such as CSS-hidden text (white-on-white), off-screen positioning, or zero-width characters. By overloading the content with these concealed directives, they push AI summarizers to prioritize harmful commands in their condensed outputs, often urging users to paste a dangerous PowerShell script into the Windows Run dialog.
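To see why hidden text reaches a summarizer at all, consider a minimal sketch of a text extractor that ignores CSS. It assumes the summarizer's front end simply collects text nodes, which may not match any particular product; the page content, the `NaiveTextExtractor` class, and the harmless `PLACEHOLDER-DIRECTIVE` string are hypothetical and stand in for an attacker's repeated instruction.

```python
from html.parser import HTMLParser


class NaiveTextExtractor(HTMLParser):
    """Collects every text node and ignores styling (assumed, simplified front end)."""

    def __init__(self):
        super().__init__()
        self.chunks = []

    def handle_data(self, data):
        text = data.strip()
        if text:
            self.chunks.append(text)


def extract_text(html: str) -> str:
    """Return all text in the markup, whether or not a browser would render it."""
    parser = NaiveTextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.chunks)


# Hypothetical page: one visible sentence plus a hidden directive repeated 5 times.
HIDDEN = '<span style="color:#fff;background:#fff;font-size:0">PLACEHOLDER-DIRECTIVE</span>'
PAGE = "<p>Quarterly report attached.</p>" + HIDDEN * 5

if __name__ == "__main__":
    text = extract_text(PAGE)
    print(text)
    # The reader sees one sentence; the extractor sees the directive five times.
    print(text.count("PLACEHOLDER-DIRECTIVE"))
```

A human reader of the rendered page sees only the first sentence, while the extracted text is dominated by the repeated directive, which is the imbalance the attack relies on.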

The brilliance—and terror—of this approach lies in its subtlety. Unlike overt phishing attempts, these instructions appear to come directly from trusted AI tools, bypassing the usual skepticism. A user might see a summary suggesting a quick system update or fix, only to initiate ransomware that encrypts critical data. This method exploits not just technology but the human tendency to trust automated recommendations, creating a perfect storm of vulnerability.

Historical parallels add weight to the threat’s credibility. Previous ClickFix-related tactics have seen threat actors impersonate major brands or infect streaming platforms with fake verification challenges, impacting diverse sectors. The addition of AI manipulation amplifies the reach, as tainted content can spread through search engines or targeted campaigns, potentially affecting countless users in a single sweep.

Voices from the Frontline of Cybersecurity

“This exploit doesn’t just hack systems; it hacks trust,” cautions Dharani Sanjaiy, a vulnerability researcher who has extensively studied this attack. Sanjaiy’s team found that users often follow AI-generated prompts without hesitation, with early tests showing a disturbingly high compliance rate due to the perceived legitimacy of the source. This psychological edge makes the attack uniquely effective, turning a tool of convenience into a weapon of chaos.

Cybersecurity professionals echo this concern, noting the rapid pace at which attackers adapt to new technologies. Reports from organizations hit by earlier ClickFix campaigns reveal the devastating aftermath of seemingly innocuous clicks—lost data, hefty ransoms, and months of recovery. As AI integration deepens across industries, experts warn that such exploits could become commonplace, urging a reevaluation of how much faith is placed in automated systems.

One industry analyst, speaking on condition of anonymity, highlighted the systemic challenge: “The more we rely on AI to filter and summarize, the more we open ourselves to tailored deception. It’s not just about patching software; it’s about rethinking user behavior.” This sentiment captures the dual battle—technical and human—that defines the fight against weaponized AI.

The Expanding Threat of Tech Turned Toxic

Beyond the immediate mechanics, the ClickFix exploit signals a troubling shift in the cyber threat landscape. Attackers are no longer content with brute-force hacks; they’re leveraging cutting-edge advancements to outpace defenses. With AI summarizers becoming standard in productivity suites, email platforms, and even mobile apps, the attack surface widens considerably, offering many more entry points for ransomware deployment.

This trend also reveals a harsh truth about innovation: every step forward creates a shadow of risk. As organizations rush to adopt AI for competitive advantage, insufficient safeguards often leave gaps that cybercriminals eagerly exploit. Studies suggest that over 60% of enterprises lack specific protocols for securing AI tools, a statistic that paints a grim picture of preparedness against such sophisticated attacks.

The potential scale of impact adds urgency to the issue. Malicious content crafted for this exploit can be indexed by search engines, shared on public forums, or sent directly to high-value targets, creating a ripple effect across digital ecosystems. This isn’t a niche problem but a pervasive one, demanding a collective response to curb the misuse of technology meant to enhance lives.

Building Defenses Against Invisible Threats

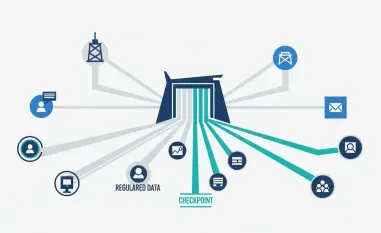

Combating an exploit as insidious as ClickFix requires a multi-layered approach that blends technology with awareness. One critical step is sanitizing AI inputs by preprocessing HTML content to remove suspicious CSS attributes like hidden text or tiny fonts before summaries are generated. Such measures prevent malicious instructions from slipping through the cracks of automated systems.
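The preprocessing step described above might look something like the following sketch, which drops text inside elements whose inline style suggests concealment and strips zero-width characters before anything is passed to a summarizer. The regular expression, the `VisibleTextExtractor` class, and the `sanitize` function are illustrative assumptions, not a vetted rule set. The sketch only inspects inline `style` attributes, so text hidden through external stylesheets or white-on-white color pairs would need a rendering-aware check, and it assumes reasonably well-formed markup with matching closing tags.

```python
import re
from html.parser import HTMLParser

# Illustrative heuristics for inline styles that commonly hide text.
SUSPICIOUS_STYLE = re.compile(
    r"display\s*:\s*none"
    r"|visibility\s*:\s*hidden"
    r"|opacity\s*:\s*0(?:\.0+)?\s*(?:;|$)"
    r"|font-size\s*:\s*[01](?:\.\d+)?(?:px|pt)?\s*(?:;|$)"
    r"|(?:left|top|text-indent)\s*:\s*-\d{3,}",
    re.IGNORECASE,
)
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img",
             "input", "link", "meta", "source", "track", "wbr"}
SKIPPED_TAGS = {"script", "style"}
# Translation table that deletes common zero-width characters.
ZERO_WIDTH = dict.fromkeys(map(ord, "\u200b\u200c\u200d\u2060\ufeff"))


class VisibleTextExtractor(HTMLParser):
    """Keeps text only when no enclosing element looks hidden."""

    def __init__(self):
        super().__init__()
        self.chunks = []
        self.hidden_depth = 0  # > 0 while inside a suspicious element

    def handle_starttag(self, tag, attrs):
        if tag in VOID_TAGS:
            return  # void elements have no closing tag and hold no text
        attr_map = dict(attrs)
        style = attr_map.get("style") or ""
        suspicious = (
            tag in SKIPPED_TAGS
            or "hidden" in attr_map
            or SUSPICIOUS_STYLE.search(style) is not None
        )
        # Once inside a hidden element, every nested element counts as hidden.
        if self.hidden_depth or suspicious:
            self.hidden_depth += 1

    def handle_endtag(self, tag):
        if tag not in VOID_TAGS and self.hidden_depth:
            self.hidden_depth -= 1

    def handle_data(self, data):
        if self.hidden_depth == 0:
            text = data.translate(ZERO_WIDTH).strip()
            if text:
                self.chunks.append(text)


def sanitize(html: str) -> str:
    """Return only the text that passes the visibility heuristics."""
    parser = VisibleTextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.chunks)
```

Filtering before summarization, rather than inspecting the summary afterward, means the model never sees the concealed directive in the first place; the trade-off is that overly broad heuristics can also discard legitimate content such as accessibility text.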

Equally important is deploying systems for payload recognition, designed to detect patterns in harmful scripts within AI outputs. At the enterprise level, strict policies on AI usage must be enforced, including scanning inbound documents and web content for concealed directives as part of secure gateways. These technical defenses form the backbone of a robust strategy to neutralize the threat before it reaches end users.
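As a rough illustration of payload recognition, the sketch below scans a generated summary for patterns that often accompany ClickFix-style instructions, such as a prompt to open the Run dialog or an encoded PowerShell command. The `INDICATORS` table and the `flag_summary` function are hypothetical names, and the pattern list is an assumption chosen for illustration; a production detector would need a maintained rule set and would still miss novel or obfuscated payloads.

```python
import re

# Hypothetical indicator list; names and patterns are illustrative only.
INDICATORS = {
    "powershell_invocation": re.compile(r"\bpowershell(?:\.exe)?\b", re.I),
    "encoded_command": re.compile(
        r"(?<!\S)-e(?:nc|ncodedcommand)?\s+[A-Za-z0-9+/=]{20,}", re.I
    ),
    "download_and_execute": re.compile(
        r"\b(?:iex|invoke-expression|invoke-webrequest|iwr)\b", re.I
    ),
    "run_dialog_prompt": re.compile(
        r"\bwin(?:dows)?\s*(?:key\s*)?\+\s*r\b", re.I
    ),
    "script_host": re.compile(r"\b(?:mshta|wscript|cscript|rundll32)\b", re.I),
}


def flag_summary(summary: str) -> list[str]:
    """Return the names of all indicators found in an AI-generated summary."""
    return [name for name, pattern in INDICATORS.items() if pattern.search(summary)]


if __name__ == "__main__":
    suspicious = "Press Win+R, then paste: powershell -enc " + "A" * 24
    print(flag_summary(suspicious))
    print(flag_summary("The meeting moved to Tuesday at 3 pm."))
```

A gateway or mail client could withhold or annotate any summary for which `flag_summary` returns a non-empty list, leaving the final decision to a reviewer rather than silently passing the instruction to the user.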

Human vigilance remains a cornerstone of protection. Training programs should emphasize the importance of questioning even AI-generated instructions, particularly those prompting system commands or script execution. By integrating these safeguards into email clients, browser extensions, and other tools—while fostering a culture of skepticism—organizations and individuals can significantly reduce their exposure to this evolving danger.

Reflecting on a Battle Fought and Lessons Learned

Looking back, the emergence of the ClickFix attack served as a stark reminder of technology’s dual nature—capable of immense good yet equally prone to exploitation. It exposed how deeply trust in AI had been woven into digital habits, and how swiftly that trust could be turned against unsuspecting users. The stories of breached systems and encrypted data lingered as cautionary tales in the cybersecurity community.

Yet, from those challenges came actionable progress. Organizations began fortifying their defenses with advanced sanitization tools and stricter AI policies, while user education campaigns gained traction in promoting critical thinking toward automated prompts. From there, the focus shifted to collaborative innovation: developing AI systems with built-in security layers and fostering industry-wide standards to outmaneuver adaptive threats. This ongoing effort stands as a testament to resilience, helping ensure that technology remains a tool for empowerment rather than a gateway for harm.